Building End-to-End Data Pipelines with Microsoft Fabric

In today's fast-paced digital landscape, managing data efficiently has become a cornerstone of success. Microsoft Fabric lets you build End-to-End Data Pipelines by integrating tools for ingestion, transformation, storage, analysis, and automation into a single platform. This unified approach reduces the need for multiple separate tools, which simplifies workflows and can lower overhead costs. By streamlining the entire data lifecycle, Microsoft Fabric shortens the path from raw data to actionable insights. Its focus on data consistency and reliability makes it a strong foundation for modern analytics.

Key Takeaways

Microsoft Fabric streamlines the entire data lifecycle by integrating tools for ingestion, transformation, storage, analysis, and automation into a single platform.

End-to-End Data Pipelines automate data processes, ensuring seamless flow from raw data to actionable insights, which reduces errors and saves time.

Utilizing a unified platform like Microsoft Fabric enhances collaboration among teams, improves data consistency, and simplifies workflows.

Advanced features such as AI and machine learning integration in Microsoft Fabric empower users to extract deeper insights and make data-driven decisions faster.

Scalability is a key advantage of Microsoft Fabric, allowing businesses to efficiently manage growing data volumes without compromising performance.

Automation and orchestration capabilities in Microsoft Fabric help reduce manual effort, minimize errors, and ensure reliable data pipelines.

Implementing best practices for security, compliance, and monitoring within Microsoft Fabric enhances data integrity and builds trust in analytics processes.

Understanding End-to-End Data Pipelines

What Are End-to-End Data Pipelines?

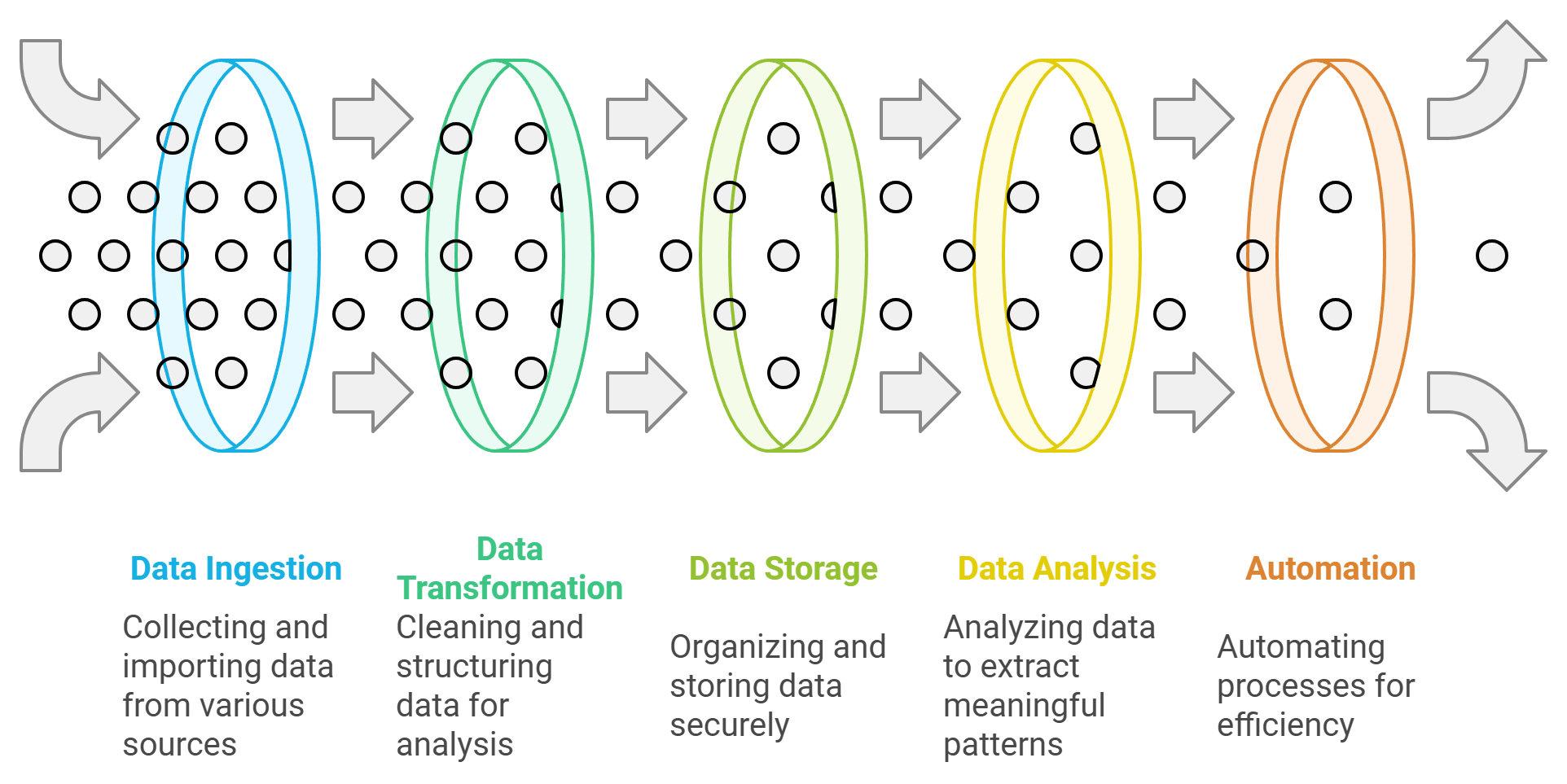

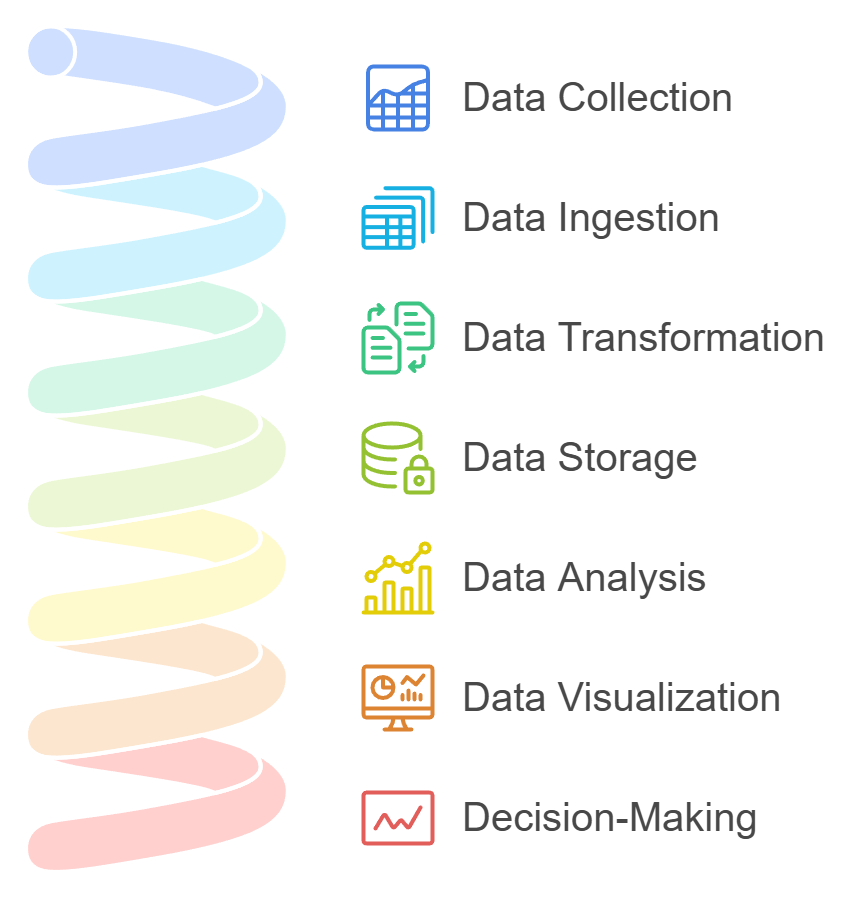

End-to-End Data Pipelines cover the complete journey of data, from its collection to its final use in decision-making. These pipelines handle every step, including data ingestion, transformation, storage, analysis, and visualization. By automating these processes, you can ensure that data flows across systems without manual intervention. This approach reduces bottlenecks and errors, enabling you to focus on deriving insights rather than managing data.

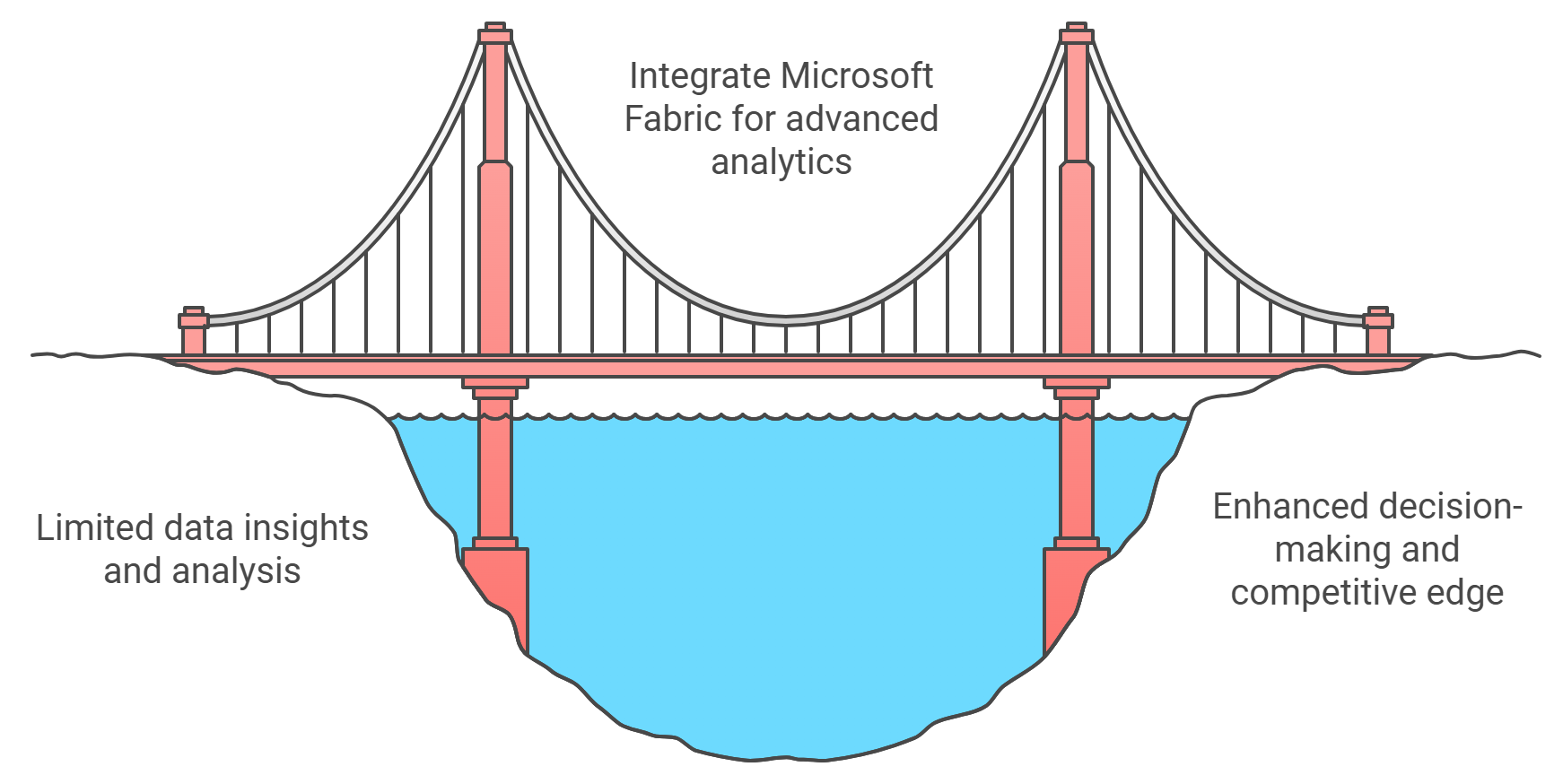

In simpler terms, an End-to-End Data Pipeline acts as a bridge that connects raw data to actionable insights. It ensures that data is collected from diverse sources, processed into meaningful formats, and delivered to the right tools for analysis. Whether you are working with structured or unstructured data, these pipelines provide a systematic way to manage and utilize your data effectively.

Importance of Unified Platforms for Data Workflows

Unified platforms like Microsoft Fabric play a critical role in simplifying data workflows. Instead of relying on multiple tools for different tasks, you can use a single platform to manage the entire data lifecycle. This integration reduces complexity and enhances efficiency, allowing you to achieve more with fewer resources.

"Microsoft Fabric consolidates data processes into an integrated system, addressing complexities in big data management and fostering streamlined workflows."

A unified platform ensures consistency across all stages of the pipeline. For example, Microsoft Fabric integrates data warehousing, engineering, analytics, and business intelligence into one solution. This integration not only saves time but also improves collaboration among teams. When everyone works within the same system, it becomes easier to maintain data integrity and security.

Additionally, unified platforms enhance scalability. As your data grows, you can rely on the platform's robust architecture to handle larger volumes without compromising performance. This scalability ensures that your data workflows remain efficient, even as your business expands.

By using a unified platform, you also gain access to advanced features like automation and AI-powered analytics. These capabilities enable you to optimize your workflows further, ensuring that your data pipelines deliver maximum value.

Overview of Microsoft Fabric for Data Pipelines

What is Microsoft Fabric?

Microsoft Fabric is a unified platform designed to simplify how you manage and analyze data. It integrates tools for data ingestion, processing, storage, and analytics into a single cohesive system. By combining these capabilities, it eliminates the need for multiple disconnected tools, making your data workflows more efficient and streamlined.

This platform serves as a comprehensive solution for modern data needs. It incorporates technologies from the Azure ecosystem, such as Azure Data Factory and Azure Synapse Analytics, to support real-time and batch data processing. Whether you are handling structured or unstructured data, Microsoft Fabric ensures that your data flows seamlessly from source to insight.

One of its standout features is its ability to act as a single source of truth. By consolidating data from diverse sources, it helps keep that data consistent and accurate. This foundation enables you to make reliable decisions based on trustworthy data. Additionally, Microsoft Fabric supports advanced analytics, including AI and machine learning, empowering you to unlock deeper insights and drive innovation.

"Microsoft Fabric brings together a wide range of data and analytics capabilities into a single platform, enabling businesses to harness the full potential of their data."

Key Features and Benefits for Data Pipelines

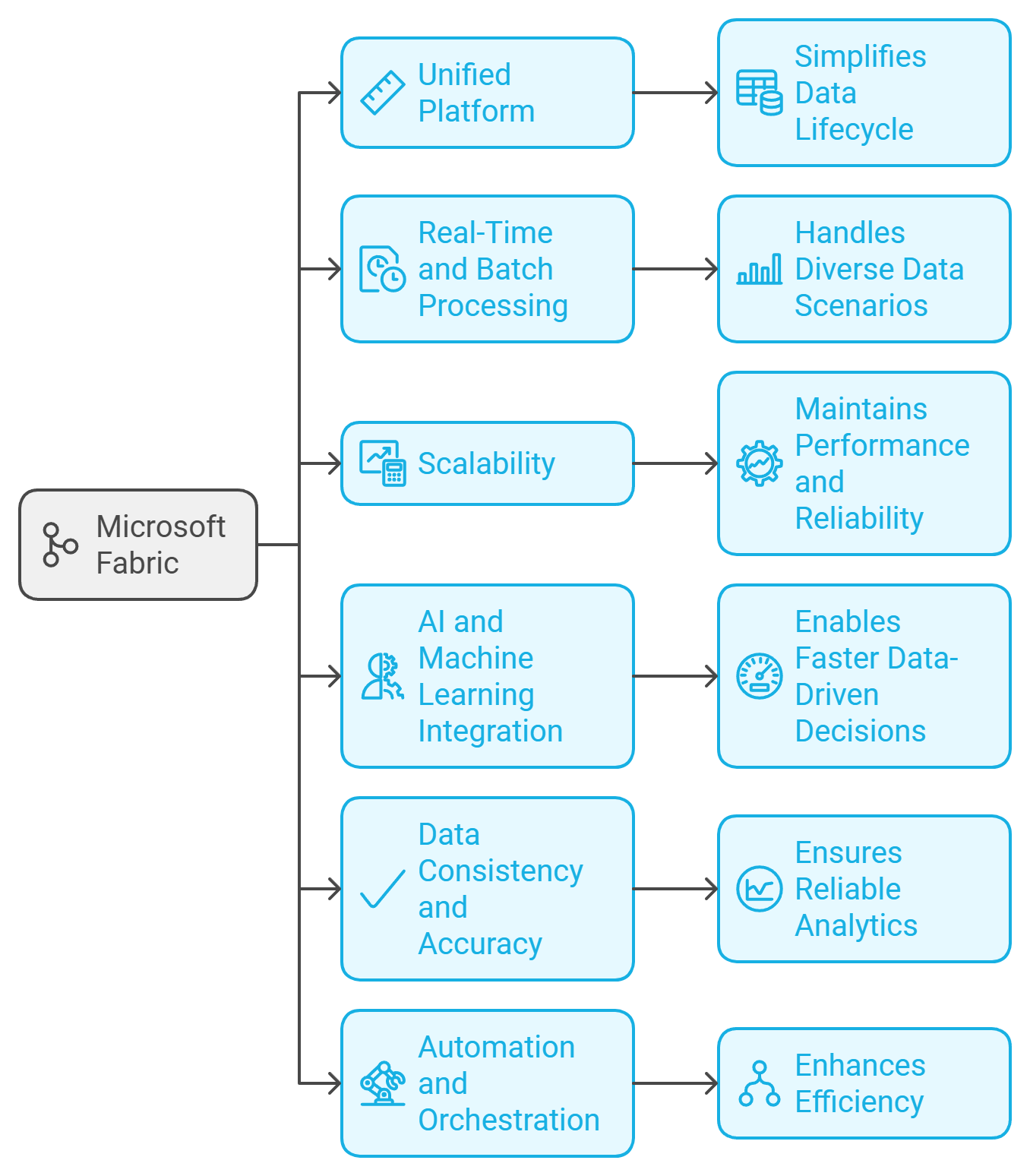

Microsoft Fabric offers a range of features that make it an ideal choice for building and managing End-to-End Data Pipelines. Here are some of its key features and the benefits they provide:

Unified Platform: By integrating tools for data engineering, data science, and analytics, Microsoft Fabric simplifies the entire data lifecycle. You can manage everything from ingestion to visualization without switching between platforms.

Real-Time and Batch Processing: With support for both real-time and batch data ingestion, you can handle diverse data scenarios. This flexibility ensures that your pipelines meet the demands of modern business environments.

Scalability: The platform's robust architecture allows you to scale your data workflows as your business grows. Whether you are dealing with small datasets or massive volumes, Microsoft Fabric maintains performance and reliability.

AI and Machine Learning Integration: Advanced analytics capabilities, including AI-powered insights, enable you to extract meaningful patterns from your data. These tools help you make data-driven decisions faster.

Data Consistency and Accuracy: By consolidating data into a single platform, Microsoft Fabric helps keep your data consistent and accurate across all stages of the pipeline. This reliability builds trust in your analytics processes.

Automation and Orchestration: The platform supports automation, allowing you to set up triggers and schedules for your workflows. This feature reduces manual effort and enhances efficiency.

"Microsoft Fabric stands as a cornerstone in the modern data landscape, offering unparalleled advantages to businesses seeking to harness the power of their data."

By leveraging these features, you can build robust End-to-End Data Pipelines that transform raw data into actionable insights. The platform's comprehensive capabilities make it a game-changer for organizations aiming to streamline their data workflows and achieve better outcomes.

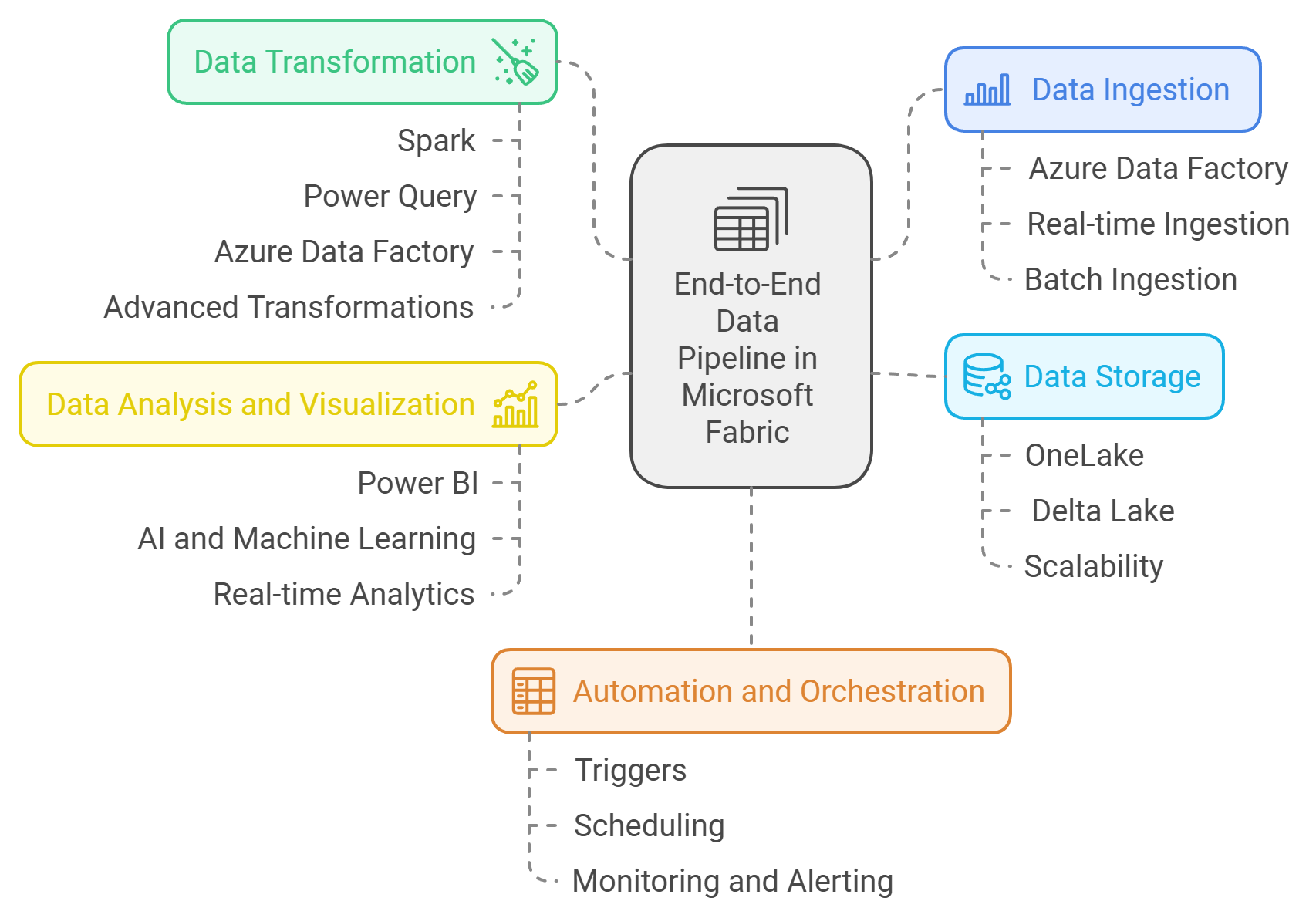

Key Components of an End-to-End Data Pipeline in Microsoft Fabric

Data Ingestion

Data ingestion serves as the foundation of any data pipeline. In Microsoft Fabric, you can connect to a wide range of data sources, including on-premises databases, cloud-based platforms, and streaming data feeds. This flexibility ensures that you can gather data from diverse systems without compatibility issues. The platform provides built-in connectors that simplify the process of bringing data into your pipeline, reducing the need for custom integrations.

With Data Factory in Microsoft Fabric, which builds on Azure Data Factory, and the platform's streaming capabilities, you can handle both real-time and batch data ingestion. Real-time ingestion allows you to process streaming data, enabling immediate insights and faster decision-making. Batch ingestion, on the other hand, is ideal for processing large volumes of data at scheduled intervals. By supporting both methods, Microsoft Fabric ensures that your pipelines can adapt to various business needs.
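The difference between the two ingestion styles can be sketched in plain Python. This is only an illustration of the idea, not a Microsoft Fabric API: the function names `batch_ingest` and `stream_ingest` and the sample events are hypothetical.

```python
from typing import Iterable, Iterator


def batch_ingest(source: list[int], batch_size: int) -> Iterator[list[int]]:
    """Yield fixed-size chunks, the way a scheduled batch load would."""
    for start in range(0, len(source), batch_size):
        yield source[start:start + batch_size]


def stream_ingest(source: Iterable[int]) -> Iterator[int]:
    """Yield each event individually, as soon as it is available."""
    for event in source:
        yield event


events = [1, 2, 3, 4, 5]  # hypothetical sample events
batches = list(batch_ingest(events, batch_size=2))
streamed = list(stream_ingest(events))
print(batches)
print(streamed)
```

Batch ingestion trades latency for throughput by moving several records at once, while streaming hands each record to the next stage immediately.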

"Microsoft Fabric makes it easy to bring together data from different parts of an organization, creating a seamless flow from source to insight."

Data Transformation

Once data is ingested, transformation becomes the next critical step. Raw data often comes in unstructured or semi-structured formats, making it unsuitable for analysis. Microsoft Fabric includes powerful tools like Spark and Power Query to help you clean, structure, and enrich your data. These tools allow you to perform tasks such as filtering, aggregating, and joining datasets with ease.

For more complex transformations, Microsoft Fabric provides Data Factory, which builds on the capabilities of Azure Data Factory. You can design workflows that automate the transformation process, ensuring consistency and accuracy across your pipelines. The platform also supports advanced transformations, such as applying machine learning models to enhance your data with predictive insights.

By streamlining the transformation process, Microsoft Fabric reduces manual effort and minimizes errors. This ensures that your data is ready for storage and analysis, saving you time and resources.

Data Storage

After transformation, storing data securely and efficiently becomes essential. Microsoft Fabric stores data in OneLake, its unified data lake, and uses the open Delta Lake table format for tabular data. Together they provide centralized and scalable storage, ensuring that your data remains accessible and reliable.

OneLake acts as a unified storage layer, consolidating data from various sources into a single repository. This centralization eliminates data silos, making it easier to manage and retrieve information. Delta Lake, on the other hand, enhances storage reliability by supporting ACID transactions. This ensures that your data remains consistent, even during updates or modifications.

"Microsoft Fabric's storage solutions adapt to growing needs while maintaining high levels of security and performance."

Scalability is another key feature of Microsoft Fabric's storage capabilities. As your data grows, the platform's architecture ensures that performance remains unaffected. This scalability allows you to focus on leveraging your data rather than worrying about infrastructure limitations.

Data Analysis and Visualization

Data analysis and visualization play a crucial role in transforming raw data into actionable insights. With Microsoft Fabric, you gain access to advanced tools that simplify this process and enhance your decision-making capabilities. The platform integrates seamlessly with Power BI, enabling you to create interactive reports and dashboards that provide a clear view of your data.

Power BI allows you to visualize data in various formats, such as charts, graphs, and maps. These visualizations help you identify trends, patterns, and anomalies quickly. By presenting data in an intuitive manner, you can communicate insights effectively to stakeholders. Additionally, the platform supports real-time analytics, ensuring that your visualizations reflect the most up-to-date information.

"Microsoft Fabric empowers you to uncover insights through AI-powered analytics, making data-driven decisions faster and more accurate."

The integration of AI and machine learning within Microsoft Fabric takes data analysis to the next level. You can leverage these capabilities to predict outcomes, identify correlations, and automate complex analyses. For instance, AI models can analyze historical data to forecast future trends, helping you stay ahead in a competitive market.

Moreover, Microsoft Fabric ensures that your data remains consistent and reliable throughout the analysis process. By consolidating data from diverse sources into a unified platform, it eliminates discrepancies and enhances the accuracy of your insights. This consistency builds trust in your analytics and supports better decision-making.

Automation and Orchestration

Automation and orchestration are essential for maintaining efficient and reliable data pipelines. Microsoft Fabric provides robust tools to automate repetitive tasks and orchestrate complex workflows, saving you time and effort. With features like triggers and scheduling, you can ensure that your data pipelines run smoothly without manual intervention.

The platform's orchestration capabilities allow you to design workflows that connect various stages of your pipeline. For example, you can set up a workflow that ingests data, transforms it, stores it, and then triggers an analysis process. This end-to-end automation ensures that your data flows seamlessly from source to insight.
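As a rough illustration of the ingest, transform, store, and analyze sequence described above, the following plain-Python sketch chains four stage functions. It does not call any Microsoft Fabric API. The function names and the sample records are hypothetical, and in Fabric each stage would be a pipeline activity rather than a local function.

```python
def ingest() -> list[dict]:
    """Pretend to read raw rows from a source system (hypothetical data)."""
    return [
        {"region": "north", "amount": "120.50"},
        {"region": "south", "amount": "80.00"},
        {"region": "north", "amount": "30.25"},
    ]


def transform(rows: list[dict]) -> list[dict]:
    """Convert the amount column from text to a number."""
    return [{**row, "amount": float(row["amount"])} for row in rows]


def store(rows: list[dict], table: list[dict]) -> list[dict]:
    """Append rows to an in-memory 'table' and return it."""
    table.extend(rows)
    return table


def analyze(table: list[dict]) -> float:
    """Compute a single metric (total amount) from the stored rows."""
    return sum(row["amount"] for row in table)


def run_pipeline() -> float:
    """Run the stages in order; the output of each stage feeds the next."""
    table: list[dict] = []
    raw = ingest()
    clean = transform(raw)
    stored = store(clean, table)
    return analyze(stored)


total = run_pipeline()
print(total)
```

Keeping each stage as a separate function mirrors how an orchestrated workflow separates activities, so a failure can be traced to one step.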

"Microsoft Fabric streamlines the scheduling and orchestration of complex jobs, ensuring consistent and reliable data pipelines."

By automating your workflows, you reduce the risk of errors and improve efficiency. The platform also supports monitoring and alerting, enabling you to track the performance of your pipelines and address issues proactively. This proactive approach minimizes downtime and ensures that your data pipelines deliver consistent results.

Scalability is another key advantage of Microsoft Fabric's automation features. As your data needs grow, the platform adapts to handle larger volumes and more complex workflows. This scalability ensures that your pipelines remain efficient and reliable, even as your organization expands.

Data Ingestion with Microsoft Fabric

Using Connectors for Diverse Data Sources

Data ingestion is the first step in building a robust data pipeline, and Microsoft Fabric simplifies this process with its extensive range of connectors. These connectors allow you to seamlessly integrate data from various sources, including on-premises databases, cloud platforms, and third-party applications. By using these pre-built connectors, you can eliminate the need for custom coding and reduce the time required to bring data into your pipeline.

For example, you can connect to popular platforms like Azure SQL Database, Salesforce, or even streaming data sources such as IoT devices. This flexibility ensures that you can gather data from diverse systems without compatibility issues. The platform also supports real-time ingestion, enabling you to process streaming data as it arrives. This capability is particularly useful for scenarios like monitoring live events or tracking customer interactions in real time.

"Microsoft Fabric provides a unified analytics solution that connects all your data in one place, making it easier to manage and analyze."

By leveraging these connectors, you can ensure that your data flows smoothly into the pipeline, regardless of its origin. This seamless integration not only saves time but also enhances the accuracy and reliability of your data.

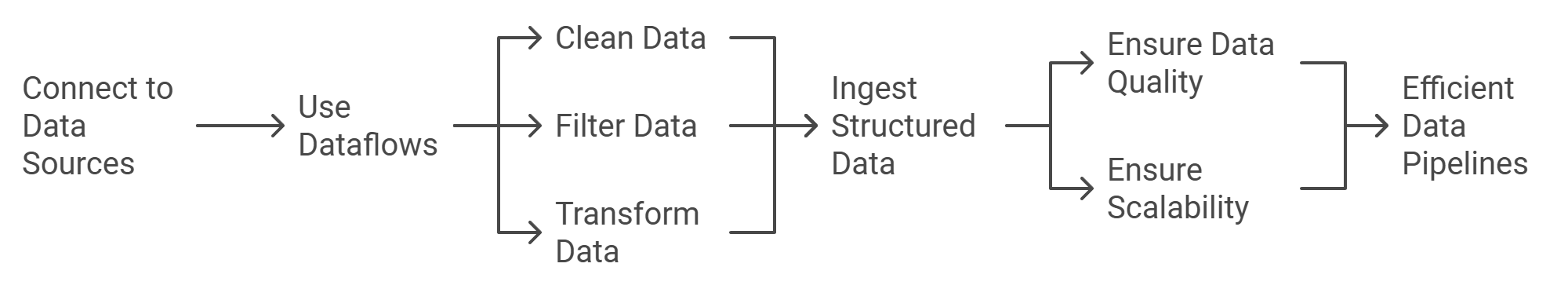

Leveraging Dataflows for Structured Ingestion

Once you have connected to your data sources, structuring the data becomes essential for effective analysis. Microsoft Fabric offers powerful tools like Dataflows to help you organize and prepare your data during the ingestion process. Dataflows allow you to define reusable data preparation logic, ensuring consistency across your pipelines.

With Dataflows, you can perform tasks such as cleaning, filtering, and transforming data before it enters the pipeline. For instance, you can remove duplicates, standardize formats, or apply business rules to ensure that the data meets your specific requirements. This structured approach reduces the need for manual intervention and minimizes errors.
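The kind of preparation logic described above (removing duplicates, standardizing formats, applying a business rule) can be sketched in plain Python. This is not Dataflow or Power Query code. The `clean_records` function, the field names, and the "reject negative amounts" rule are assumptions made for illustration.

```python
def clean_records(records: list[dict]) -> list[dict]:
    """Drop duplicate ids, normalise the country code, and apply one rule."""
    seen_ids: set[int] = set()
    cleaned: list[dict] = []
    for record in records:
        if record["id"] in seen_ids:
            continue  # remove duplicates (the first occurrence wins)
        seen_ids.add(record["id"])
        if record["amount"] < 0:
            continue  # hypothetical business rule: reject negative amounts
        cleaned.append({
            "id": record["id"],
            "country": record["country"].strip().upper(),  # standardise format
            "amount": record["amount"],
        })
    return cleaned


raw = [
    {"id": 1, "country": " us ", "amount": 10.0},
    {"id": 1, "country": "US", "amount": 10.0},
    {"id": 2, "country": "de", "amount": -5.0},
    {"id": 3, "country": "Fr", "amount": 7.5},
]
result = clean_records(raw)
print(result)
```

Defining the logic once as a function is the plain-Python analogue of reusable data preparation logic: every pipeline that calls it applies the same rules.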

"Microsoft Fabric streamlines the entire data lifecycle, from ingestion to actionable insights, by providing tools like Dataflows for structured data preparation."

Another advantage of using Dataflows is their ability to handle large datasets efficiently. Whether you are working with transactional data or unstructured logs, Dataflows can process and structure the data at scale. This scalability ensures that your pipelines remain efficient, even as your data grows.

By combining connectors and Dataflows, Microsoft Fabric enables you to build a strong foundation for your data pipelines. These tools simplify the ingestion process, enhance data quality, and ensure that your data is ready for the next stages of the pipeline.

Data Transformation in Microsoft Fabric

Preparing Data with Power Query

Power Query in Microsoft Fabric provides a user-friendly interface for preparing and transforming data. It allows you to clean, shape, and refine your data without requiring advanced coding skills. This tool is ideal for users who want to focus on data preparation while minimizing technical complexities.

With Power Query, you can perform essential tasks such as:

Removing duplicates to ensure data accuracy.

Filtering rows to focus on relevant information.

Splitting or merging columns for better organization.

Changing data types to standardize formats.

For example, if you are working with a dataset containing inconsistent date formats, Power Query enables you to standardize these formats quickly. This ensures that your data is ready for analysis without manual intervention.
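To make the date example concrete, here is a minimal Python sketch of the same idea. It is not Power Query (which uses its own M language). The list of accepted formats in `KNOWN_FORMATS` is an assumption, including the choice to read `05/03/2024` as day first, and month names are parsed using the default English locale.

```python
from datetime import datetime

# Assumed input formats; extend this tuple to match your own sources.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")


def to_iso_date(text: str) -> str:
    """Return the date as YYYY-MM-DD, trying each known format in turn."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # this format did not match, try the next one
    raise ValueError(f"Unrecognised date format: {text!r}")


dates = ["2024-03-05", "05/03/2024", "Mar 05, 2024"]
standardised = [to_iso_date(d) for d in dates]
print(standardised)
```

Raising an error for unknown formats, rather than guessing, keeps bad values from slipping silently into later stages.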

"Power Query simplifies data preparation by offering intuitive tools that streamline the transformation process."

Another advantage of Power Query is its ability to handle large datasets efficiently. Whether you are working with transactional data or unstructured logs, this tool processes data at scale, ensuring that your workflows remain smooth and reliable. By leveraging Power Query, you can save time and focus on deriving insights from your data.

Advanced Transformations with Data Factory

For more complex data transformation needs, Microsoft Fabric includes Data Factory, which builds on the capabilities of Azure Data Factory. This tool allows you to design and automate sophisticated workflows, ensuring consistency and accuracy across your pipelines.

Data Factory supports advanced transformations such as:

Aggregating data from multiple sources into a unified format.

Applying machine learning models to enrich data with predictive insights.

Creating workflows that automate repetitive tasks, reducing manual effort.

For instance, you can use Azure Data Factory to combine sales data from various regions into a single dataset. You can then apply business rules to calculate metrics like total revenue or average sales per region. This automation ensures that your data remains consistent and up-to-date.
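The regional sales example can be sketched in plain Python to show the logic involved. This is not a Data Factory API; the `summarise_by_region` function, the field names, and the sample figures are hypothetical.

```python
from collections import defaultdict


def summarise_by_region(*regional_sales: list[dict]) -> dict[str, dict[str, float]]:
    """Combine several sales lists and compute total and average per region."""
    amounts: dict[str, list[float]] = defaultdict(list)
    for dataset in regional_sales:
        for sale in dataset:
            amounts[sale["region"]].append(sale["amount"])
    return {
        region: {"total": sum(values), "average": sum(values) / len(values)}
        for region, values in amounts.items()
    }


# Hypothetical per-region extracts that would normally come from separate sources.
emea = [{"region": "EMEA", "amount": 100.0}, {"region": "EMEA", "amount": 300.0}]
apac = [{"region": "APAC", "amount": 50.0}]
summary = summarise_by_region(emea, apac)
print(summary)
```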

"Data Factory in Microsoft Fabric combines citizen and professional data integration capabilities, offering a modern experience for managing complex transformations."

The platform also provides connectivity to over 100 relational and non-relational databases, REST APIs, and other interfaces. This flexibility ensures that you can integrate data from diverse sources seamlessly. Additionally, Azure Data Factory supports real-time and batch processing, enabling you to handle both streaming and static data effectively.

By using Azure Data Factory, you can build robust workflows that adapt to your organization's growing data needs. Its scalability and reliability make it an essential tool for managing advanced transformations in your data pipelines.

Data Storage Solutions in Microsoft Fabric

Centralized Storage with OneLake

OneLake serves as the cornerstone of centralized data storage in Microsoft Fabric, offering a unified repository for all your data needs. This solution consolidates data from various sources into a single, easily accessible location. By eliminating data silos, you can streamline your workflows and ensure that your teams work with consistent and reliable data.

One of the key advantages of OneLake is its ability to simplify data management. You can store structured, semi-structured, and unstructured data in one place, making it easier to organize and retrieve information. This centralization reduces the complexity of managing multiple storage systems and enhances collaboration across departments.

"OneLake provides a unified storage layer, enabling seamless integration of data from diverse sources into a single repository."

OneLake also supports advanced features like versioning and metadata management. These capabilities allow you to track changes to your data and maintain a clear audit trail. For example, if you need to analyze historical trends, you can access previous versions of your datasets without any hassle. This feature ensures that your data remains accurate and trustworthy over time.

Scalability is another strength of OneLake. As your data grows, the platform adapts to handle larger volumes without compromising performance. This scalability ensures that your storage solution remains efficient, even as your organization expands. By leveraging OneLake, you can focus on deriving insights from your data rather than worrying about storage limitations.

Scalable and Reliable Storage with Delta Lake

Delta Lake complements OneLake by providing scalable and reliable storage solutions that enhance data consistency and performance. Built on open-source technology, Delta Lake introduces features like ACID transactions, which ensure that your data remains accurate and consistent, even during updates or modifications.

With Delta Lake, you can perform complex operations like merging, updating, and deleting data without risking inconsistencies. For instance, if you need to update a large batch of customer records in a table, Delta Lake commits the changes as a single transaction, so readers see either all of the updates or none of them. This reliability builds trust in your data and supports better decision-making.
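The all-or-nothing behaviour of a merge can be illustrated with a small in-memory sketch. This is plain Python, not the Delta Lake API, and it only models atomicity (not isolation or durability). The `atomic_merge` function and the customer fields are hypothetical.

```python
import copy


def atomic_merge(table: dict[int, dict], updates: list[dict]) -> dict[int, dict]:
    """Upsert rows by customer_id; return a new table only if every row is valid."""
    staged = copy.deepcopy(table)  # work on a copy so the original stays untouched
    for row in updates:
        if "customer_id" not in row or "email" not in row:
            raise ValueError(f"Invalid row, nothing committed: {row!r}")
        staged[row["customer_id"]] = {"email": row["email"]}  # update or insert
    return staged  # "commit": the caller swaps in the new version


customers = {1: {"email": "a@example.com"}}
customers = atomic_merge(customers, [
    {"customer_id": 1, "email": "a.new@example.com"},  # existing row: update
    {"customer_id": 2, "email": "b@example.com"},      # new row: insert
])

try:
    # The second row is invalid, so the first row must not be applied either.
    atomic_merge(customers, [
        {"customer_id": 3, "email": "c@example.com"},
        {"customer_id": 4},
    ])
    failed = False
except ValueError:
    failed = True

print(customers, failed)
```

Because changes are staged on a copy and only returned on success, a failed merge leaves the existing table exactly as it was.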

"Delta Lake enhances storage reliability by supporting ACID transactions, ensuring data consistency across all operations."

Another standout feature of Delta Lake is its ability to handle large-scale data processing. Whether you are working with batch or streaming data, Delta Lake ensures that your pipelines run smoothly and efficiently. This capability makes it an ideal choice for organizations dealing with massive datasets or real-time analytics.

Delta Lake also integrates seamlessly with other tools in Microsoft Fabric, such as Data Factory and Power BI. This integration allows you to create end-to-end workflows that connect data ingestion, transformation, storage, and analysis. By using Delta Lake, you can build robust pipelines that deliver actionable insights with minimal effort.

In addition to scalability and reliability, Delta Lake offers advanced features like schema enforcement and time travel. Schema enforcement ensures that your data adheres to predefined structures, reducing errors and improving data quality. Time travel enables you to access historical versions of your data, making it easier to analyze trends and identify patterns.
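Schema enforcement and time travel can both be pictured with a toy versioned table. The sketch below is plain Python and does not use the Delta Lake API. The `VersionedTable` class is hypothetical and keeps full copies of each version, whereas Delta Lake itself reconstructs versions from a transaction log.

```python
class VersionedTable:
    """Append-only table that validates rows and keeps every committed version."""

    def __init__(self, schema: dict[str, type]) -> None:
        self.schema = schema
        self.versions: list[list[dict]] = [[]]  # version 0 is the empty table

    def append(self, rows: list[dict]) -> int:
        """Validate rows against the schema, then commit a new version."""
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"Columns do not match schema: {sorted(row)}")
            for column, expected in self.schema.items():
                if not isinstance(row[column], expected):
                    raise TypeError(f"{column} must be {expected.__name__}")
        self.versions.append(self.versions[-1] + rows)
        return len(self.versions) - 1  # the new version number

    def read(self, version: int = -1) -> list[dict]:
        """Return the rows as of the given version (latest by default)."""
        return list(self.versions[version])


table = VersionedTable({"id": int, "name": str})
v1 = table.append([{"id": 1, "name": "Ada"}])
v2 = table.append([{"id": 2, "name": "Grace"}])

try:
    table.append([{"id": "3", "name": "Linus"}])  # wrong type for id: rejected
    rejected = False
except TypeError:
    rejected = True

print(table.read(v1))  # the table as it looked after the first append
print(table.read())    # the latest version
```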

By combining the strengths of OneLake and Delta Lake, Microsoft Fabric provides a comprehensive storage solution that meets the demands of modern data workflows. These tools empower you to manage your data effectively, ensuring that your pipelines deliver consistent and reliable results.

Data Analysis and Visualization in Microsoft Fabric

Creating Reports and Dashboards with Power BI

Power BI in Microsoft Fabric empowers you to create visually engaging reports and dashboards that transform raw data into actionable insights. This tool allows you to present complex information in a simplified and interactive format, making it easier to understand trends and patterns.

You can use Power BI to design dashboards tailored to your specific needs. For instance, you might create a sales performance dashboard that highlights key metrics like revenue, customer acquisition, and regional performance. These dashboards provide a clear overview of your data, enabling you to make informed decisions quickly.

"Power BI enables users to visualize data in real-time, fostering better decision-making and collaboration."

The drag-and-drop interface in Power BI simplifies the process of building reports. You can choose from a variety of visualization options, such as bar charts, line graphs, and heat maps, to represent your data effectively. Additionally, the tool supports real-time data updates, ensuring that your reports always reflect the latest information.

Collaboration becomes seamless with Power BI. You can share your dashboards with team members or stakeholders, allowing everyone to access the same insights. This shared understanding promotes alignment and enhances decision-making across your organization.

Gaining Insights with AI-Powered Analytics

Microsoft Fabric integrates advanced AI-powered analytics to help you uncover deeper insights from your data. These capabilities allow you to go beyond traditional analysis and explore predictive and prescriptive insights.

AI models within the platform can analyze historical data to forecast future trends. For example, you can predict customer behavior, such as purchase patterns or churn rates, using machine learning algorithms. These predictions enable you to take proactive measures to improve outcomes.

"AI-powered analytics in Microsoft Fabric unlocks the potential of your data by identifying patterns and providing actionable recommendations."

The platform also supports natural language processing (NLP), which allows you to interact with your data using simple queries. You can ask questions like, "What were the top-performing products last quarter?" and receive instant answers in the form of visualizations or summaries. This feature makes data analysis accessible even to non-technical users.

Another advantage of AI-powered analytics is anomaly detection. The system can automatically identify outliers or unusual patterns in your data, helping you address potential issues before they escalate. For instance, if sales drop unexpectedly in a specific region, the platform can alert you to investigate further.
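One simple way to picture anomaly detection is a z-score check, sketched below in plain Python. This is an illustrative technique chosen for the example, not a description of how Microsoft Fabric detects anomalies. The threshold of 2.0 and the weekly sales figures are assumptions.

```python
from statistics import mean, stdev


def find_anomalies(values: list[float], threshold: float = 2.0) -> list[int]:
    """Return the indexes whose z-score magnitude exceeds the threshold."""
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation; needs at least 2 values
    if sigma == 0:
        return []  # all values are identical, so nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical weekly sales for one region, with a sudden drop in week 9.
weekly_sales = [100, 102, 98, 101, 99, 103, 97, 100, 40, 101]
outliers = find_anomalies(weekly_sales)
print(outliers)
```

Here the drop to 40 lies almost three standard deviations below the mean, so only that week is flagged for investigation.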

By leveraging AI-powered analytics, you can enhance your decision-making process and gain a competitive edge. These tools enable you to extract maximum value from your data, ensuring that your organization stays ahead in a data-driven world.

Automating and Orchestrating Data Pipelines

Setting Up Pipelines and Triggers

Automation begins with setting up pipelines that connect the various stages of your data workflow. In Microsoft Fabric, you can design these pipelines to handle tasks like data ingestion, transformation, storage, and analysis. The platform provides an intuitive interface that allows you to create workflows without requiring extensive coding knowledge. This accessibility ensures that both technical and non-technical users can build efficient pipelines.

Triggers play a crucial role in automation. They initiate specific actions within your pipeline based on predefined conditions. For example, you can configure a trigger to start a data ingestion process whenever new data arrives in a source system. This eliminates the need for manual intervention, ensuring that your workflows remain consistent and reliable.

"Microsoft Fabric streamlines automation by enabling users to set up triggers that respond to real-time events or scheduled tasks."

To set up triggers, you can choose from options like time-based schedules, event-based triggers, or manual execution. Time-based triggers are ideal for recurring tasks, such as daily data updates. Event-based triggers respond to changes in your data environment, ensuring immediate action when needed. Manual triggers provide flexibility, allowing you to execute workflows on demand.
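The three trigger types can be sketched as simple checks against the current state of the environment. This is plain Python for illustration only, not a Fabric trigger definition. `PipelineState`, its fields, and the 02:00 nightly hour are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class PipelineState:
    """Hypothetical snapshot of what the orchestrator can observe."""
    now: datetime
    new_files: int = 0
    manual_request: bool = False


def schedule_due(state: PipelineState, hour: int) -> bool:
    """Time-based trigger: fire at the top of the configured hour."""
    return state.now.hour == hour and state.now.minute == 0


def event_occurred(state: PipelineState) -> bool:
    """Event-based trigger: fire when new data has arrived."""
    return state.new_files > 0


def manually_requested(state: PipelineState) -> bool:
    """Manual trigger: fire when a user asks for a run."""
    return state.manual_request


def fired_triggers(state: PipelineState, nightly_hour: int = 2) -> list[str]:
    """Return the names of all triggers that would start the pipeline."""
    checks = {
        "time": schedule_due(state, nightly_hour),
        "event": event_occurred(state),
        "manual": manually_requested(state),
    }
    return [name for name, fired in checks.items() if fired]


print(fired_triggers(PipelineState(now=datetime(2024, 1, 1, 2, 0))))
print(fired_triggers(PipelineState(now=datetime(2024, 1, 1, 9, 30), new_files=3)))
```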

By combining pipelines and triggers, you can create a seamless flow of data across your organization. This automation reduces errors, saves time, and ensures that your data pipelines operate efficiently.

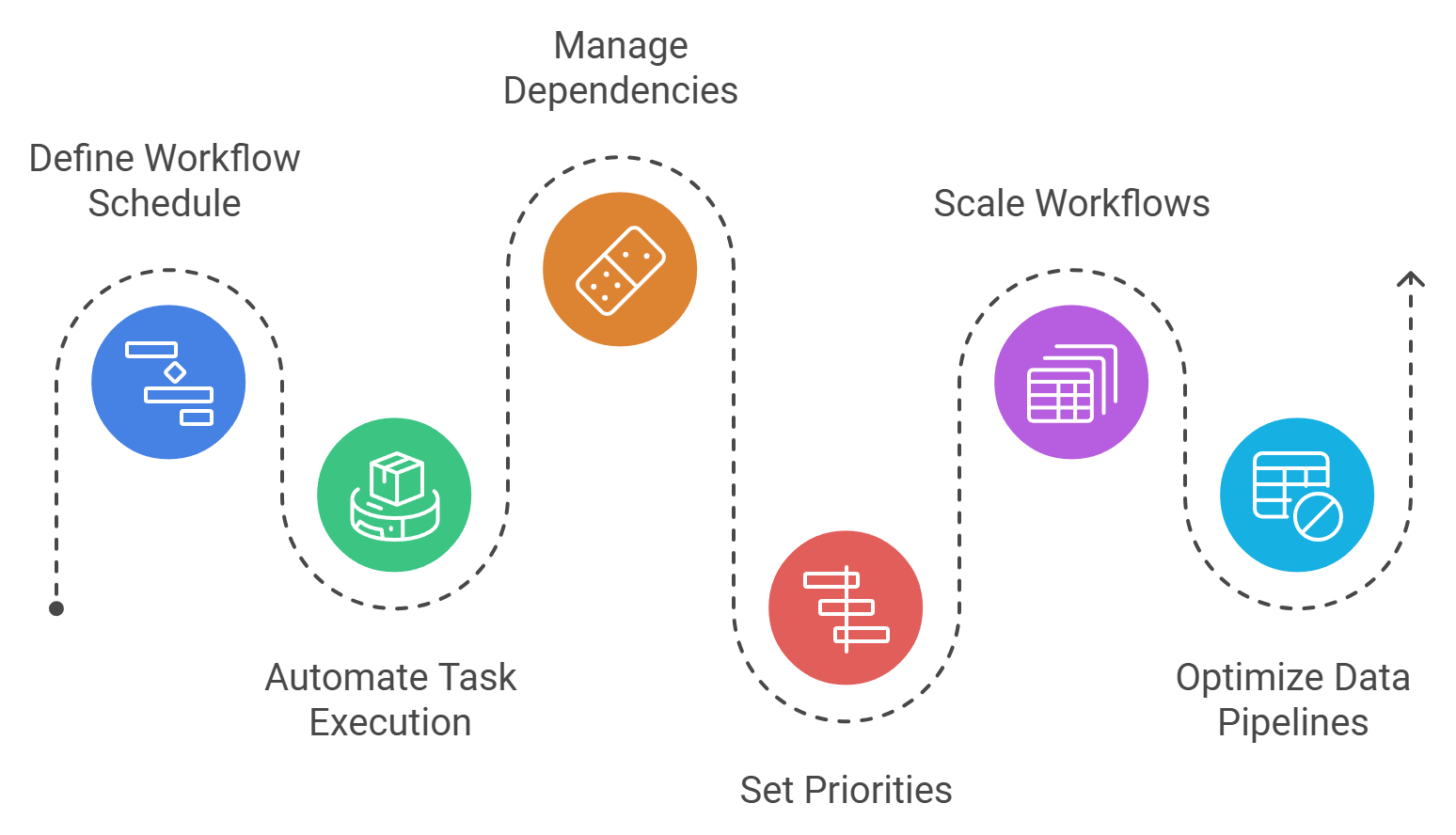

Scheduling Workflows for Efficiency

Scheduling workflows is essential for maintaining efficiency in your data pipelines. Microsoft Fabric offers robust scheduling capabilities that allow you to automate repetitive tasks and optimize resource usage. With these tools, you can ensure that your workflows run at the right time, delivering results when you need them most.

To schedule a workflow, you define the frequency and timing of its execution. For instance, you might schedule a data transformation process to run every night, ensuring that your datasets are ready for analysis each morning. This approach minimizes delays and keeps your data pipelines aligned with your business needs.
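The nightly schedule described above boils down to computing the next run time from a fixed time of day. A minimal Python sketch follows. The `next_run` function and the 01:30 start time are hypothetical, and time zones are ignored for simplicity.

```python
from datetime import datetime, time, timedelta


def next_run(now: datetime, run_at: time) -> datetime:
    """Return the next datetime at which a daily job should start."""
    candidate = datetime.combine(now.date(), run_at)
    if candidate <= now:
        candidate += timedelta(days=1)  # today's slot has already passed
    return candidate


nightly = time(hour=1, minute=30)  # assumed nightly start time
late_evening = next_run(datetime(2024, 6, 10, 23, 15), nightly)
just_after_midnight = next_run(datetime(2024, 6, 10, 0, 15), nightly)
print(late_evening)
print(just_after_midnight)
```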

"Efficient scheduling in Microsoft Fabric ensures that workflows run seamlessly, reducing downtime and enhancing productivity."

The platform also supports advanced scheduling features, such as dependency management and priority settings. Dependency management ensures that workflows execute in the correct order, preventing conflicts or errors. Priority settings allow you to allocate resources effectively, ensuring that critical tasks receive the attention they require.
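Dependency management amounts to running workflows in an order that respects what each one depends on. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later) to compute such an order. The workflow names are hypothetical, and this is not how Fabric is configured, only an illustration of the ordering idea.

```python
from graphlib import TopologicalSorter

# Hypothetical workflows; each key maps to the workflows it depends on.
dependencies = {
    "ingest_sales": set(),
    "ingest_customers": set(),
    "transform": {"ingest_sales", "ingest_customers"},
    "load_warehouse": {"transform"},
    "refresh_report": {"load_warehouse"},
}

# static_order() yields every workflow after all of its dependencies.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

The two ingestion workflows have no dependency on each other, so they may appear in either order, but `transform` always runs after both.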

Scheduled workflows also scale with your data. As volumes grow and workflows become more complex, the platform adapts so that your pipelines remain efficient, even as your organization expands.

By leveraging scheduling tools, you can optimize your data workflows, reduce manual effort, and focus on deriving insights from your data. These capabilities make Microsoft Fabric an invaluable tool for automating and orchestrating data pipelines.

Step-by-Step Guide to Building End-to-End Data Pipelines

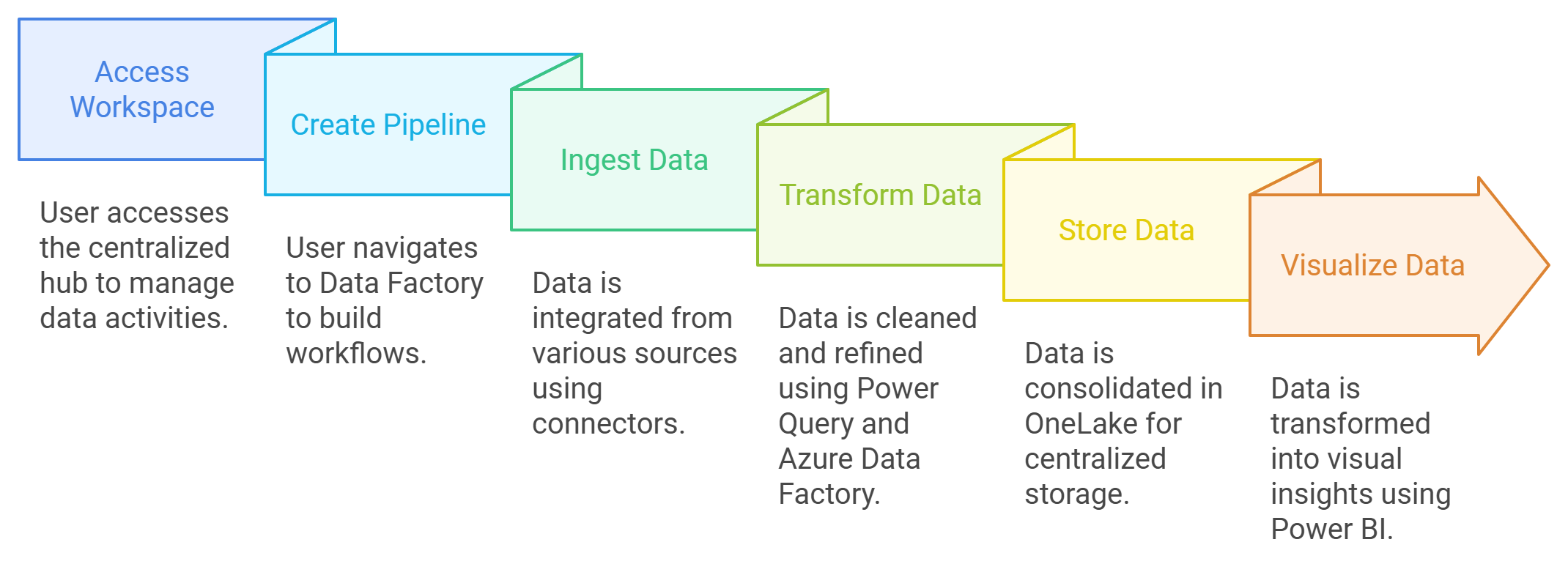

Setting Up Your Environment in Microsoft Fabric

Before you begin building your pipeline, you need to set up your environment in Microsoft Fabric. Start by accessing your workspace within the platform. This workspace acts as a centralized hub where you can manage all your data activities, including ingestion, transformation, and analysis.

To create a new pipeline, navigate to the Data Factory section of your workspace. Here, you will find tools designed to streamline the process of building and orchestrating workflows. Use the intuitive interface to define the structure of your pipeline. You can add components like data sources, transformation steps, and storage destinations.

"Microsoft Fabric simplifies the setup process by providing a unified workspace for managing data pipelines."

Ensure that your environment is configured to handle the specific requirements of your data. For example, if you plan to work with real-time data, enable streaming capabilities. If your focus is on batch processing, configure the necessary schedules and triggers. This preparation ensures that your pipeline operates efficiently from the start.

Ingesting Data from Multiple Sources

Data ingestion is the first step in building a robust pipeline. Microsoft Fabric offers a wide range of connectors that allow you to integrate data from various sources. These connectors support platforms like Azure SQL Database, Salesforce, and even IoT devices. By leveraging these pre-built integrations, you can save time and reduce the complexity of custom coding.

To ingest data, select the appropriate connector for your source system. Configure the connection settings, such as authentication credentials and data formats. Once connected, you can choose between real-time and batch ingestion methods. Real-time ingestion is ideal for scenarios requiring immediate insights, while batch ingestion works well for processing large datasets at scheduled intervals.

"With Microsoft Fabric, you can seamlessly connect to diverse data sources, ensuring a smooth flow of information into your pipeline."

After setting up the connection, use Dataflows to structure and prepare the ingested data. This tool allows you to clean and organize your data during the ingestion process, ensuring consistency and accuracy. For example, you can remove duplicates, standardize formats, or apply business rules to meet your specific requirements.
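
To make these cleaning steps concrete, here is a minimal Python sketch of the same logic. It is not Dataflow code. The field names (`customer_id`, `email`, `country`, `amount`) and the rule that negative amounts become zero are assumptions chosen only for illustration.

```python
def clean_records(records):
    """Drop duplicate rows by customer_id and normalise a few fields."""
    seen = set()
    cleaned = []
    for row in records:
        key = row["customer_id"]
        if key in seen:
            continue  # keep only the first occurrence of each customer
        seen.add(key)
        cleaned.append({
            "customer_id": key,
            "email": row["email"].strip().lower(),      # standardise format
            "country": row["country"].strip().upper(),  # standardise format
            # Hypothetical business rule: negative amounts are treated as 0.
            "amount": max(row["amount"], 0.0),
        })
    return cleaned


raw = [
    {"customer_id": 1, "email": " Ada@Example.com ", "country": "gb", "amount": 120.0},
    {"customer_id": 1, "email": "ada@example.com", "country": "GB", "amount": 120.0},
    {"customer_id": 2, "email": "bob@example.com", "country": " us", "amount": -5.0},
]
cleaned = clean_records(raw)
```

In this sketch the second row is dropped as a duplicate, and the remaining rows come out with lower-case emails, upper-case country codes, and non-negative amounts.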

Transforming and Cleaning Data

Once your data is ingested, the next step is transformation. Raw data often contains inconsistencies or irrelevant information, making it unsuitable for analysis. Microsoft Fabric provides tools like Power Query and Data Factory to help you clean and refine your data.

Use Power Query for basic transformations. This tool offers a user-friendly interface where you can perform tasks like filtering rows, splitting columns, and changing data types. For example, if your dataset includes inconsistent date formats, you can standardize them with just a few clicks. These transformations ensure that your data is ready for further processing.
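
The date example can be sketched outside Power Query as well. The following Python snippet is only an approximation of what the change-type step achieves. The list of accepted input formats is an assumption, and values that match none of them are returned as `None` rather than guessed.

```python
from datetime import datetime

# Assumed input formats; extend this tuple to match your own data.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")


def to_iso_date(text):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next format
    return None


dates = [to_iso_date(value) for value in ["2024-03-05", "05/03/2024", "05.03.2024"]]
```

All three inputs resolve to the same ISO date here because the slash and dot formats are assumed to be day-first. If your sources mix day-first and month-first dates, the format has to be decided per source, since the text alone is ambiguous.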

"Power Query simplifies data preparation, allowing you to focus on extracting insights rather than fixing errors."

For more advanced transformations, turn to Data Factory in Microsoft Fabric. It lets you design workflows that automate the transformation process: you can aggregate data from multiple sources, apply machine learning models, or create calculated fields based on business logic. Automation reduces manual effort and ensures consistency across your pipeline.
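
As a small illustration of aggregation combined with a calculated field, the Python sketch below merges rows from two sources and totals revenue per region. The source names, the row fields, and the formula `revenue = units * unit_price` are assumptions made for the example, not part of any Fabric workflow definition.

```python
from collections import defaultdict


def revenue_by_region(*sources):
    """Combine rows from several sources and total revenue per region."""
    totals = defaultdict(float)
    for source in sources:
        for row in source:
            # Calculated field (assumed business logic): units * unit_price.
            totals[row["region"]] += row["units"] * row["unit_price"]
    return dict(totals)


web_orders = [
    {"region": "EMEA", "units": 2, "unit_price": 10.0},
    {"region": "APAC", "units": 1, "unit_price": 50.0},
]
store_orders = [
    {"region": "EMEA", "units": 3, "unit_price": 10.0},
]
totals = revenue_by_region(web_orders, store_orders)
```

The same shape of logic, combining inputs and then deriving and grouping a value, is what an automated transformation step performs on each run.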

By combining these tools, you can transform raw data into a structured format that meets your analytical needs. This step is crucial for building reliable and efficient End-to-End Data Pipelines.

Storing Data in OneLake

OneLake in Microsoft Fabric provides a centralized storage solution that simplifies how you manage and access your data. It consolidates data from multiple sources into a single repository, eliminating the challenges of data silos. This unified approach ensures that you can store structured, semi-structured, and unstructured data in one place, making it easier to organize and retrieve information.

One of the standout features of OneLake is its scalability. As your data grows, the platform adapts to handle larger volumes without compromising performance. This scalability ensures that your storage infrastructure remains efficient, even as your organization expands. Additionally, because lakehouse tables in OneLake are stored in the Delta Lake format, you can use table versioning to track changes and access previous versions of your datasets. This capability is invaluable for maintaining data accuracy and analyzing historical trends.

"OneLake acts as a unified storage layer, enabling seamless integration of data from diverse sources into a single repository."

Security is another critical aspect of OneLake. The platform incorporates robust security measures to protect sensitive information, ensuring compliance with industry standards. By using OneLake, you can focus on leveraging your data for insights while trusting that it is stored securely and reliably.

Visualizing Data with Power BI

Power BI in Microsoft Fabric empowers you to transform raw data into meaningful visualizations. This tool enables you to create interactive dashboards and reports that present complex information in an easy-to-understand format. With its drag-and-drop interface, you can design visualizations tailored to your specific needs without requiring advanced technical skills.

For example, you can build a sales performance dashboard that highlights key metrics such as revenue, customer acquisition, and regional trends. These visualizations help you identify patterns and make informed decisions quickly. Power BI also supports real-time data updates, ensuring that your dashboards always reflect the latest information.

"Power BI enables users to visualize data in real-time, fostering better decision-making and collaboration."

Collaboration becomes seamless with Power BI. You can share your dashboards with team members or stakeholders, allowing everyone to access the same insights. This shared understanding enhances communication and ensures alignment across your organization. Additionally, Power BI integrates AI-powered analytics, enabling you to uncover deeper insights and predict future trends.

Automating the Pipeline Workflow

Automation is a cornerstone of efficient data pipelines, and Microsoft Fabric excels in this area. The platform allows you to automate repetitive tasks and orchestrate complex workflows, saving time and reducing errors. By setting up pipelines in Data Factory, you can connect various stages of your workflow, such as data ingestion, transformation, storage, and analysis.

Triggers play a vital role in automation. You can configure triggers to initiate specific actions based on predefined conditions. For instance, a trigger can start a data ingestion process whenever new data arrives in a source system. This ensures that your workflows run consistently without manual intervention.
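
The idea behind such an event-based trigger can be sketched in a few lines of Python. This is a conceptual model only. The function names and the file-based source are hypothetical, and in Fabric you would configure the trigger on the pipeline itself rather than write this loop by hand.

```python
def detect_new_files(current_files, processed):
    """Return files that have not been ingested yet, in sorted order."""
    return sorted(set(current_files) - set(processed))


def run_trigger(current_files, processed, ingest):
    """Call ingest() once for every new file and remember it as processed."""
    new_files = detect_new_files(current_files, processed)
    for name in new_files:
        ingest(name)
        processed.add(name)
    return new_files


processed = {"orders_2024_01.csv"}
ingested = []
first = run_trigger(
    ["orders_2024_01.csv", "orders_2024_02.csv"], processed, ingested.append
)
second = run_trigger(
    ["orders_2024_01.csv", "orders_2024_02.csv"], processed, ingested.append
)
```

Keeping a record of what has already been processed is what makes the trigger safe to fire repeatedly: the second call finds nothing new and ingests nothing.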

"Microsoft Fabric streamlines automation by enabling users to set up triggers that respond to real-time events or scheduled tasks."

Scheduling workflows further enhances efficiency. You can define when and how often your pipelines should execute, ensuring that tasks align with your business needs. For example, you might schedule a data transformation process to run nightly, ensuring that your datasets are ready for analysis each morning. The platform also supports dependency management, ensuring that workflows execute in the correct order.
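
Dependency management comes down to ordering steps so that each one runs only after the steps it depends on. The sketch below shows that idea with Python's standard `graphlib` module (Python 3.9 or later). The step names are hypothetical, and this is an illustration of the concept rather than a description of how Fabric resolves dependencies internally.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps that must finish before it starts.
dependencies = {
    "ingest_sales": set(),
    "ingest_customers": set(),
    "transform": {"ingest_sales", "ingest_customers"},
    "store": {"transform"},
    "refresh_report": {"store"},
}

# static_order() yields every step after all of its prerequisites.
run_order = list(TopologicalSorter(dependencies).static_order())
```

The two ingestion steps have no dependency on each other, so their relative order is not fixed, but `transform` always comes after both and `refresh_report` always comes last. A circular dependency would raise `graphlib.CycleError` instead of producing an order.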

By automating and orchestrating your pipeline workflows, Microsoft Fabric helps you maintain reliable and efficient End-to-End Data Pipelines. This automation reduces manual effort, minimizes errors, and ensures that your data flows seamlessly from source to insight.

Best Practices for Building Data Pipelines with Microsoft Fabric

Ensuring Scalability and Performance

Building scalable and high-performing data pipelines requires careful planning and execution. With Microsoft Fabric, you can ensure scalability by leveraging its robust architecture and flexible tools. Start by designing your pipelines to handle increasing data volumes without compromising speed or reliability. Use features like real-time and batch processing to adapt to different workloads effectively.

To enhance performance, optimize your data transformations. Avoid unnecessary steps that slow down your workflows. Tools like Power Query and Data Factory allow you to streamline processes, ensuring that your pipelines run efficiently. Regularly monitor the performance of your pipelines and identify bottlenecks. Addressing these issues promptly helps maintain smooth operations.

"Microsoft Fabric empowers users to scale their data workflows seamlessly while maintaining top-notch performance."

Scalability also depends on efficient storage solutions. Utilize OneLake and Delta Lake to store data securely and reliably. These tools support large-scale data processing, ensuring that your pipelines remain efficient as your organization grows. By focusing on scalability and performance, you can build End-to-End Data Pipelines that meet the demands of modern business environments.

Managing Security and Compliance

Data security and compliance are critical when building data pipelines. Microsoft Fabric provides robust security features to protect sensitive information. Start by implementing role-based access controls to ensure that only authorized users can access specific data. This approach minimizes the risk of unauthorized access and enhances data protection.
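
The principle of role-based access control fits in a few lines. The Python sketch below uses a simplified, hypothetical set of roles and actions to show the check itself. The actual roles and permissions are defined and enforced by the platform, not by code like this.

```python
# Hypothetical, simplified mapping of roles to the actions they may perform.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "contributor": {"read", "write"},
    "admin": {"read", "write", "manage_access"},
}


def is_allowed(role, action):
    """Return True only if the role explicitly grants the action."""
    return action in ROLE_PERMISSIONS.get(role, set())


can_view = is_allowed("viewer", "read")
can_edit = is_allowed("viewer", "write")
```

Note the default: an unknown role receives an empty permission set, so anything not explicitly granted is denied.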

Encryption plays a vital role in securing your data. Microsoft Fabric supports encryption both in transit and at rest, ensuring that your data remains safe throughout its lifecycle. Regularly update your security protocols to stay ahead of potential threats. Conduct audits to identify vulnerabilities and address them proactively.

"Embracing Microsoft Fabric is more than just implementing a new tool; it’s about joining a community that thrives on learning, sharing, and innovating within the data space."

Compliance with industry standards is equally important. Microsoft Fabric helps you meet regulatory requirements by providing tools for data governance and auditing. Use these features to track data usage and maintain a clear audit trail. This transparency ensures that your organization adheres to legal and ethical standards.

By prioritizing security and compliance, you not only protect your data but also build trust with stakeholders. A secure and compliant pipeline fosters confidence in your analytics processes and supports better decision-making.

Monitoring and Troubleshooting Pipelines

Effective monitoring is essential for maintaining reliable data pipelines. Microsoft Fabric offers built-in tools to track the performance of your workflows. Use these tools to monitor key metrics like data processing times and error rates. Identifying issues early allows you to address them before they impact your operations.

Set up alerts to notify you of potential problems. For example, you can configure alerts to trigger when data ingestion fails or when processing times exceed acceptable limits. These notifications help you respond quickly and minimize downtime.
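
The alert conditions described above amount to simple checks on each pipeline run. A minimal Python sketch follows. The shape of the `run` dictionary and the 600-second default limit are assumptions made for illustration, not values taken from Fabric.

```python
def check_run(run, max_seconds=600):
    """Return a list of alert messages for one pipeline run."""
    alerts = []
    if run["status"] == "failed":
        alerts.append(f"{run['pipeline']}: run failed")
    if run["duration_seconds"] > max_seconds:
        alerts.append(
            f"{run['pipeline']}: took {run['duration_seconds']}s, "
            f"limit is {max_seconds}s"
        )
    return alerts


slow_failure = {"pipeline": "sales_load", "status": "failed", "duration_seconds": 900}
healthy = {"pipeline": "sales_load", "status": "succeeded", "duration_seconds": 120}

alerts = check_run(slow_failure)
no_alerts = check_run(healthy)
```

A run can raise more than one alert at once, as the failed and slow run does here, while a healthy run produces an empty list and no notification.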

"Microsoft Fabric streamlines the scheduling and orchestration of complex jobs, ensuring consistent and reliable data pipelines."

Troubleshooting becomes easier with detailed logs and diagnostic tools. Analyze these logs to pinpoint the root cause of issues. Whether it’s a failed transformation or a storage error, understanding the problem helps you implement effective solutions. Regularly review your pipelines to identify areas for improvement and optimize their performance.

By monitoring and troubleshooting your pipelines, you ensure that they operate smoothly and deliver consistent results. This proactive approach enhances the reliability of your End-to-End Data Pipelines and supports your organization’s data-driven goals.

Common Challenges and How to Overcome Them

Handling Large Data Volumes

Managing large data volumes can overwhelm your pipelines if not handled effectively. As data grows, processing speed and storage reliability often become bottlenecks. To address this, you should leverage the scalability of Microsoft Fabric. Its architecture supports both real-time and batch processing, ensuring that your pipelines remain efficient regardless of data size.

Delta Lake, integrated within Microsoft Fabric, plays a crucial role here. It ensures data consistency through ACID transactions, even when handling massive datasets. This feature allows you to update, merge, or delete records without risking errors or inconsistencies. Additionally, OneLake provides centralized storage that scales seamlessly with your data needs. By consolidating data into a single repository, you eliminate silos and simplify management.
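
The "all or nothing" part of an ACID transaction can be shown with a toy example. The Python sketch below stages a batch of upserts and deletes on a copy of an in-memory table and returns the new table only if every change succeeds. This is a conceptual illustration with hypothetical names. Delta Lake achieves atomicity through its transaction log, not through copying data like this.

```python
import copy


def merge_atomically(table, changes):
    """Apply all changes to a staged copy; the original is never modified."""
    staged = copy.deepcopy(table)
    for change in changes:
        op, key = change["op"], change["id"]
        if op == "upsert":
            staged[key] = change["row"]
        elif op == "delete":
            if key not in staged:
                raise KeyError(f"cannot delete missing id {key}")
            del staged[key]
        else:
            raise ValueError(f"unknown op {op}")
    return staged  # reached only if every change applied cleanly


table = {1: {"name": "Ada"}, 2: {"name": "Bob"}}

good_batch = [
    {"op": "upsert", "id": 3, "row": {"name": "Cy"}},
    {"op": "delete", "id": 2},
]
updated = merge_atomically(table, good_batch)

bad_batch = [
    {"op": "upsert", "id": 4, "row": {"name": "Di"}},
    {"op": "delete", "id": 99},  # fails: id 99 does not exist
]
try:
    merge_atomically(table, bad_batch)
    failed = False
except KeyError:
    failed = True
```

When the second batch fails halfway through, the partially applied upsert of id 4 is discarded along with the staged copy, so readers of `table` never see a half-finished merge.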

"Delta Lake enhances storage reliability by supporting ACID transactions, ensuring data consistency across all operations."

To optimize performance further, monitor your pipeline regularly. Identify bottlenecks in data ingestion or transformation processes and streamline them using tools like Data Factory. By automating workflows and scheduling tasks during off-peak hours, you can reduce processing delays and improve overall efficiency.

Integrating Legacy Systems

Integrating legacy systems into modern data pipelines often presents compatibility challenges. Older systems may lack the flexibility to connect with advanced platforms like Microsoft Fabric. However, Microsoft Fabric offers a wide range of connectors that bridge this gap. These connectors enable seamless integration with on-premises databases, third-party applications, and even outdated systems.

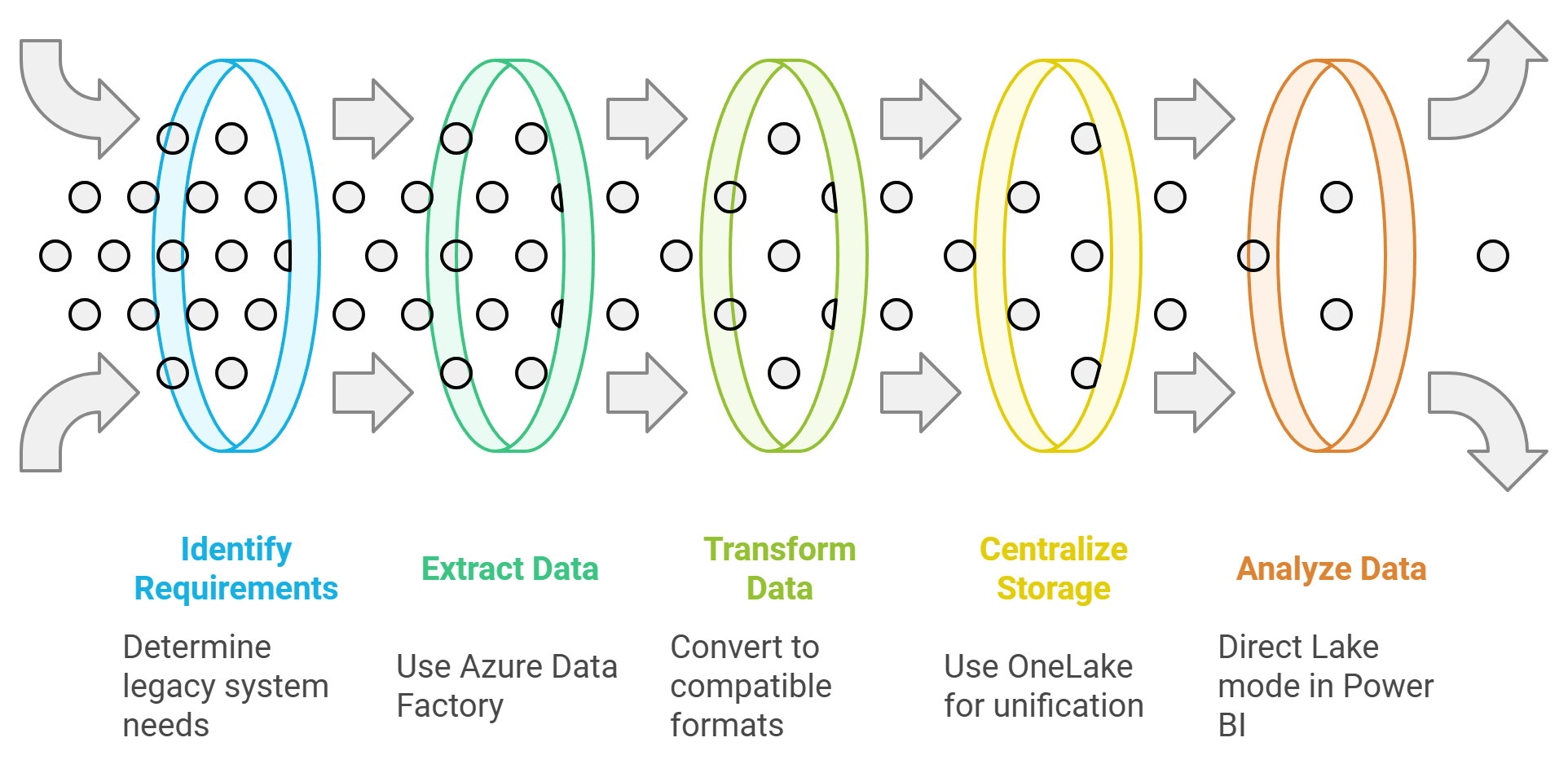

Start by identifying the specific requirements of your legacy systems. Use Data Factory in Microsoft Fabric to create workflows that extract data from these systems and transform it into compatible formats. This tool supports over 100 relational and non-relational databases, ensuring broad compatibility. For example, you can aggregate data from legacy ERP systems and prepare it for analysis in Power BI.

"Microsoft Fabric simplifies integration by providing pre-built connectors that eliminate the need for custom coding."

Another strategy involves leveraging OneLake as a unified storage layer. By centralizing data from legacy systems, you can ensure consistency and accessibility. This approach reduces the complexity of managing multiple storage solutions and enhances collaboration across teams. Additionally, Direct Lake mode in Power BI allows you to analyze data directly from OneLake without duplication, streamlining the process further.

Ensuring Data Quality and Consistency

Data quality and consistency are critical for reliable analytics. Inconsistent or inaccurate data can lead to flawed insights and poor decision-making. To overcome this challenge, you should implement robust data governance practices within Microsoft Fabric.

Power Query provides an intuitive interface for cleaning and preparing data. Use it to remove duplicates, standardize formats, and apply business rules. For instance, you can ensure that date formats remain consistent across datasets, reducing errors during analysis. This tool simplifies data preparation, even for users with limited technical expertise.

"Power Query simplifies data preparation by offering intuitive tools that streamline the transformation process."

For more advanced needs, rely on Data Factory to automate data validation workflows. This tool allows you to set up rules that check for missing values, outliers, or inconsistencies during the transformation process. Automation minimizes manual effort and ensures that your pipelines deliver high-quality data consistently.
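
A validation rule of this kind can be sketched in plain Python. The example below flags missing values and treats any value more than ten times the median as an outlier. Both the field name and the ten-times threshold are assumptions chosen for illustration, and a real workflow would tune the rule to the data.

```python
from statistics import median


def validate(rows, field, max_ratio=10.0):
    """Return (row_index, problem) pairs for missing values and outliers."""
    present = [r[field] for r in rows if r.get(field) is not None]
    typical = median(present) if present else None
    issues = []
    for i, r in enumerate(rows):
        value = r.get(field)
        if value is None:
            issues.append((i, "missing"))
        elif typical and value > typical * max_ratio:
            issues.append((i, "outlier"))
    return issues


rows = [
    {"amount": 10},
    {"amount": None},
    {"amount": 12},
    {"amount": 5000},
    {"amount": 11},
]
issues = validate(rows, "amount")
```

The median is used instead of the mean because a single extreme value, such as the 5000 here, would drag the mean upward and could hide the very outlier the rule is meant to catch.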

Finally, centralize your data in OneLake to maintain a single source of truth. By consolidating data from diverse sources, you eliminate discrepancies and enhance accuracy. Features like versioning and metadata management further support data quality by providing clear audit trails and historical records.

By addressing these common challenges proactively, you can build robust and reliable data pipelines with Microsoft Fabric. These strategies ensure that your workflows remain efficient, scalable, and aligned with your business goals.

Use Cases and Real-World Applications

Business Intelligence and Reporting

Microsoft Fabric empowers you to transform raw data into actionable insights, making it a cornerstone for business intelligence and reporting. By consolidating data from multiple sources into a unified platform, you can create comprehensive reports that provide a clear picture of your organization's performance. Tools like Power BI allow you to design interactive dashboards that highlight key metrics such as revenue, customer trends, and operational efficiency.

For example, you can use Microsoft Fabric to analyze sales data across regions. This analysis helps you identify high-performing areas and pinpoint opportunities for improvement. The platform's ability to handle large datasets ensures that your reports remain accurate and reliable, even as your business grows.

"Companies using Microsoft Fabric have reported a staggering 379% return on investment, showcasing its potential to drive impactful business decisions."

By leveraging Microsoft Fabric for business intelligence, you can make data-driven decisions with confidence. The platform's integration of AI-powered analytics further enhances your ability to uncover trends and predict future outcomes, giving you a competitive edge in your industry.

Real-Time Analytics for Decision-Making

In today's fast-paced environment, real-time analytics is essential for staying ahead. Microsoft Fabric enables you to process and analyze data as it arrives, providing instant insights that support quick decision-making. This capability is particularly valuable for industries like retail, finance, and healthcare, where timely information can make a significant difference.

Imagine monitoring customer behavior during a live sales event. With Microsoft Fabric, you can track purchases, identify popular products, and adjust your strategies in real time. The platform's ability to handle streaming data ensures that you never miss critical updates, allowing you to respond proactively to changing circumstances.
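
The live sales scenario boils down to updating running totals as each event arrives. The Python sketch below models that with a counter. The `PopularProducts` class and the event fields are hypothetical, and a real deployment would read events from a streaming source rather than from a list.

```python
from collections import Counter


class PopularProducts:
    """Keeps running purchase totals that are updated one event at a time."""

    def __init__(self):
        self.counts = Counter()

    def record(self, event):
        # Each event adds its quantity to the product's running total.
        self.counts[event["product"]] += event["quantity"]

    def top(self, n=3):
        return self.counts.most_common(n)


tracker = PopularProducts()
for event in [
    {"product": "laptop", "quantity": 2},
    {"product": "mouse", "quantity": 5},
    {"product": "laptop", "quantity": 4},
    {"product": "keyboard", "quantity": 1},
]:
    tracker.record(event)

leaders = tracker.top(2)
```

Because the totals are maintained incrementally, the current leaders are available at any moment during the event without reprocessing earlier purchases.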

"Microsoft Fabric's biggest advantage lies in its ability to integrate and manage vast amounts of data from numerous sources while empowering business leaders to make informed, strategic decisions."

Real-time analytics also enhances operational efficiency. For instance, you can use it to monitor supply chain activities, detect bottlenecks, and optimize resource allocation. By acting on real-time insights, you can improve productivity and deliver better outcomes for your organization.

Data-Driven Automation in Business Processes

Automation is a game-changer for modern businesses, and Microsoft Fabric excels in enabling data-driven automation. By integrating tools for data ingestion, transformation, and analysis, the platform allows you to automate repetitive tasks and streamline complex workflows. This automation reduces manual effort, minimizes errors, and enhances overall efficiency.

For example, you can set up automated workflows to process customer feedback. Microsoft Fabric captures data from surveys, transforms it into structured formats, and generates reports highlighting key insights. This process not only saves time but also ensures that you can act quickly on customer needs.

"Partnering with Launch Consulting for Microsoft Fabric implementation helped address challenges associated with data tool sprawl by establishing a cohesive and centralized data platform."

Data-driven automation also supports innovation. By freeing up resources, you can focus on strategic initiatives that drive growth and competitiveness. Whether it's automating financial reporting or optimizing marketing campaigns, Microsoft Fabric provides the tools you need to succeed in a data-driven world.

Future of End-to-End Data Pipelines with Microsoft Fabric

Emerging Trends in Data Engineering

The field of data engineering is evolving rapidly, driven by the increasing demand for efficient and scalable data solutions. As a professional, you must stay ahead by understanding the trends shaping the future of data pipelines. One major trend is the shift toward unified platforms that integrate multiple data processes. Tools like Microsoft Fabric lead this transformation by combining data engineering, analytics, and machine learning into a single ecosystem. This integration simplifies workflows and reduces the need for multiple tools.

Another trend is the growing reliance on AI and machine learning for automating data tasks. These technologies enable you to predict outcomes, detect anomalies, and optimize workflows. For example, Microsoft Fabric incorporates AI-powered analytics, allowing you to uncover patterns and gain actionable insights without manual intervention. This capability enhances decision-making and accelerates the data-to-insight process.

Scalability is also becoming a critical focus. Modern businesses generate massive amounts of data, and platforms must adapt to handle these volumes efficiently. Microsoft Fabric addresses this challenge by automatically scaling resources based on workload demands. Whether you are processing real-time data streams or managing batch operations, the platform ensures consistent performance.

Finally, data governance and security are gaining prominence. Organizations must comply with strict regulations while maintaining data integrity. Microsoft Fabric excels in this area by offering robust governance tools and encryption features. These capabilities help you manage data responsibly and build trust with stakeholders.

"Microsoft Fabric integrates and manages vast amounts of data from numerous sources, empowering business leaders to make informed decisions."

By embracing these trends, you can build future-ready data pipelines that align with the evolving needs of your organization.

Innovations in Microsoft Fabric for Data Workflows

Microsoft Fabric continues to innovate, setting new benchmarks for data pipeline management. One of its standout features is OneLake, a centralized storage solution that eliminates data silos. With OneLake, you can consolidate data from diverse sources into a single repository, simplifying access and enhancing collaboration. This innovation fosters seamless workflows and ensures that your teams work with consistent data.

The platform also builds on Delta Lake, an open-source table format that supports ACID transactions for reliable data storage. This feature ensures data consistency even during updates or modifications. For instance, you can merge or delete records across datasets without risking errors. Delta Lake’s fault-tolerance capabilities make it ideal for managing large-scale data operations.

Microsoft Fabric’s integration of AI and machine learning takes data workflows to the next level. You can leverage these tools to automate complex tasks, such as applying predictive models or detecting anomalies. These innovations reduce manual effort and improve the accuracy of your insights. For example, AI-powered analytics can forecast trends, helping you make proactive decisions.

Another key innovation is the platform’s ability to handle real-time and batch processing seamlessly. Whether you need immediate insights or scheduled data updates, Microsoft Fabric adapts to your requirements. This flexibility ensures that your pipelines remain efficient in dynamic business environments.

"Microsoft Fabric consolidates data processes into a single system, addressing complexities in big data management and fostering streamlined workflows."

Collaboration is also a priority. Microsoft Fabric bridges the gap between data engineers, analysts, and scientists, enabling them to work together within a unified platform. This integration enhances productivity and ensures that everyone contributes to the data lifecycle effectively.

By adopting these innovations, you can streamline your data workflows and unlock the full potential of your data. Microsoft Fabric not only meets today’s challenges but also prepares you for the future of data engineering.

Building End-to-End Data Pipelines with Microsoft Fabric empowers you to simplify and optimize your data workflows. By integrating tools for ingestion, transformation, storage, analysis, and automation, the platform ensures seamless operations and scalability. Its unified approach eliminates the need for multiple tools, saving time and reducing complexity. Whether you aim to enhance decision-making or drive innovation, Microsoft Fabric provides the foundation to unlock the full potential of your data. Start exploring its capabilities today to transform your analytics processes and achieve impactful results.
