Navigating the New EU AI Act with Michael Charles Borrelli

The EU AI Act marks a significant shift in how AI technologies are regulated, focusing on risk assessment and data governance. Understanding this act is crucial for businesses to ensure compliance and safe deployment of AI systems.

Did you ever stop to consider how artificial intelligence is not just a futuristic tool that sits in the realm of tech giants but is integrated into everyday apps that we use? As the world embraces AI technology, Europe has taken the lead in regulating it through the newly implemented EU AI Act. In this blog post, I’ll take you on a journey that unpacks the critical aspects of this landmark legislation, ensuring you’re informed about its implications for both personal and professional realms.

Understanding the EU AI Act

Overview of the EU AI Act's Goals and Structure

The EU AI Act is a significant step in regulating artificial intelligence across Europe. Officially in force since August 1, 2024, it is widely described as the world's first comprehensive law governing AI.

But what does this mean for you? First off, the Act focuses on several key goals:

Establish a human-centric framework: AI systems should support human activities.

Enhance safety: Protecting users from harmful AI applications.

Encourage innovation: Allowing businesses to thrive while adhering to safety standards.

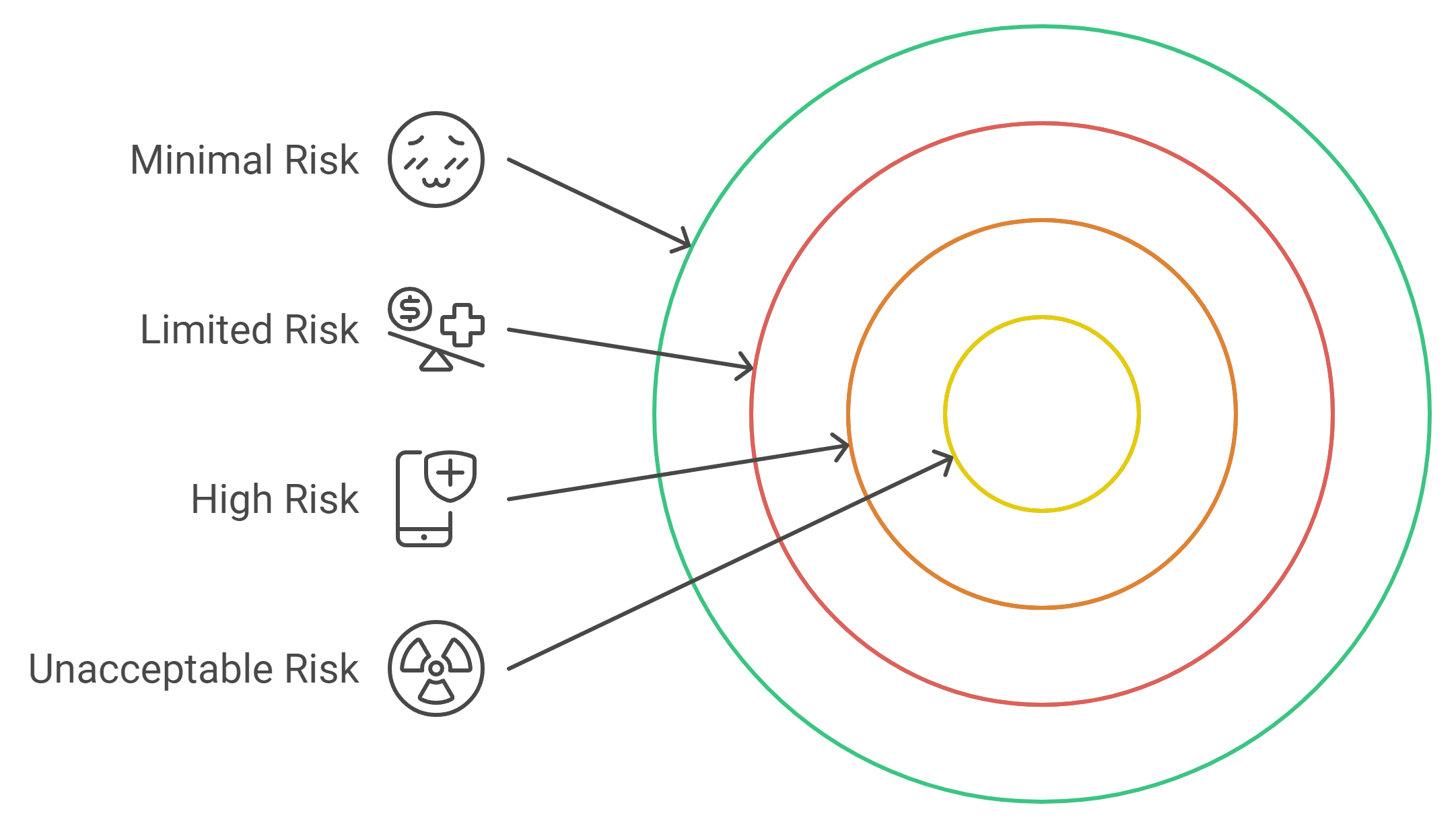

The Act employs a risk-based approach, categorizing AI systems into four risk levels: unacceptable, high, limited, and minimal. This structure allows for tailored compliance measures based on the potential risks each system presents.

The Significance of Its Risk-Based Approach

This risk-based approach isn't just a bureaucratic formality—it's crucial for effective regulation. Think of it like driving a car: the risks change depending on your speed and the road conditions. Similarly, AI systems come with varying levels of risk.

High-risk systems, for instance, may be used in public services such as healthcare. They require strict compliance protocols to safeguard personal data. Borrelli mentions, “Data is the new gasoline.” Just as gasoline fuels engines, data drives AI. So, understanding what type of data you’re using can determine how you align with compliance expectations.

How It Impacts Various Sectors

The EU AI Act isn't just relevant to tech companies; it affects diverse sectors. From healthcare to finance, the implications are broad:

Healthcare: Implementing AI for diagnosis must adhere to high standards to ensure patient safety.

Finance: AI used in credit evaluation can’t compromise consumer rights.

Retail: AI-driven marketing strategies must also respect data protection laws.

Each of these sectors must adjust to comply with the newly established regulations. Organizations deploying high-risk systems may need to conduct fundamental rights impact assessments to evaluate how those systems affect the people subject to them.

The Role of Compliance in AI Development

Compliance is not a mere afterthought; it’s integral to AI development. Companies, big or small, must understand their responsibilities. Noncompliance can hit hard: for the most serious violations, fines can reach 35 million euros or 7% of global annual turnover, whichever is higher.

Are you ready for the challenge? Conducting an inventory of your AI systems is the first step. Recognizing risk levels will help your organization maintain compliance and promote ethical AI use.

As the landscape continues to evolve, staying informed about the EU AI Act is vital for successful AI deployment. Embrace these changes—they could be your key to responsible innovation.

Risk Classification: What You Need to Know

Understanding risk classification is crucial for anyone involved in AI governance. Let's break down the four risk levels outlined in the EU AI Act: unacceptable, high, limited, and minimal. These categories help you grasp how AI systems are assessed based on the potential risk they present.

1. The Four Risk Levels

Unacceptable Risk: This includes systems that pose severe threats to human rights. For example, social scoring systems and certain biometric identification tools fall into this category. These can deeply affect people's safety and dignity.

High Risk: AI applications used in sensitive areas, like healthcare or credit evaluation, are classified as high-risk. They require stringent compliance measures to protect users.

Limited Risk: AI systems here pose manageable risks and mainly carry transparency obligations. An example is a chatbot that handles general inquiries, which should make clear to users that they are talking to an AI.

Minimal Risk: These systems have low chances of causing harm. Think of recommendation systems for products or music: they suggest but don’t impose critical judgments.

2. Examples of AI Systems

What does this mean in real-world scenarios? Consider a healthcare AI that assesses patient conditions. This tool is high-risk because it directly impacts health outcomes. On the other hand, a music recommendation algorithm is categorized as minimal risk. Its impact is low and manageable.
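To make the tiers concrete, here is a minimal Python sketch that records the examples from this post against a risk level. The use-case labels and the `lookup_tier` helper are hypothetical names chosen for illustration; classifying a real system depends on its intended purpose and needs a proper legal review.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    """The four risk levels named in the post, most to least severe."""
    UNACCEPTABLE = 4
    HIGH = 3
    LIMITED = 2
    MINIMAL = 1


# Illustrative mapping built only from examples mentioned in this post.
# The keys are made-up labels, not terms defined by the Act.
EXAMPLE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "patient_condition_assessment": RiskTier.HIGH,
    "credit_evaluation": RiskTier.HIGH,
    "music_recommendation": RiskTier.MINIMAL,
}


def lookup_tier(use_case: str) -> Optional[RiskTier]:
    """Return the example tier for a use case, or None if it is not listed."""
    return EXAMPLE_TIERS.get(use_case)
```

Returning `None` for anything unlisted is deliberate: an unknown system should prompt an assessment rather than default silently to a low tier.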

3. Consequences of Non-Compliance

The stakes are high if your system falls short of these regulations. Just like not following traffic signs leads to accidents, failing to comply with AI regulations can result in significant penalties. For the most serious violations, fines can reach 35 million euros or 7% of your global annual turnover, whichever is higher. Imagine facing such hefty fines. It's a wake-up call to ensure compliance!
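For a feel of the numbers, the ceiling can be worked out in a few lines of Python. This is a rough sketch, assuming the higher of the two figures applies (which is how the Act frames the ceiling for the most serious infringements); `max_fine_eur` is a hypothetical helper, and actual penalties are set case by case.

```python
def max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Upper bound on a fine: the higher of EUR 35 million or 7% of turnover.

    Assumption for this sketch: the 'whichever is higher' rule applies.
    Integer arithmetic avoids floating-point rounding on large amounts.
    """
    fixed_cap = 35_000_000
    turnover_cap = global_annual_turnover_eur * 7 // 100
    return max(fixed_cap, turnover_cap)


# A company with EUR 1 billion in turnover: 7% is EUR 70 million.
large = max_fine_eur(1_000_000_000)

# A company with EUR 100 million in turnover: 7% is only EUR 7 million,
# so the fixed EUR 35 million figure is the ceiling.
small = max_fine_eur(100_000_000)
```

The two figures meet at 500 million euros of turnover. Below that, the fixed amount sets the ceiling; above it, the percentage does.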

4. Influence on Business Strategy

How does risk classification impact your business strategy? Understanding these classifications helps you prioritize compliance measures effectively. It also facilitates better resource allocation. A high-risk AI system will demand more rigorous oversight compared to a minimal risk one. This knowledge can guide your organization in designing responsible AI deployments.
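One way to turn this into practice is to keep a simple inventory and sort it so the riskiest systems get attention first. The sketch below assumes a hypothetical `AISystem` record and made-up system names; the priority numbers are an illustrative ordering, not values taken from the Act.

```python
from dataclasses import dataclass

# Higher number = more oversight needed (illustrative ordering only).
OVERSIGHT_PRIORITY = {"unacceptable": 3, "high": 2, "limited": 1, "minimal": 0}


@dataclass
class AISystem:
    name: str
    risk_level: str  # one of the keys in OVERSIGHT_PRIORITY


def prioritize(systems: list) -> list:
    """Return systems ordered from most to least oversight required."""
    return sorted(
        systems,
        key=lambda s: OVERSIGHT_PRIORITY[s.risk_level],
        reverse=True,
    )


inventory = [
    AISystem("music recommender", "minimal"),
    AISystem("patient triage model", "high"),
    AISystem("credit scoring model", "high"),
]

for system in prioritize(inventory):
    print(f"{system.risk_level:>8}  {system.name}")
```

Even a spreadsheet can play this role. What matters is that every system has an owner and a recorded risk level you can sort and review.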

In this evolving landscape, knowing where your AI systems stand in terms of risk can shape not just compliance efforts but also innovative strategies. Are you ready to reassess your practices?

Data Governance: The Heart of AI Compliance

Defining Data Governance in the Context of AI

Have you ever thought about what keeps your AI systems reliable? That's where data governance comes in. It’s like a set of rules that dictate how data is handled and managed. In the world of AI, good data governance ensures that the right data is available at the right time and in the right format. This can include setting up policies for data quality, security, and compliance. The importance of this cannot be overstated, especially as regulations like the EU AI Act come into play.

The Importance of Data Quality

Imagine trying to fill a car with the wrong type of fuel. It just won't run properly, right? Similarly, data quality is crucial for the success of AI systems. Poor-quality data can lead to biased results, inefficiencies, and a lack of trust from users. How can you ensure your data is trustworthy? Focus on:

Accuracy: Is your data correct and relevant?

Consistency: Does the data match across various systems?

Completeness: Are there missing pieces that could affect outcomes?

When data is of high quality, the AI systems built around it can be more effective and trustworthy.
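Two of these checks, completeness and consistency, are easy to automate. Here is a minimal Python sketch, assuming records are plain dictionaries; the function names and sample fields are hypothetical. Accuracy is left out because it requires comparing against a trusted source of truth.

```python
def completeness(records: list, required_fields: list) -> float:
    """Share of records that have a non-empty value for every required field."""
    if not records:
        return 0.0
    complete = sum(
        all(record.get(field) not in (None, "") for field in required_fields)
        for record in records
    )
    return complete / len(records)


def inconsistent_ids(system_a: dict, system_b: dict, field: str) -> list:
    """IDs present in both systems whose value for `field` differs."""
    shared_ids = system_a.keys() & system_b.keys()
    return sorted(
        record_id
        for record_id in shared_ids
        if system_a[record_id].get(field) != system_b[record_id].get(field)
    )


# Made-up sample data for illustration.
patients = [{"id": 1, "age": 30}, {"id": 2, "age": None}]
crm = {"p1": {"dob": "1990-01-01"}, "p2": {"dob": "1985-05-05"}}
billing = {"p1": {"dob": "1990-01-01"}, "p2": {"dob": "1985-05-06"}}

share_complete = completeness(patients, ["id", "age"])
mismatched = inconsistent_ids(crm, billing, "dob")
```

Running checks like these on a schedule gives you a trend line, which is often more useful than a single pass/fail result.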

Strategies for Effective Data Leadership

So, how can you improve your organization’s data leadership? Here are some strategies:

Establish Clear Policies: Document the rules and processes governing data.

Invest in Training: Your team should understand these policies and the importance of compliance.

Regular Audits: Consistent reviews will highlight any issues with data governance.

As noted by Michael Borrelli from AI and Partners, organizations need a robust control framework to navigate the challenges posed by regulations like the EU AI Act. You might need to conduct impact assessments for high-risk AI systems, much like the data protection impact assessments required under GDPR.

Case Studies Highlighting Successful Data Governance

Consider organizations that have excelled in data governance. For instance, a healthcare provider may have implemented a stringent data quality check and monitoring process. The result? Improved patient care and regulatory compliance. Another company in fintech might showcase how they structured their data management approach to ensure transparency and trust. These examples prove that effective governance can lead to significant benefits.

In the evolving landscape of AI, where compliance is key, prioritizing data governance is not just smart; it's necessary. So, start reevaluating your strategies today. After all, if data is "the new gasoline," then effective governance is the engine. Make sure yours runs smoothly!

The Future of AI Regulation in Europe

Understanding the Compliance Timeline

The EU AI Act is a significant milestone in artificial intelligence regulation. You might be wondering, what does the timeline for compliance look like? This legislation officially came into force on August 1, 2024. Its obligations phase in over time: the bans on prohibited practices apply from February 2, 2025, and most remaining requirements apply from August 2, 2026. This gives many businesses roughly a two-year window to demonstrate adherence to the Act's requirements.

Immediate action is required for systems categorized as prohibited.

High-risk systems need a fundamental rights impact assessment.

Understanding this timeline is crucial. It gives you a clear view of when you need to implement changes and adapt your strategies. Failure to comply may result in hefty fines, possibly reaching up to 7% of your company’s global annual turnover. That's a big risk!
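If you want to track where you stand, a small date check can help. The sketch below is illustrative only: the dates follow the Regulation's staged application schedule (Article 113), the labels are simplified summaries, and `milestones_reached` is a hypothetical helper.

```python
from datetime import date

# Key dates from the Act's staged application schedule (simplified labels).
MILESTONES = [
    (date(2024, 8, 1), "Act enters into force"),
    (date(2025, 2, 2), "Prohibitions on unacceptable-risk practices apply"),
    (date(2025, 8, 2), "Obligations for general-purpose AI models apply"),
    (date(2026, 8, 2), "Most remaining obligations apply"),
]


def milestones_reached(today: date) -> list:
    """Return the labels of all milestones on or before the given date."""
    return [label for when, label in MILESTONES if when <= today]


for label in milestones_reached(date.today()):
    print(label)
```

Some obligations for high-risk systems embedded in regulated products start later still, so treat this list as a starting point rather than a complete calendar.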

Long-Term Implications for Businesses

The long-term implications of the EU AI Act are profound. It’s not just about compliance; it’s about transforming how your organization approaches AI. Businesses will need to invest in robust data governance frameworks. This would entail a culture shift to prioritize ethical considerations alongside profitability.

Consider this: If data is "the new gasoline," then effective data leadership is akin to having a reliable engine. It powers your business while minimizing the risks that come with AI technology. As mentioned by Michael Borrelli, organizations must conduct an inventory of AI systems and understand their risk levels. This proactive stance can help avert compliance failures.

Ongoing Education and Adaptation

One thing stands out in the discussion: the necessity for ongoing education and adaptation. AI and the regulations surrounding it are ever-evolving. You can’t afford to become complacent. Continuous learning about these regulations is vital to keep your organization compliant.

Consider how often technology changes. Every advancement brings new challenges, right? You must equip your teams with the knowledge to navigate these waters. Regular training sessions can make a significant difference.

Future Predictions in AI Governance

Looking ahead, what might AI governance look like? Predictions suggest tighter regulations may emerge globally as countries observe the EU's actions. The emphasis on human-centric and risk-based approaches could become the norm. Expect to see more countries developing similar compliance frameworks.

Moreover, as AI applications grow, so will scrutiny from regulatory bodies. The EU AI Office has been established to ensure trustworthy AI deployment. You can look forward to a landscape where transparency and ethical considerations reign supreme.

"If you’re not thinking about compliance now, you might be too late," said Michael Charles Borrelli during the discussion.

As you think about the implications of these regulations, remember: they may drastically change how technology interacts with everyday life. Keeping informed and adapting is your best strategy for success in this new era.

Conclusion: Embracing Compliance as Opportunity

As we wrap up our exploration of the EU AI Act, it becomes clear how compliance is more than just a necessity; it can actually drive both innovation and trust in AI systems. With regulations now set in stone, you have an opportunity to rethink how compliance can catalyze growth.

How Compliance Fuels Innovation

Think about compliance not as a hurdle, but as a springboard for creativity. By adhering to the regulations, you can build systems that are reliable and ethical. Trust is vital in today’s tech landscape. Consumers are becoming increasingly discerning. They want to know their data is safe and their rights are protected. When you comply, you're not just following the rules; you're establishing a reputation for trustworthiness.

Aligning Business Goals with AI Regulations

How can you align your business goals with AI regulations? Start by thoroughly understanding the specifics of the EU AI Act. Take a closer look at the four risk classifications (unacceptable, high, limited, and minimal) outlined by Michael Borrelli. Draft clear strategies that incorporate these risk levels into your business objectives. By doing so, your organization will be better prepared to embrace compliance as part of its core mission.

Proactive Engagement is Key

Final thoughts: it's crucial to engage proactively with the EU AI Act. Ignoring compliance can lead to dire consequences, including significant fines of up to 7% of global turnover, as Borrelli pointed out. Implementing robust data governance protocols and conducting regular audits will help your organization stay ahead of the game.

Take Action Now

Here’s a call to action for all businesses: review your AI systems today. Conduct an inventory to assess which categories your systems fall into according to the EU AI Act. Consider this an invitation to reevaluate and fortify your compliance strategies.

As you dive deeper into this realm, remember that effective data governance is your ally in navigating compliance challenges. Stay informed, stay prepared, and let compliance be an opportunity for innovation and trust in your AI initiatives.