How Generative AI Creates Balanced and Unbiased Datasets

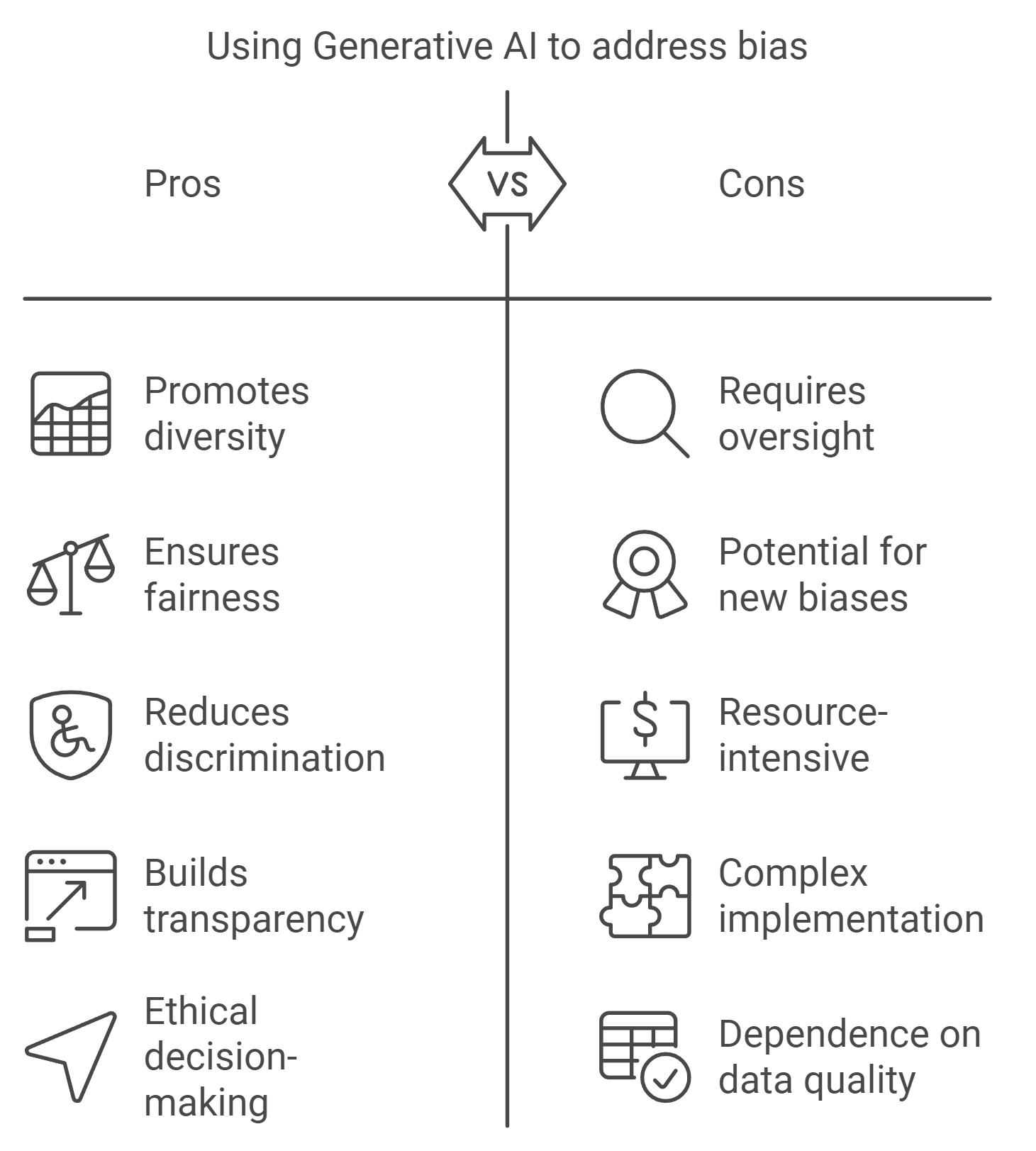

You’ve probably noticed how AI systems sometimes make decisions that feel unfair or biased. This happens because the data they learn from often reflects real-world discrimination or underrepresentation. Generative AI can help tackle this issue by creating datasets that promote diversity and inclusion. It doesn’t just replicate existing patterns; it can generate new data for groups that are missing or thinly represented, giving models a more balanced picture to learn from. With proper oversight, data analytics and generative AI can help reduce discrimination and build transparency into AI systems. By addressing bias directly, this approach helps you turn complex data into actionable insights while fostering ethical decision-making.

Key Takeaways

Generative AI can create balanced datasets that promote diversity and inclusion, helping to reduce bias in AI systems.

Understanding different types of bias—historical, selection, measurement, and algorithmic—is crucial for developing fair AI models.

Conducting comprehensive bias audits on existing datasets is essential to identify gaps and ensure fair representation before generating new data.

Utilizing tools like GANs and VAEs can enhance data diversity through synthetic data generation and data augmentation, improving AI model accuracy.

Regular validation and testing of generated data are necessary to maintain fairness and prevent the introduction of new biases.

Collaboration between AI developers and domain experts is vital for creating ethical AI systems that reflect real-world diversity.

Adopting ethical guidelines and engaging in public discourse about AI practices can help ensure that generative AI contributes to a more equitable future.

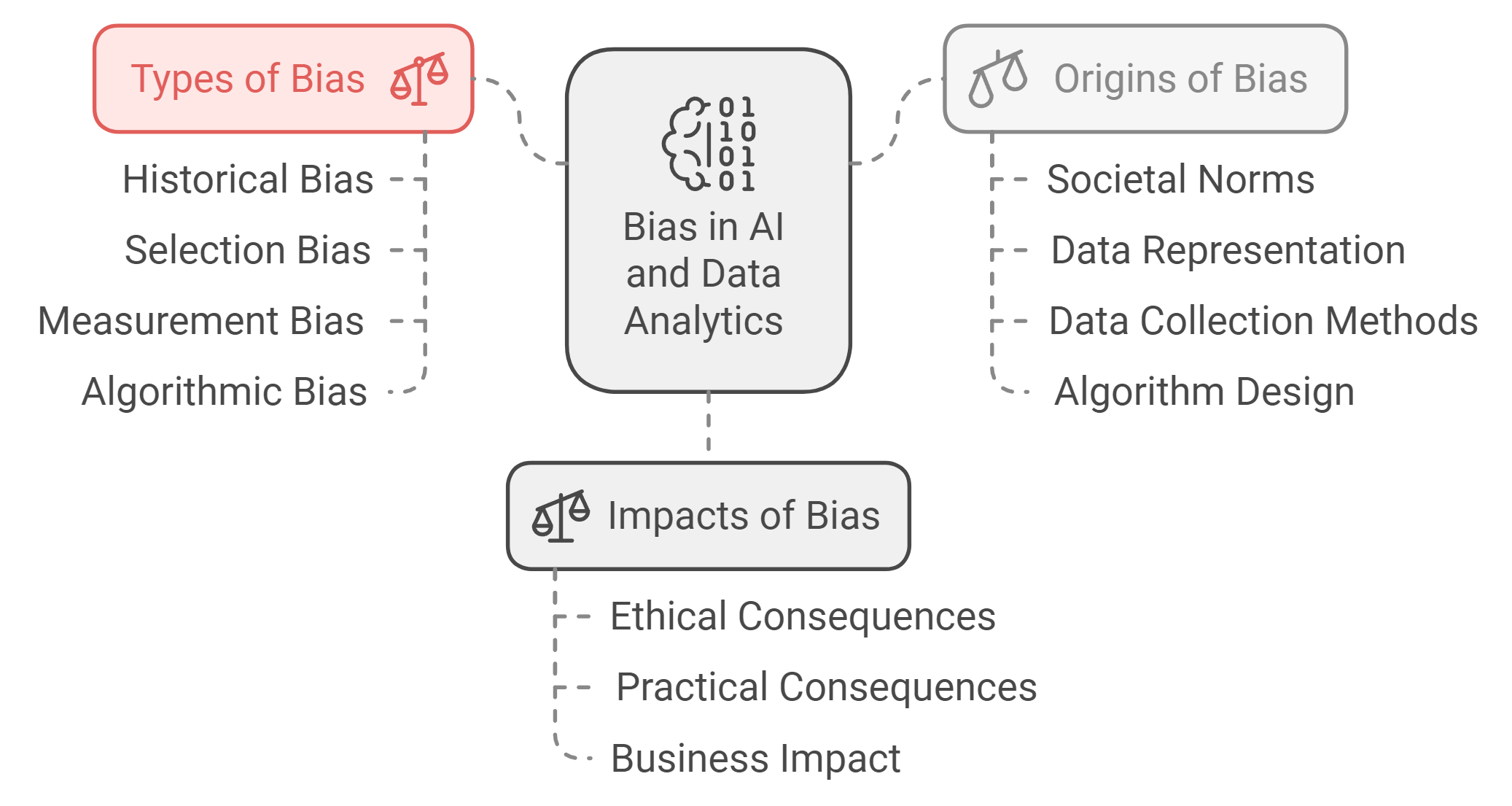

Defining Bias in Data Analytics

Understanding bias in data analytics is crucial for creating fair and effective AI systems. Bias can distort results, leading to unfair outcomes that harm individuals and communities. Let’s break down what bias in AI means, where it comes from, and how it impacts decision-making.

What is Bias in AI and Data Analytics

Bias in AI refers to systematic errors or unfairness that occur when algorithms produce results favoring certain groups over others. This happens because AI learns patterns from historical data, which often reflects societal inequalities. For example, ProPublica’s 2016 analysis of the COMPAS criminal risk assessment tool found that Black defendants who did not go on to reoffend were almost twice as likely as white defendants to be labeled high-risk. This kind of algorithmic bias perpetuates existing discrimination instead of solving it.

AI systems don’t think for themselves; they rely on the data you provide. If the data contains skewed patterns, the AI will replicate them. This is why addressing bias in datasets is essential. Without intervention, AI can reinforce stereotypes, such as favoring male candidates in hiring processes or misidentifying dark-skinned women in facial recognition software.

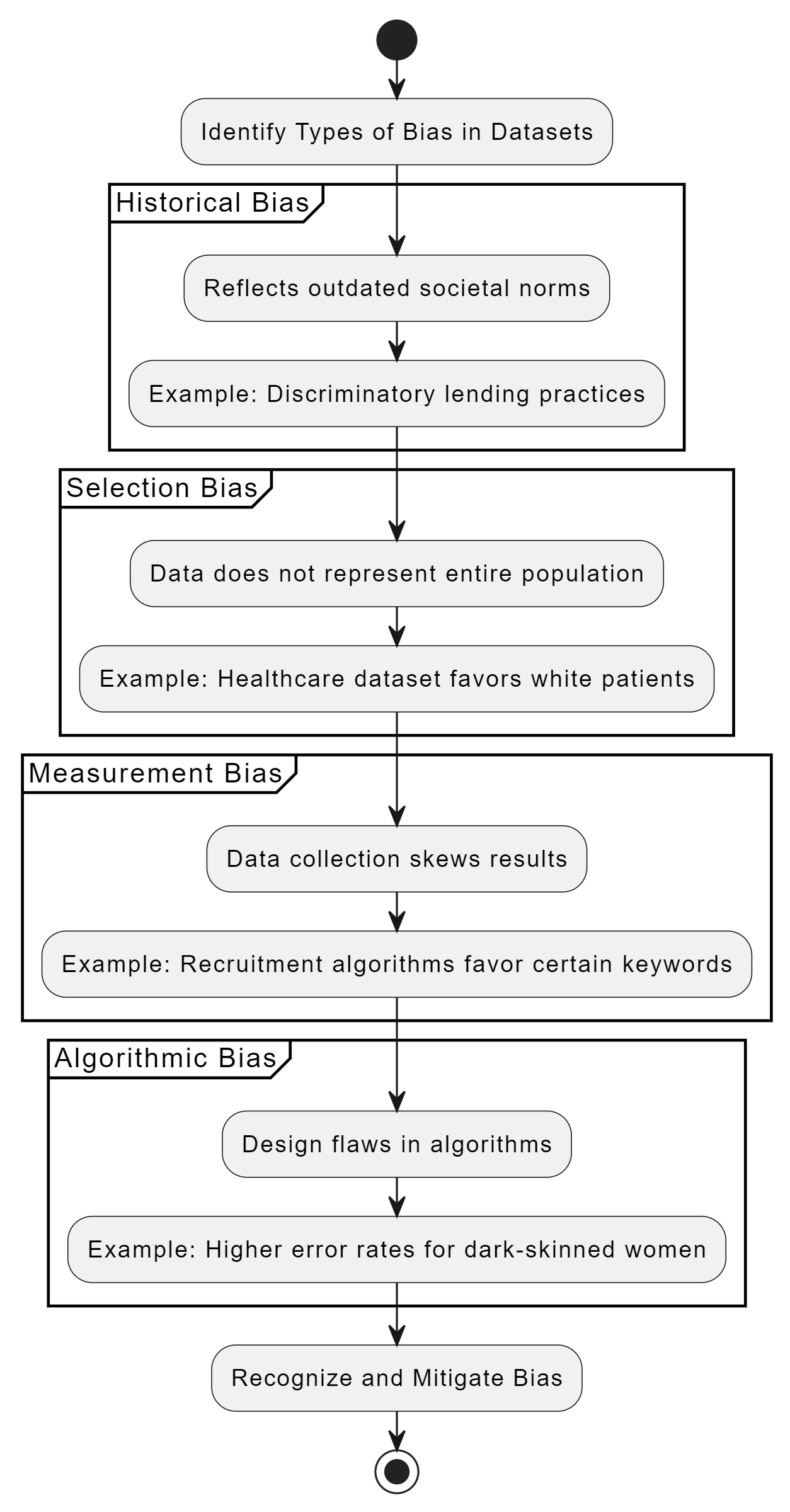

Types of Bias in Datasets and Their Origins

Bias in datasets comes in many forms, each with unique origins. Here are some common types of AI bias:

Historical Bias: This occurs when data reflects outdated societal norms or prejudices. For instance, lending algorithms have discriminated against racial groups because they were trained on historical lending practices that excluded marginalized communities.

Selection Bias: This happens when the data used to train AI doesn’t represent the entire population. For example, if a healthcare dataset includes mostly white patients, the AI is likely to perform better for them than for Black patients, whose outcomes it has seen far less often.

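Measurement Bias: This arises when the way data is collected or scored skews the results, typically because the recorded value is an uneven proxy for what you actually want to measure. In recruitment, for example, scoring résumés by certain keywords can favor one group over another, leading to biased hiring decisions.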

Algorithmic Bias: This type of bias stems from the design of the algorithm itself. Even with balanced data, poorly designed algorithms can still produce unfair outcomes. For instance, an independent audit reported that Amazon’s facial recognition software showed higher error rates for dark-skinned women, a gap that can stem from model design and evaluation choices as well as from the training data.

Recognizing these types of AI bias helps you identify the root causes and take steps to mitigate them. By understanding the origins, you can ensure your data represents diverse perspectives and avoids perpetuating inequality.

The Impact of Bias on AI Models and Decision-Making

Bias in AI models can have serious consequences, both ethically and practically. When AI systems make decisions based on biased data, they can harm marginalized groups and erode trust in technology. For example, biased lending algorithms have denied loans to racial minorities, while healthcare AI has favored white patients over black patients, leading to unequal treatment.

The business impact of bias is equally significant. Biased AI can damage your brand’s reputation and lead to costly legal challenges. Customers expect fairness and transparency, and failing to meet these expectations can result in lost trust and revenue. Moreover, biased AI models often produce inaccurate predictions, reducing the effectiveness of automated insights and decision-making processes.

To address these issues, you need to combine AI with human feedback. Human oversight ensures that ethical considerations guide the development and deployment of AI systems. By prioritizing fairness and inclusivity, you can create AI solutions that benefit everyone.

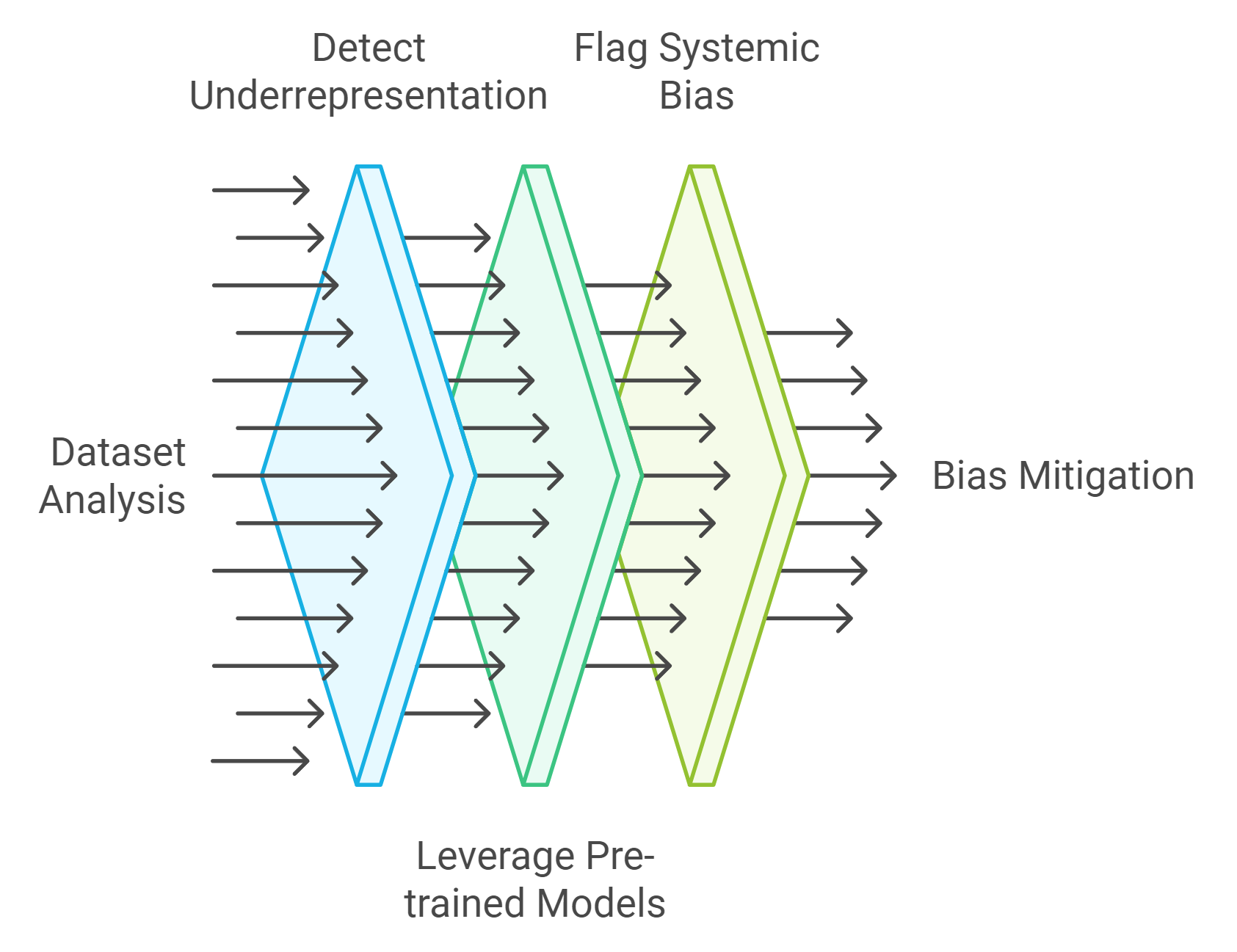

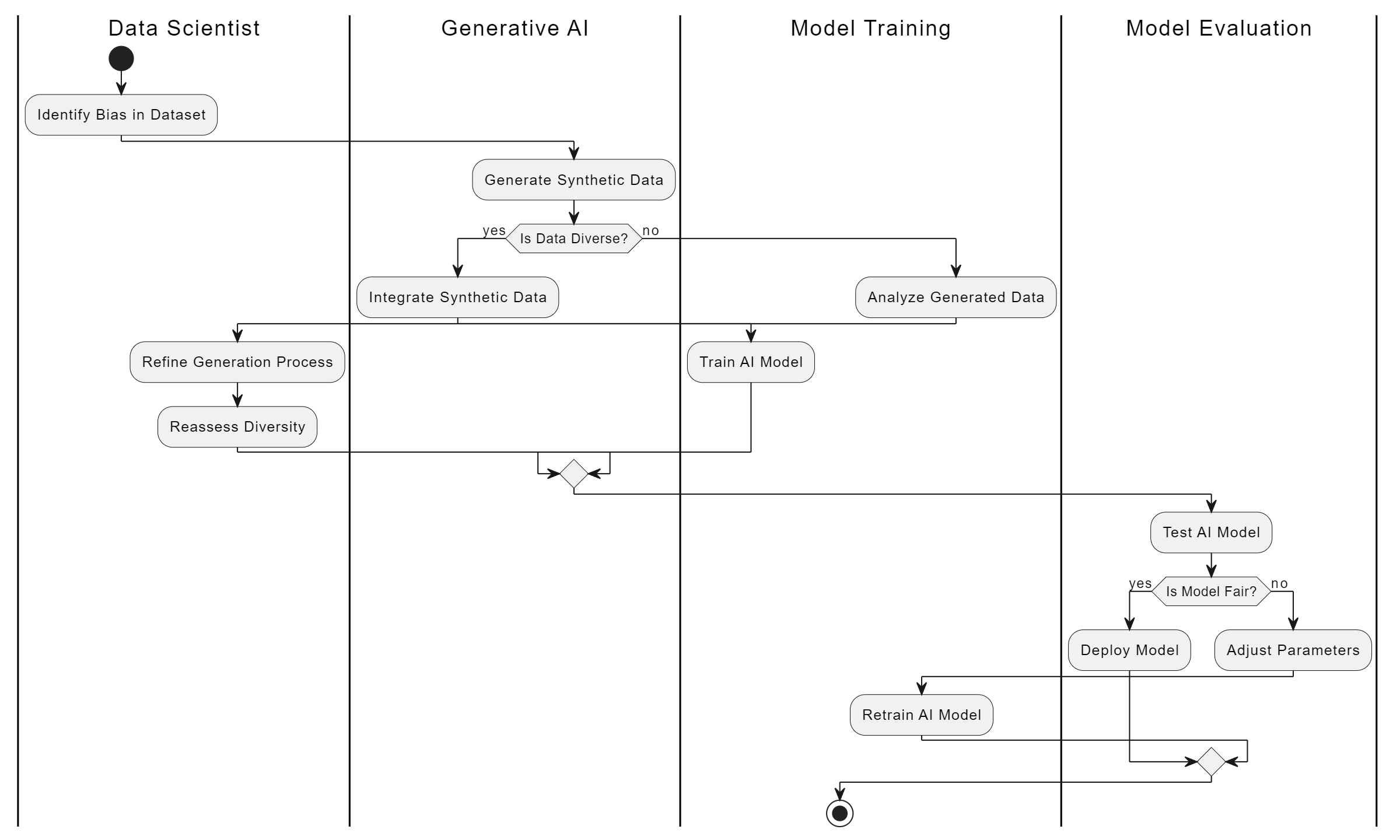

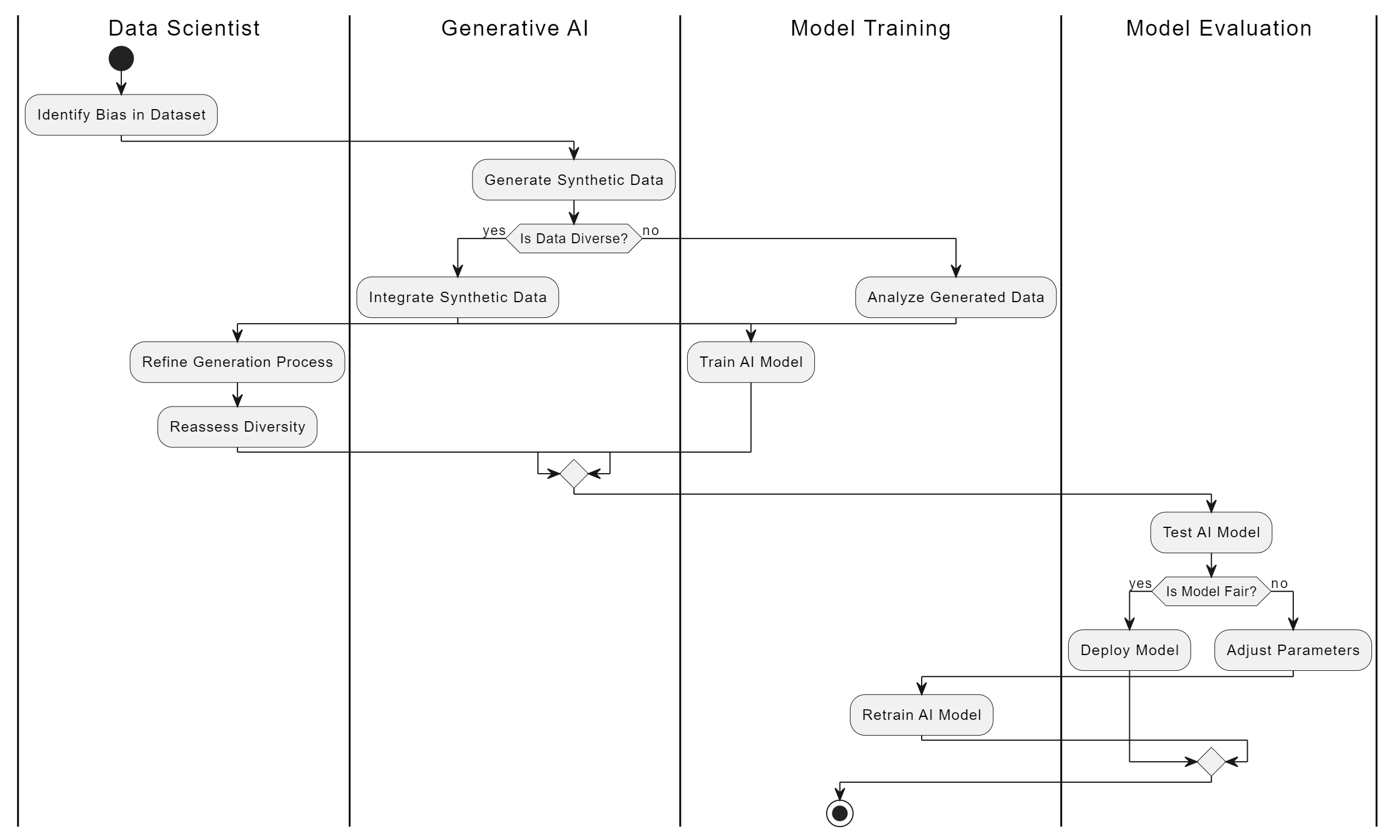

How Generative AI Identifies Bias in Datasets

Generative AI has become a powerful tool for identifying and addressing bias in datasets. By analyzing patterns and generating synthetic data, it helps you uncover hidden imbalances that could affect the fairness of your AI models. Let’s explore how it detects underrepresentation, leverages pre-trained models, and flags systemic bias.

Detecting Patterns of Underrepresentation

Underrepresentation in datasets often leads to skewed AI outcomes. Generative AI excels at spotting these gaps by analyzing the distribution of data points across different categories. For example, if your dataset contains fewer samples from certain demographic groups, generative AI can highlight these disparities. This ensures you’re aware of the missing representation before training your models.

Generative AI doesn’t just stop at detection. It provides actionable insights by visualizing these patterns. Imagine you’re working with a recruitment dataset. If the data lacks diversity in gender or ethnicity, generative AI can pinpoint these deficiencies. This allows you to take corrective measures, such as augmenting the dataset with more balanced samples.
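As a rough illustration of the detection step, the sketch below counts how often each group appears and flags the ones below a minimum share. It is plain counting rather than a generative model, and the function name `representation_report`, the `min_share` threshold of 0.2, and the toy applicant data are all assumptions made for this example.

```python
from collections import Counter

def representation_report(records, attribute, min_share=0.2):
    """Return each group's count, share, and whether its share is below min_share."""
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    report = {}
    for group, n in counts.items():
        share = n / total
        report[group] = {
            "count": n,
            "share": share,
            "underrepresented": share < min_share,
        }
    return report

# Hypothetical toy recruitment data, invented for illustration only.
applicants = (
    [{"gender": "male"}] * 70
    + [{"gender": "female"}] * 25
    + [{"gender": "nonbinary"}] * 5
)

report = representation_report(applicants, "gender")
for group, stats in report.items():
    print(group, stats)
```

The right threshold depends on your population and use case, so treat `min_share` as a starting point for discussion rather than a rule.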

A 2023 study published in Analytics Vidhya emphasized the practicality of using generative AI to mitigate bias. It demonstrated how identifying underrepresentation early can prevent discriminatory outcomes in AI systems.

Leveraging Pre-trained Models to Uncover Bias

Pre-trained models play a crucial role in generative AI’s ability to identify bias. These models, trained on vast datasets, come equipped with the knowledge to recognize patterns that might go unnoticed by human analysts. You can use these models to analyze your data and detect subtle biases embedded within it.

For instance, pre-trained models can identify historical biases in datasets. If your data reflects outdated societal norms, generative AI can flag these issues. This is particularly useful in fields like healthcare or finance, where biased data can lead to unequal treatment or unfair decisions.

By leveraging pre-trained models, you gain a deeper understanding of your data’s limitations. This empowers you to make informed decisions about how to address bias effectively. As highlighted by Forbes in 2023, generative AI tools are invaluable for uncovering the social inequalities present in training datasets.

Using Generative AI to Analyze and Flag Systemic Bias

Systemic bias often stems from deeply ingrained societal structures. Generative AI helps you tackle this challenge by analyzing datasets for recurring patterns of inequality. It uses advanced algorithms to identify systemic issues, such as gender pay gaps or racial disparities in hiring practices.

Once generative AI identifies systemic bias, it flags these issues for further investigation. For example, if your marketing dataset shows a consistent preference for one demographic over others, generative AI will bring this to your attention. This enables you to take proactive steps to create more inclusive campaigns.

According to AI Multiple (2024), all AI models tend to reproduce the biases found in their training data. Generative AI, however, offers a unique advantage by not only identifying these biases but also providing solutions to mitigate them.

By using generative AI to analyze and flag systemic bias, you ensure your AI systems align with ethical standards. This fosters trust and transparency, both of which are essential for building fair and inclusive technologies.

Mechanisms Generative AI Uses to Reduce Bias

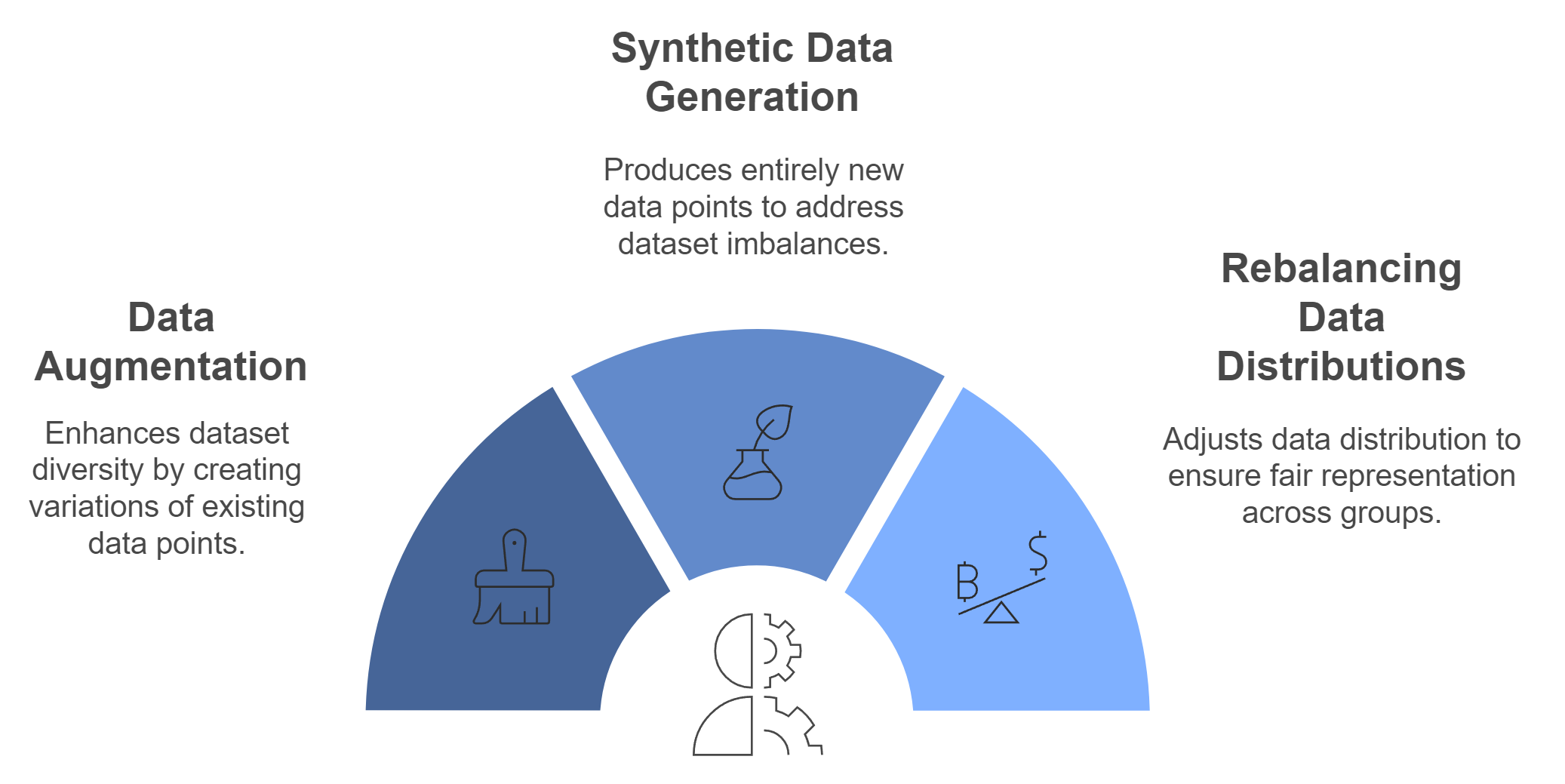

Generative AI offers practical solutions to reduce bias in datasets, ensuring fairer and more inclusive AI systems. By leveraging advanced techniques, you can create balanced data that improves representation and minimizes inequalities. Let’s explore how generative AI achieves this through data augmentation, synthetic data generation, and rebalancing data distributions.

Data Augmentation for Improved Representation

Data augmentation is a powerful way to enhance the diversity of your datasets. Generative AI helps you achieve this by creating variations of existing data points. For example, if your dataset lacks representation from certain demographic groups, generative AI can generate new samples that fill these gaps. This ensures your AI models learn from a broader range of perspectives.

Imagine you’re working with an image recognition dataset. If the dataset includes fewer images of individuals from underrepresented groups, generative AI can augment it by generating additional images. These new samples maintain the original data’s quality while improving representation. This process not only reduces bias but also strengthens your AI model’s ability to make accurate predictions across diverse scenarios.

A study by AI Ethics Journal in 2023 highlighted how data augmentation with generative AI significantly improved fairness in facial recognition systems. By increasing the representation of minority groups, the models achieved more equitable outcomes.

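Using data augmentation, you can address some imbalances without running a full new data collection effort, saving time and resources. Because augmented samples are derived from the records you already have, they work best as a complement to real data rather than a replacement for it. Applied this way, the technique helps your datasets better reflect the diversity of the real world, fostering transparency and fairness in AI applications.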
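To make "creating variations of existing data points" concrete, here is a minimal sketch for tabular data. It perturbs numeric fields with small random noise, which is a much simpler technique than a trained generative model and is used here only as a stand-in. The function `augment_by_jitter`, the `scale` parameter, and the sample records are hypothetical.

```python
import random

def augment_by_jitter(records, numeric_fields, n_new, scale=0.05, seed=0):
    """Create n_new variations of existing records by perturbing numeric fields."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(records)
        new = dict(base)  # copy, so categorical fields such as the group label are kept
        for field in numeric_fields:
            # Multiplicative Gaussian noise with standard deviation `scale`.
            new[field] = base[field] * (1 + rng.gauss(0, scale))
        new["synthetic"] = True  # tag generated rows so they stay distinguishable
        synthetic.append(new)
    return synthetic

# Hypothetical records from an underrepresented group.
minority = [
    {"group": "B", "age": 34, "income": 52000},
    {"group": "B", "age": 41, "income": 61000},
    {"group": "B", "age": 29, "income": 48000},
]

extra = augment_by_jitter(minority, ["age", "income"], n_new=5)
print(len(extra), "augmented records")
```

Tagging each generated row keeps real and augmented data separable later, which makes audits and validation much easier.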

Synthetic Data Generation to Address Imbalances

Synthetic data generation takes bias reduction a step further by creating entirely new data points. Generative AI uses advanced algorithms to produce synthetic data that mirrors the characteristics of your original dataset while addressing its shortcomings. This approach is especially useful when dealing with sensitive or limited data.

For instance, in healthcare analytics, patient data often lacks diversity due to privacy concerns or sampling limitations. Generative AI can generate synthetic patient records that represent underrepresented groups, ensuring your AI models provide equitable healthcare solutions. These synthetic datasets maintain the statistical properties of real data, making them reliable for training AI systems.

According to AI Multiple (2024), generative AI tools excel at producing synthetic data that reduces gender and racial biases in training datasets. This capability makes them invaluable for industries like finance, healthcare, and recruitment.

Synthetic data generation also allows you to experiment with different scenarios, helping you identify potential biases before deploying your AI models. By addressing imbalances proactively, you can build systems that prioritize fairness and inclusivity.

Rebalancing Data Distributions with Generative Models

Rebalancing data distributions is another key mechanism generative AI employs to reduce bias. When your dataset skews heavily toward certain groups, it can lead to unfair AI outcomes. Generative AI helps you correct this by generating data points that balance the distribution across all categories.

For example, if your marketing dataset overrepresents one demographic, generative AI can create data points for underrepresented groups. This ensures your campaigns resonate with a wider audience, promoting diversity and inclusion. Rebalancing data distributions not only improves fairness but also enhances the effectiveness of your AI models.

Generative models like GANs (Generative Adversarial Networks) and VAEs (Variational Autoencoders) play a crucial role in this process. These models learn the distribution of your dataset and can then sample new data points from it. When they are conditioned on a group label, they can generate samples specifically for the groups you need more of. By rebalancing distributions this way, you can reduce the effect of systemic biases and create datasets that better reflect real-world diversity.

As noted by Forbes in 2023, rebalancing data distributions with generative AI leads to more transparent and ethical AI systems. This approach ensures your models perform well across all user groups, building trust and credibility.

By using generative AI to rebalance data distributions, you can create datasets that empower your AI systems to make fair and accurate decisions. This step is essential for developing technologies that benefit everyone, regardless of their background.
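The rebalancing idea can be sketched in a few lines. The example below tops up every group to the size of the largest one by interpolating between random pairs of real records from the same group, which is loosely similar to SMOTE-style oversampling. That interpolation is an assumption standing in for a trained GAN or VAE, and `rebalance` and the customer data are hypothetical names for illustration.

```python
import random
from collections import Counter, defaultdict

def rebalance(records, attribute, numeric_fields, seed=0):
    """Top up every group to the size of the largest group with interpolated records."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for r in records:
        by_group[r[attribute]].append(r)
    target = max(len(rows) for rows in by_group.values())

    balanced = list(records)
    for group, rows in by_group.items():
        for _ in range(target - len(rows)):
            a, b = rng.choice(rows), rng.choice(rows)
            t = rng.random()
            new = dict(a)
            for field in numeric_fields:
                # A point on the line segment between two real same-group records.
                new[field] = a[field] + t * (b[field] - a[field])
            new["synthetic"] = True
            balanced.append(new)
    return balanced

# Hypothetical marketing data that overrepresents the younger age band.
customers = (
    [{"age_band": "18-34", "spend": 40.0 + i} for i in range(8)]
    + [{"age_band": "55+", "spend": 90.0 + i} for i in range(2)]
)

balanced = rebalance(customers, "age_band", ["spend"])
counts = Counter(r["age_band"] for r in balanced)
print(counts)
```

Equal group sizes are only one possible target. Depending on the application, matching the proportions of the real population you serve may be the better goal.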

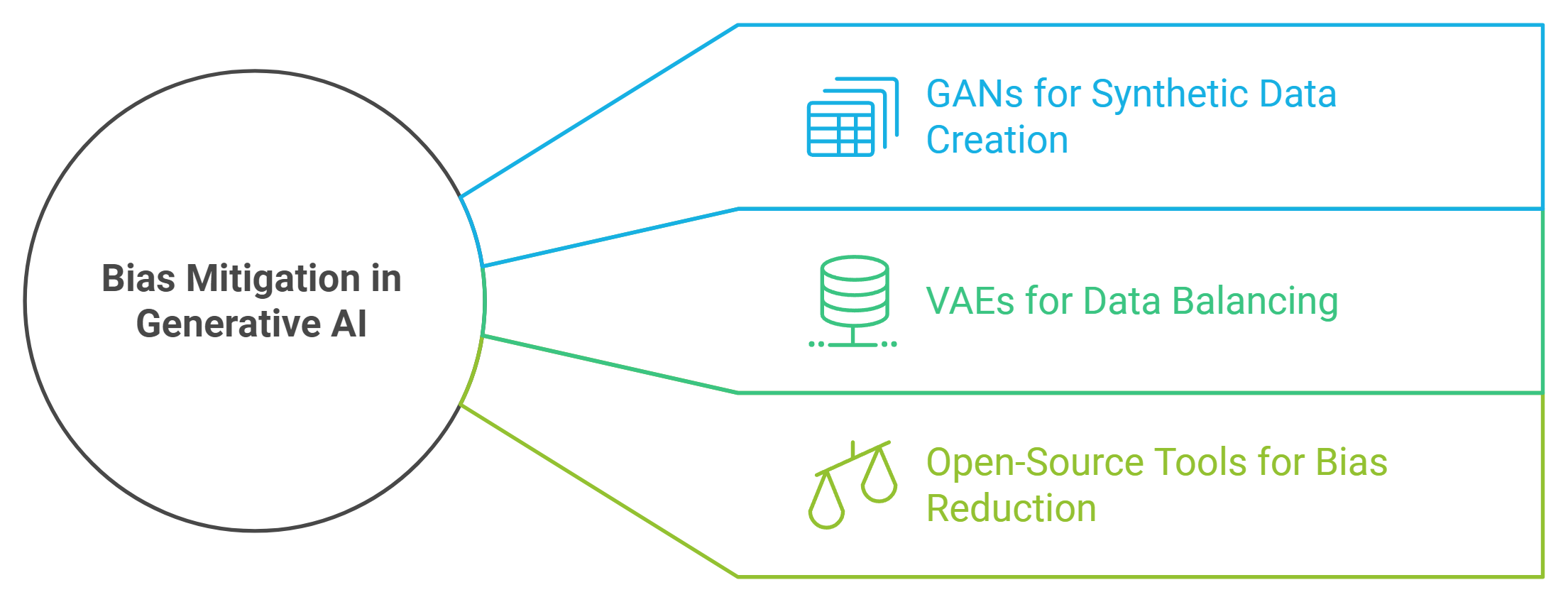

Tools and Techniques for Bias Mitigation in Generative AI

Generative AI has revolutionized how you approach bias mitigation, offering tools and techniques that actively address imbalances in datasets. By leveraging advanced methods, you can create fairer AI systems that reflect diverse perspectives. Let’s dive into some of the most effective tools and techniques you can use to reduce bias in your data.

Using GANs (Generative Adversarial Networks) for Synthetic Data Creation

Generative Adversarial Networks (GANs) are a game-changer when it comes to creating synthetic data. GANs consist of two neural networks, the generator and the discriminator, that are trained against each other. The generator creates new data points, while the discriminator tries to tell them apart from real ones. As each network improves, it forces the other to improve too, and this back-and-forth pushes the generator toward synthetic data that closely mimics real-world datasets.
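One way to make the interplay between the two networks concrete is the objective from the original GAN formulation, shown here as a sketch. The symbols are conventional notation rather than anything defined in this article: G is the generator, D is the discriminator, p_data is the distribution of real data, and p_z is the noise distribution the generator samples from.

```latex
\min_{G} \max_{D} \;
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to maximize this value by scoring real samples high and generated samples low, while the generator tries to minimize it by producing samples the discriminator accepts as real.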

You can use GANs to address underrepresentation in your datasets. For example, if your dataset lacks diversity in gender or ethnicity, GANs can generate synthetic samples to fill those gaps. This helps your AI models learn from a more balanced dataset, reducing the risk of biased outcomes.

According to AI Ethics Journal (2023), GANs have been instrumental in creating synthetic data that improves fairness in applications like facial recognition and recruitment.

The flexibility of GANs makes them ideal for industries like healthcare, finance, and marketing. Whether you’re working with image data or text-based datasets, GANs can help you create synthetic data that promotes inclusivity and fairness.

Applying Variational Autoencoders (VAEs) for Data Balancing

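Variational Autoencoders (VAEs) offer another powerful technique for balancing datasets. Unlike GANs, VAEs have no discriminator. Instead, an encoder compresses each record into a compact latent representation and a decoder learns to reconstruct the data from it, so the model captures the underlying structure of your data and can generate new samples by decoding points from the latent space. This makes them particularly useful for addressing subtle biases that might not be immediately obvious.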
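As a rough sketch of what learning the underlying structure means in practice, a VAE is typically trained to maximize the evidence lower bound (ELBO). The notation below is the conventional one and is an assumption for this example: q_φ(z|x) is the encoder, p_θ(x|z) is the decoder, and p(z) is a simple prior such as a standard normal distribution.

```latex
\mathcal{L}(\theta, \phi; x) =
  \mathbb{E}_{q_{\phi}(z \mid x)}\big[\log p_{\theta}(x \mid z)\big]
  - D_{\mathrm{KL}}\big(q_{\phi}(z \mid x) \,\|\, p(z)\big)
```

The first term rewards accurate reconstruction of the input, and the second keeps the latent space close to the prior, which is what allows new samples to be generated by decoding points drawn from p(z).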

VAEs excel at rebalancing data distributions. For instance, if your dataset skews heavily toward one demographic, VAEs can generate new data points to even out the distribution. This ensures your AI models perform well across all user groups, enhancing both accuracy and fairness.

A 2024 report by AI Multiple highlighted how VAEs have been used to mitigate gender and racial biases in training datasets, particularly in sensitive fields like healthcare and education.

By incorporating VAEs into your workflow, you can tackle biases at their root. This not only improves the quality of your AI models but also builds trust with users who expect fair and transparent decision-making.

Exploring Open-Source Tools for Bias Reduction in Data Analytics

Open-source tools provide accessible and cost-effective solutions for bias mitigation. These tools often come with pre-built algorithms and frameworks designed to identify and reduce bias in datasets. You can integrate them into your existing data analytics pipeline to enhance fairness and inclusivity.

Some popular open-source tools include:

AI Fairness 360: Developed by IBM, this toolkit offers a range of algorithms to detect and mitigate bias in datasets. It provides actionable insights to help you create more equitable AI systems.

Fairlearn: This Microsoft tool focuses on improving fairness in machine learning models. It includes metrics and visualizations to help you understand and address bias.

Themis-ML: This tool specializes in identifying discrimination in datasets and offers techniques to correct it.

These tools empower you to take control of your data and ensure your AI models align with ethical standards. By using open-source solutions, you can stay ahead of potential biases and build systems that prioritize fairness.

As noted by Generative AI Tools and Biases, open-source tools play a crucial role in addressing the biases that generative AI might inadvertently amplify.

Incorporating these tools into your workflow doesn’t just improve your AI models—it also demonstrates your commitment to ethical practices. This can enhance your reputation and foster trust among your users.

By using GANs, VAEs, and open-source tools, you can effectively mitigate bias in generative AI. These techniques allow you to create balanced datasets that reflect the diversity of the real world. When you prioritize fairness and inclusivity, you not only improve your AI systems but also contribute to a more equitable future.

Real-World Applications of Generative AI in Bias Reduction

Generative AI has proven its value in addressing bias across various industries. By creating balanced datasets and uncovering hidden inequalities, it helps you build systems that promote fairness and inclusivity. Let’s explore three real-world examples where generative AI has made a significant impact.

Case Study: Reducing Gender Bias in Recruitment Data

Recruitment processes often suffer from gender bias, which can limit opportunities for qualified candidates. Generative AI offers a solution by analyzing recruitment data and identifying patterns that favor one gender over another. For example, if your hiring data shows a preference for male candidates, generative AI can generate synthetic profiles of qualified female candidates to balance the dataset.

This approach ensures your AI-powered hiring tools evaluate candidates based on merit rather than gender. By rebalancing the data, you create a fairer recruitment process that values diversity. Companies like LinkedIn have already started using AI to address gender disparities in hiring, setting an example for others to follow.

“Integrating generative AI into decision-making seems like the antidote to bias, particularly in hiring.” This insight highlights how generative AI can help you overcome unconscious biases that may seep into recruitment algorithms.

By using generative AI, you not only reduce gender bias but also attract a wider pool of talent. This fosters an inclusive workplace culture where everyone has an equal chance to succeed.

Case Study: Addressing Racial Bias in Healthcare Analytics

Racial bias in healthcare data can lead to unequal treatment and poor outcomes for marginalized groups. Generative AI helps you tackle this issue by generating synthetic patient records that represent underrepresented racial groups. These records ensure your AI models provide equitable healthcare solutions for all patients.

For instance, if your healthcare data skews heavily toward white patients, generative AI can create synthetic data for Black, Hispanic, or Asian patients. This improves the accuracy of AI models in diagnosing and treating diverse populations. A 2024 report emphasized how generative AI tools excel at reducing racial disparities in healthcare analytics, making them invaluable for creating fairer systems.

By addressing racial bias, you build trust with patients and ensure your healthcare solutions meet the needs of everyone, regardless of their race. Generative AI empowers you to create systems that prioritize equity and inclusivity in medical decision-making.

Case Study: Balancing Customer Demographics in Marketing Campaigns

Marketing campaigns often target specific demographics, which can lead to biased outcomes. Generative AI helps you balance customer data by identifying underrepresented groups and generating synthetic profiles to fill these gaps. This ensures your campaigns resonate with a diverse audience.

For example, if your marketing data overrepresents younger age groups, generative AI can create synthetic profiles for older customers. This allows you to design campaigns that appeal to all age groups, improving engagement and inclusivity. Companies like Netflix use AI to personalize recommendations for users of all ages, demonstrating the power of balanced data in marketing.

By leveraging generative AI, you create campaigns that reflect the diversity of your customer base. This not only enhances your brand’s reputation but also drives better business outcomes by reaching a broader audience.

Generative AI transforms how you address bias in real-world applications. Whether it’s reducing gender disparities in hiring, tackling racial inequalities in healthcare, or balancing age demographics in marketing, generative AI provides practical solutions for creating fairer systems. By adopting these techniques, you take a responsible approach to data analytics, ensuring your AI models benefit everyone.

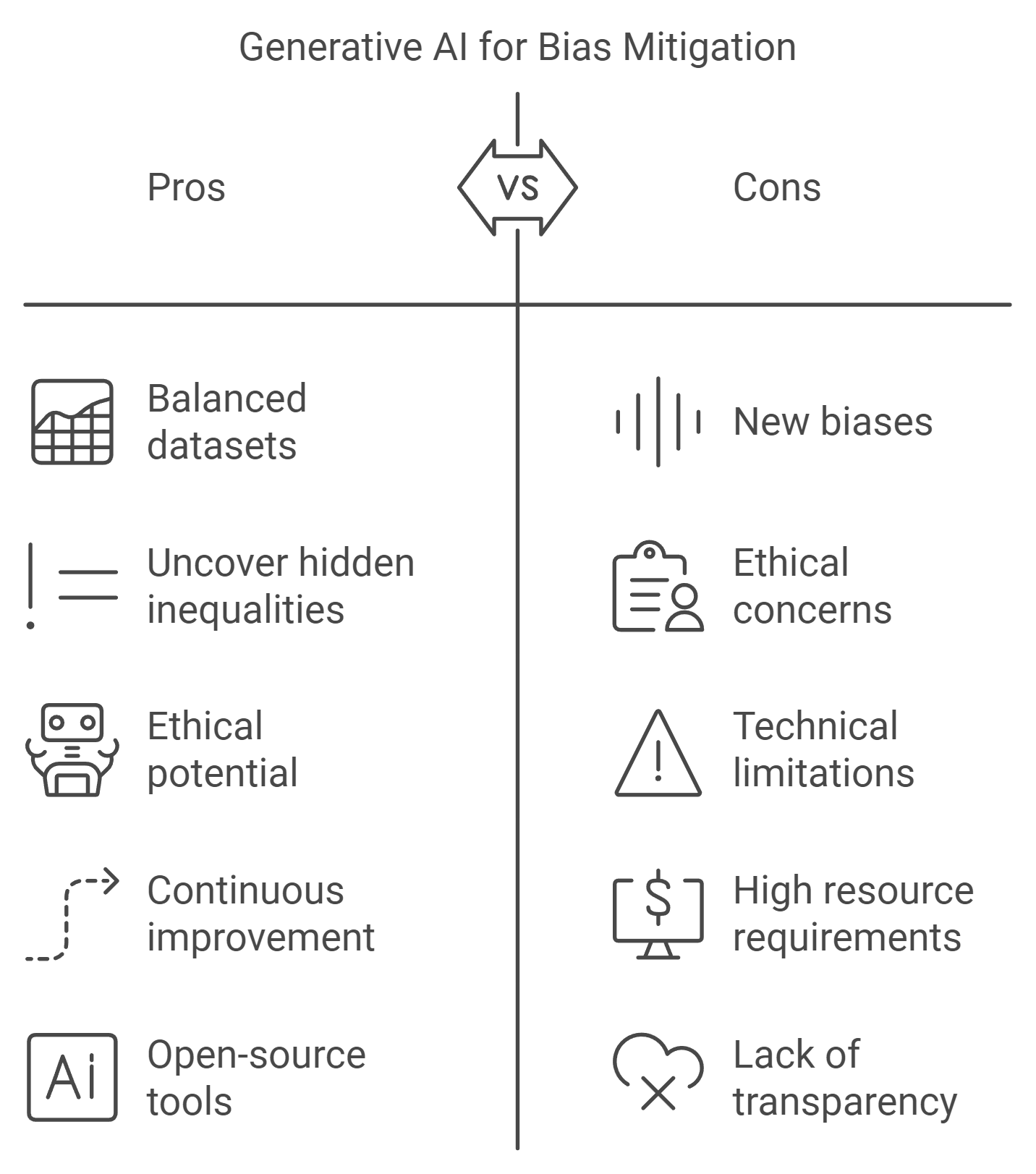

Challenges and Limitations of Generative AI in Bias Mitigation

Generative AI holds immense potential for reducing bias, but it’s not without its challenges. While it can create balanced datasets and uncover hidden inequalities, it also introduces risks and limitations that you must address to ensure ethical and effective outcomes. Let’s explore some of the key challenges you might face when using generative AI for bias mitigation.

Risks of Introducing New Biases Through Synthetic Data

When you use generative AI to create synthetic data, there’s always a risk of unintentionally introducing new biases. Synthetic data aims to fill gaps in representation, but if the algorithms generating this data are flawed, they might replicate or even amplify existing biases. For example, if the training data already contains subtle patterns of discrimination, the synthetic data could reinforce these patterns instead of correcting them.

This issue becomes particularly concerning in sensitive fields like healthcare or criminal justice. Imagine a healthcare dataset where certain racial groups are underrepresented. If generative AI creates synthetic data based on biased patterns, it might lead to unequal treatment recommendations. Similarly, in criminal justice, biased synthetic data could result in unfair sentencing predictions.

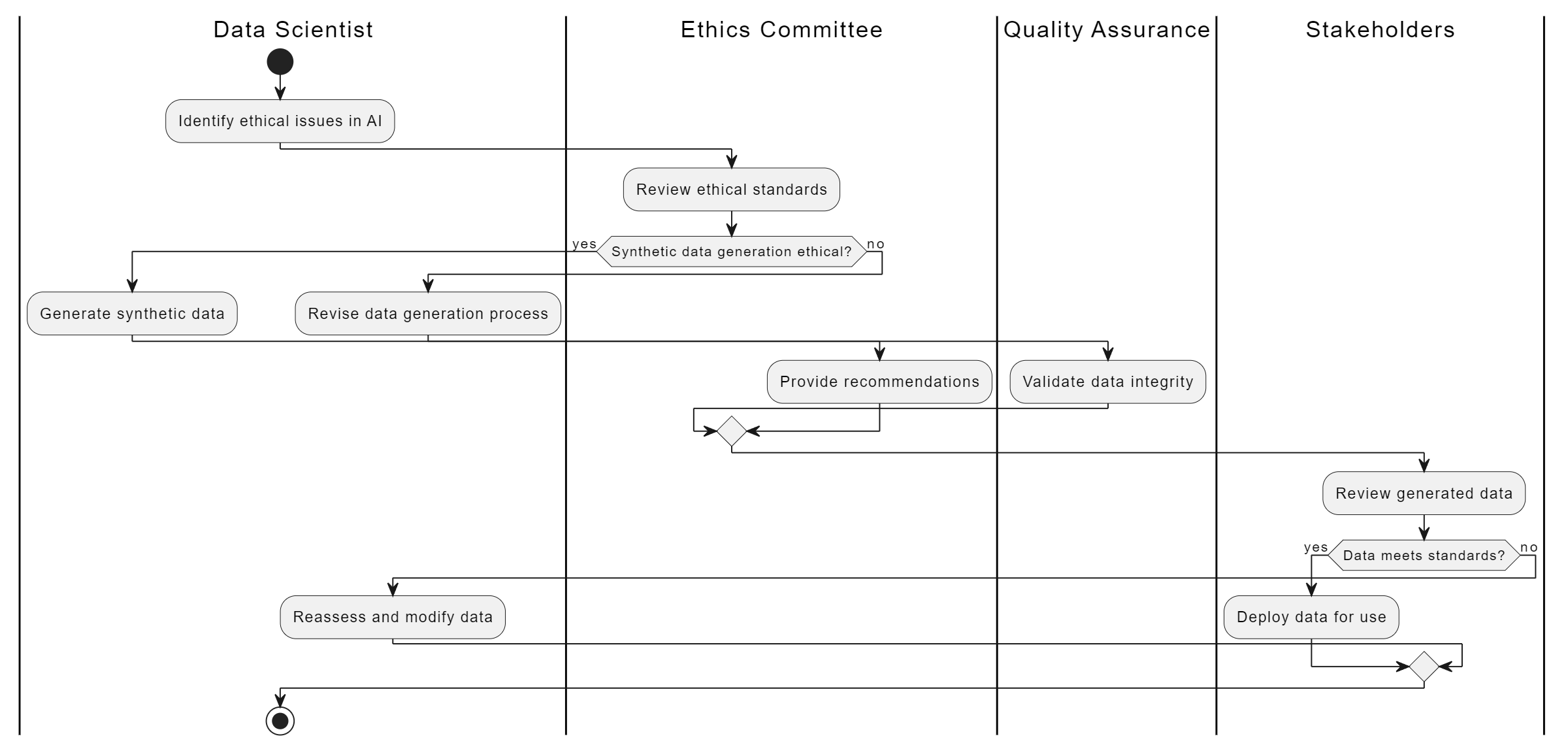

To minimize these risks, you need to carefully audit the synthetic data and validate its fairness before using it. Regular testing and human oversight play a crucial role in ensuring that generative AI doesn’t perpetuate or worsen discrimination. As highlighted by industry experts, addressing these risks requires a combination of robust algorithms and ethical vigilance.

Ethical Concerns in Data Generation and Usage

Ethical considerations are at the heart of generative AI’s challenges. When you generate synthetic data, you must ensure it adheres to ethical guidelines and avoids causing harm. For instance, synthetic data should never misrepresent real individuals or create scenarios that could lead to harmful decisions.

One major ethical concern involves the potential misuse of synthetic data. If someone uses generative AI irresponsibly, it could produce content that perpetuates stereotypes or reinforces systemic inequalities. For example, in hiring processes, synthetic profiles might unintentionally favor one demographic over another, leading to biased hiring decisions.

Another ethical dilemma arises from the lack of transparency in how generative AI models operate. If you can’t fully understand or explain how the AI generates data, it becomes difficult to ensure its fairness. This lack of transparency can erode trust and raise questions about the ethical implications of using such technology.

To address these concerns, you should implement strict ethical guidelines and content filtering mechanisms. Collaborating with domain experts and ethicists can help you navigate these challenges and ensure responsible use of generative AI.

Technical Constraints of Current Generative AI Models

Generative AI models, while powerful, have technical limitations that can hinder their effectiveness in bias mitigation. These models rely heavily on the quality and diversity of their training data. If the training data lacks representation or contains biases, the AI will struggle to produce fair and balanced outputs.

Another technical challenge lies in the complexity of real-world biases. Bias often stems from deeply ingrained societal structures, making it difficult for AI models to fully understand or address these issues. For example, generative AI might identify surface-level imbalances in a dataset but fail to recognize the systemic factors driving those imbalances.

Additionally, current generative AI models require significant computational resources, which can limit their accessibility. Smaller organizations or individuals may find it challenging to implement these technologies due to high costs and technical expertise requirements.

To overcome these constraints, you should focus on improving the quality of training data and investing in more advanced algorithms. Open-source tools and collaborative efforts can also help make generative AI more accessible and effective for bias mitigation.

By understanding these challenges, you can take proactive steps to address them and maximize the potential of generative AI. Ethical oversight, rigorous testing, and continuous improvement are essential for ensuring that generative AI contributes to a fairer and more inclusive future.

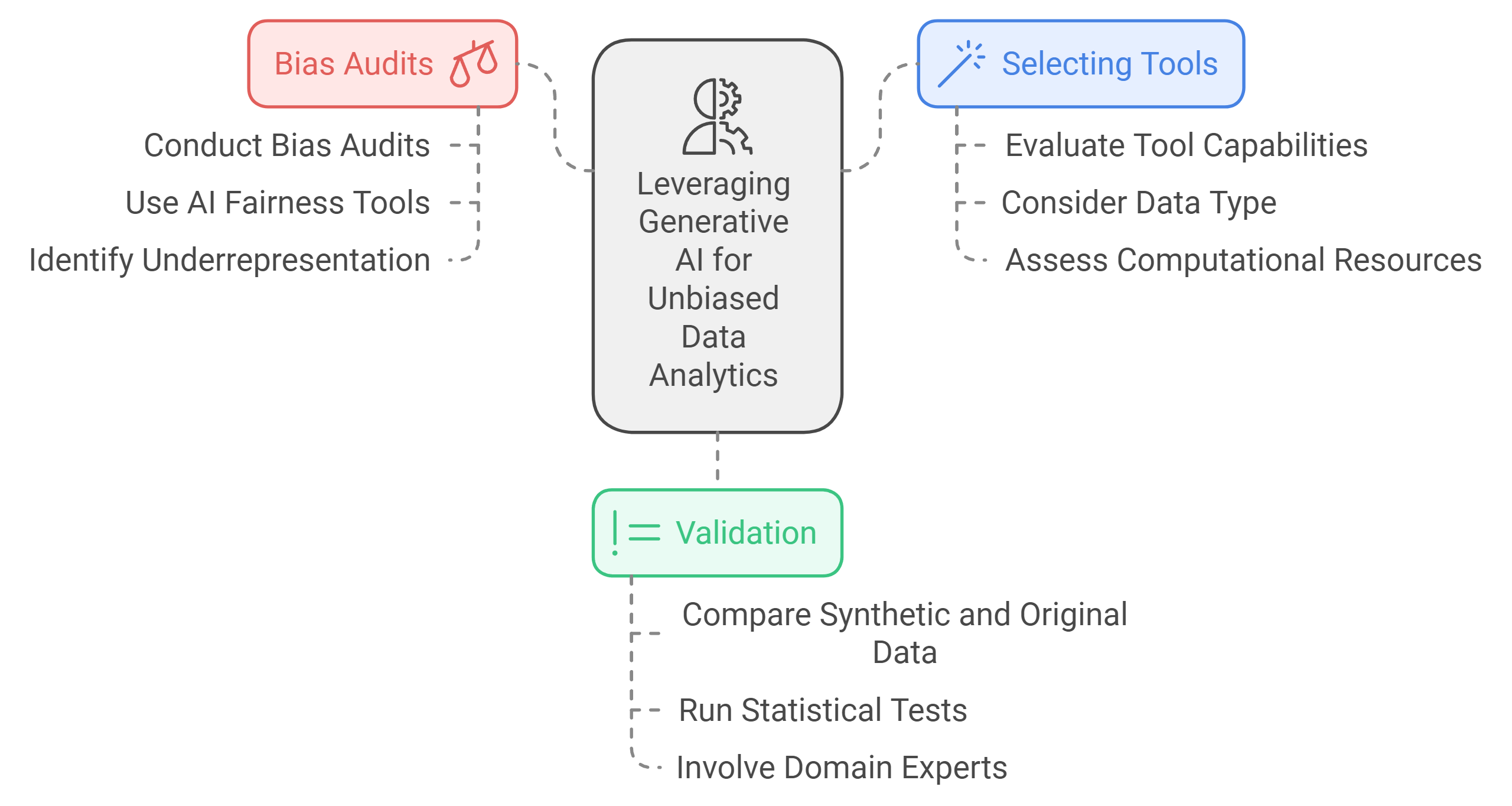

Actionable Steps to Leverage Generative AI for Unbiased Data Analytics

Generative AI offers powerful tools to help you create fair and balanced datasets. However, to truly harness its potential, you need a clear strategy. Here’s a step-by-step guide to ensure you’re using generative AI effectively for unbiased data analytics.

Conducting Comprehensive Bias Audits Before Data Generation

Before diving into data generation, you must first understand the biases present in your existing datasets. A bias audit helps you identify gaps or imbalances that could skew your AI models. Start by analyzing your data for underrepresentation or patterns that favor certain groups over others. For instance, does your dataset include enough samples from diverse demographics? Are there any historical trends that might perpetuate inequality?

Use tools like AI Fairness 360 or Fairlearn to assist with this process. These tools provide metrics and visualizations that make it easier to spot biases. For example, if your recruitment data shows a disproportionate number of male candidates, these tools can highlight the imbalance. Once you’ve identified the issues, you’ll know exactly what needs to be addressed during data generation.
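To show the kind of metric such an audit produces, here is a hand-rolled sketch that computes the selection rate per group and the ratio between the lowest and highest rate. It does not use AI Fairness 360 or Fairlearn. The function names, the hiring data, and the 0.8 cutoff (borrowed from the commonly cited four-fifths rule of thumb) are assumptions for illustration.

```python
from collections import defaultdict

def selection_rates(records, group_field, outcome_field):
    """Share of positive outcomes (for example, hires) within each group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for r in records:
        totals[r[group_field]] += 1
        selected[r[group_field]] += int(r[outcome_field])
    return {g: selected[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity).

    Assumes at least one group has a non-zero selection rate.
    """
    return min(rates.values()) / max(rates.values())

# Hypothetical historical hiring data, invented for this example.
history = (
    [{"gender": "male", "hired": 1}] * 30
    + [{"gender": "male", "hired": 0}] * 70
    + [{"gender": "female", "hired": 1}] * 6
    + [{"gender": "female", "hired": 0}] * 34
)

rates = selection_rates(history, "gender", "hired")
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # four-fifths rule of thumb, used here as an assumption
print(rates, ratio, needs_review)
```

A low ratio is a signal to investigate, not proof of discrimination on its own. Differences in the applicant pool or in how outcomes were recorded can also move these numbers.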

“Bias audits are the foundation of ethical AI development,” as noted by researchers in a recent survey. Among those surveyed, 31% actively used generative AI tools, with many focusing on refining datasets to reduce bias.

By conducting thorough audits, you set the stage for creating datasets that reflect fairness and inclusivity.

Selecting the Right Generative AI Tools for Your Specific Needs

Not all generative AI tools are created equal. Choosing the right one depends on your specific goals and the type of data you’re working with. For example, if you need to generate synthetic images, models such as GANs (Generative Adversarial Networks) are highly effective. On the other hand, if you’re dealing with text-based data, consider using pre-trained language models or VAEs (Variational Autoencoders).

When selecting a tool, evaluate its capabilities and limitations. Does it support the type of data you need to generate? Can it address the specific biases you’ve identified? Open-source fairness libraries like Themis-ML offer flexibility and customization, and they can complement a generative model by measuring whether the resulting data and models are actually fairer. Additionally, consider the computational resources required. Some tools demand significant processing power, which might not be feasible for smaller teams.

A growing number of researchers use generative AI tools weekly to refine datasets and troubleshoot issues. This trend highlights the importance of selecting tools that align with your workflow and objectives.

By choosing the right tools, you ensure that your efforts to reduce bias are both efficient and effective.

Regularly Validating and Testing Generated Data for Fairness and Accuracy

Once you’ve generated new data, the work doesn’t stop there. Validation and testing are critical to ensure the data meets your fairness and accuracy standards. Start by comparing the synthetic data to your original dataset. Does it address the gaps you identified during the bias audit? Does it introduce any new biases?

Use statistical tests to evaluate the distribution of your data. For example, check whether the synthetic data accurately represents underrepresented groups without overcompensating. You can also run your AI models on the new dataset to see how they perform. Are the predictions more equitable? Do they align with real-world expectations?
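One simple statistical check is to compare the distribution of a numeric feature in the real and synthetic data. The sketch below computes a two-sample Kolmogorov-Smirnov statistic by hand, using only the standard library. The function name `ks_statistic` and the sample values are hypothetical, and the sketch stops at the statistic itself rather than computing a p-value.

```python
from bisect import bisect_right

def ks_statistic(sample_a, sample_b):
    """Maximum gap between two empirical CDFs (0 = identical, 1 = fully separated)."""
    a, b = sorted(sample_a), sorted(sample_b)
    max_gap = 0.0
    for v in sorted(set(a) | set(b)):
        cdf_a = bisect_right(a, v) / len(a)  # share of sample_a at or below v
        cdf_b = bisect_right(b, v) / len(b)  # share of sample_b at or below v
        max_gap = max(max_gap, abs(cdf_a - cdf_b))
    return max_gap

# Hypothetical values of one numeric feature for a single demographic group.
real = [50, 52, 55, 58, 60]
synthetic_close = [51, 53, 54, 57, 61]
synthetic_shifted = [80, 82, 85, 88, 90]

close_gap = ks_statistic(real, synthetic_close)
shifted_gap = ks_statistic(real, synthetic_shifted)
print(close_gap, shifted_gap)
```

A small gap suggests the synthetic values follow the real distribution for that feature, while a large gap suggests the generator has drifted. Running the check separately for each group helps reveal whether quality is uneven across groups.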

Human oversight plays a key role in this process. Involve domain experts to review the data and provide feedback. Their insights can help you spot issues that automated tools might miss. Regular validation ensures that your datasets remain reliable and unbiased over time.

According to industry experts, frequent testing and validation are essential for maintaining the integrity of AI systems. This practice builds trust and ensures your models deliver accurate, fair results.

By prioritizing validation, you create a feedback loop that continuously improves the quality of your data and AI models.

Taking these actionable steps ensures that you’re leveraging generative AI responsibly and effectively. By auditing your data, selecting the right tools, and validating your results, you can create datasets that drive ethical and inclusive AI systems.

The Future of Generative AI in Reducing Bias

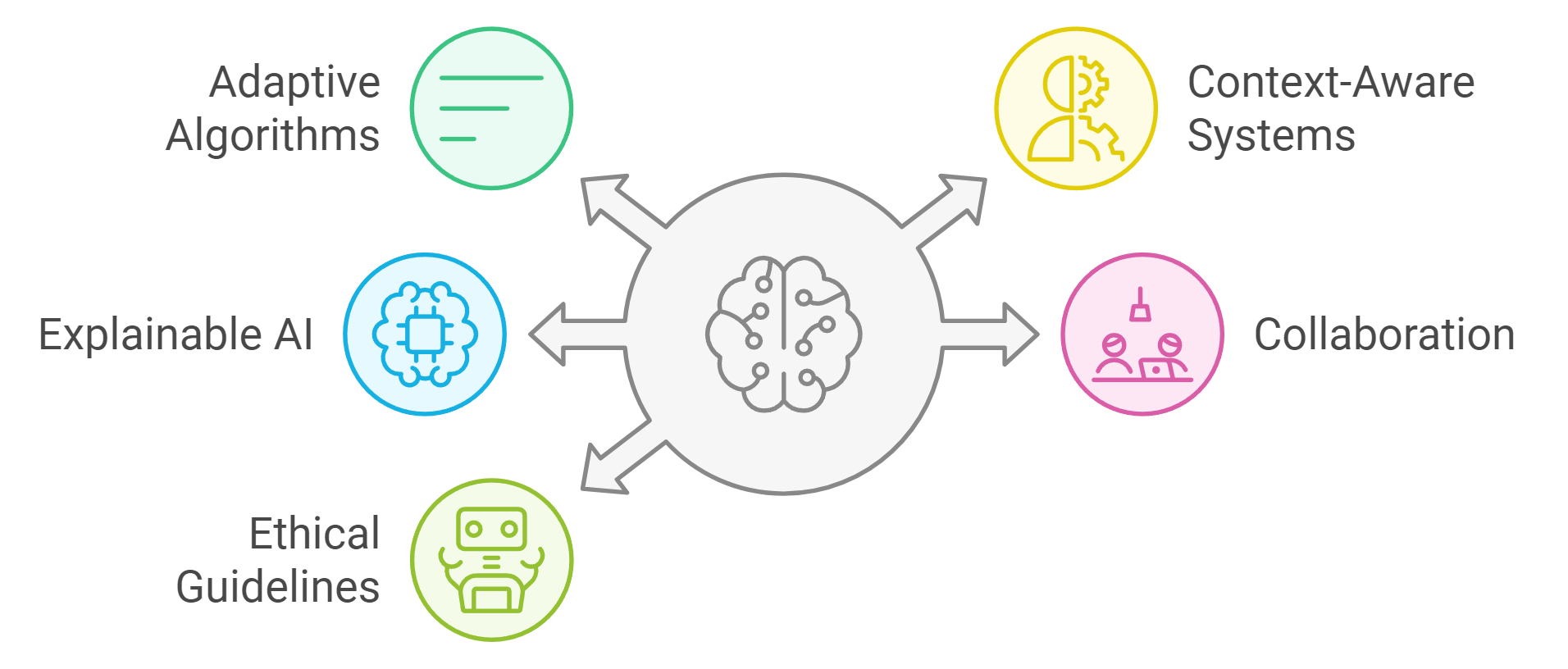

The future of generative AI holds immense promise for addressing bias in ways that were once unimaginable. As technology evolves, you’ll see new trends, collaborations, and ethical frameworks shaping how AI systems operate. Let’s explore what lies ahead and how you can play a role in creating fairer, more inclusive AI solutions.

Emerging Trends in Generative AI for Bias Mitigation

Generative AI is rapidly advancing, and its potential to mitigate bias continues to grow. One emerging trend is the use of adaptive algorithms that learn to identify and correct biases in real-time. These algorithms analyze data as it’s generated, ensuring that underrepresented groups receive fair representation. For example, adaptive models can dynamically adjust datasets to include diverse perspectives, reducing the risk of skewed outcomes.

Another trend involves context-aware AI systems. These systems consider the cultural, social, and historical contexts of data, allowing you to address biases more effectively. Imagine training an AI model for recruitment. A context-aware system could recognize and correct gender stereotypes embedded in job descriptions, ensuring a more equitable hiring process.

You’ll also notice a growing focus on explainable AI (XAI). This approach emphasizes transparency, helping you understand how generative AI makes decisions. By making AI processes more interpretable, XAI builds trust and ensures accountability. As noted by Forbes, explainable AI will play a critical role in addressing unconscious biases that may seep into AI models.

“The buzz around AI has raised awareness of gaps in fairness, pushing developers to innovate solutions that prioritize inclusivity,” as highlighted by industry experts.

These trends signal a shift toward more ethical and inclusive AI practices, giving you the tools to create systems that truly reflect the diversity of the real world.

The Role of Collaboration Between AI Developers and Domain Experts

Collaboration is key to reducing bias in generative AI. AI developers bring technical expertise, but domain experts provide the contextual knowledge needed to address complex biases. By working together, you can create AI systems that are both technically robust and socially responsible.

For instance, marketing teams often collaborate with data specialists to curate diverse datasets for AI-driven campaigns. This partnership ensures that marketing strategies resonate with a wide audience, avoiding stereotypes or exclusion. Similarly, healthcare professionals can guide AI developers in creating synthetic patient data that represents all demographics, improving the accuracy of medical predictions.

Scaling up review and feedback activities is another critical aspect of collaboration. Regular audits by diverse teams help you identify and address biases early in the development process. As noted by MarTech, engaging multiple stakeholders fosters accountability and ensures that AI systems align with ethical standards.

“Collaboration between developers and domain experts is crucial for curating representative datasets,” according to recent insights from industry leaders.

By fostering collaboration, you not only enhance the quality of your AI systems but also build trust among users who expect fairness and transparency.

Long-Term Implications for Ethical AI Development

The long-term implications of generative AI in bias mitigation extend far beyond technology. As you adopt these tools, you contribute to a broader movement toward ethical AI development. This involves creating systems that prioritize fairness, inclusivity, and trust.

One significant implication is the establishment of ethical guidelines for AI usage. These guidelines help you navigate challenges like data privacy, transparency, and accountability. For example, organizations are increasingly adopting frameworks that require regular bias audits and human oversight, ensuring that AI systems remain aligned with societal values.

Generative AI also opens the door to public engagement in AI development. By involving communities in the design process, you can ensure that AI systems address real-world needs and concerns. This participatory approach builds trust and empowers users to take an active role in shaping the future of AI.

Finally, the evolution of generative AI will likely lead to global standards for bias mitigation. These standards would provide a unified framework for addressing bias across industries, making it easier for you to implement ethical practices. As noted by Analytics Vidhya, the development of sophisticated mitigation techniques will play a pivotal role in achieving this goal.

“Mitigating bias effectively hinges on promoting fairness and ethics within AI systems,” as emphasized by thought leaders in the field.

By embracing these long-term implications, you help pave the way for a future where AI systems benefit everyone, regardless of their background.

The future of generative AI in reducing bias is bright, but it requires your active participation. By staying informed about emerging trends, fostering collaboration, and prioritizing ethical practices, you can create AI systems that reflect the diversity and inclusivity of the world we live in.

Generative AI empowers you to create more balanced, less biased datasets by addressing underrepresentation and systemic bias. It doesn’t just stop at identifying gaps; it actively generates diverse data to support fairer decision-making. However, your role remains vital. Combining generative AI with human oversight promotes ethical outcomes and helps prevent unintended consequences. Oversight helps you validate the data and align it with real-world values. By adopting these tools and techniques, you can enhance your data analytics processes while fostering inclusivity and transparency. Start leveraging generative AI today to build a fairer, more equitable future.

FAQ

What is bias in generative AI, and why should you care about it?

Bias in generative AI happens when the system produces content that unfairly favors or disadvantages certain groups. You should care because biased AI can lead to discriminatory outcomes, harm individuals, or perpetuate stereotypes. For example, if an AI model generates job descriptions that subtly favor one gender, it could limit opportunities for others. Understanding and addressing bias ensures fairness and inclusivity in AI applications.

How does generative AI help reduce bias in datasets?

Generative AI reduces bias by creating synthetic data that fills gaps in representation. It identifies underrepresented groups in your dataset and generates new data points to balance the distribution. For instance, if your dataset lacks diversity in age or ethnicity, generative AI can create samples to ensure fairer outcomes. This process helps your AI models learn from a broader range of perspectives, improving accuracy and inclusivity.
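Before generating anything, it helps to work out how many synthetic records each group needs. The sketch below assumes one simple policy, topping every group up to the size of the largest one; the function name and age bands are hypothetical, and you might instead target real-world population shares.

```python
from collections import Counter


def synthetic_quota(group_labels):
    """Number of synthetic records each group needs to match the largest group."""
    counts = Counter(group_labels)
    largest = max(counts.values())
    return {group: largest - n for group, n in counts.items()}


# Hypothetical dataset skewed toward younger age bands.
labels = ["18-30"] * 500 + ["31-50"] * 350 + ["51+"] * 150
print(synthetic_quota(labels))
# → {'18-30': 0, '31-50': 150, '51+': 350}
```

The resulting quotas are what you would hand to a generator as per-group targets.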

How could generative AI impact the data analytics landscape?

Generative AI is transforming data analytics by automating the creation of diverse datasets. However, it can also reproduce biases found in the data it’s trained on, which means it may unintentionally perpetuate harm or misinformation if not carefully managed. On the positive side, when used responsibly, generative AI enhances fairness, improves decision-making, and opens up new possibilities for ethical data analysis.

What are the challenges in integrating generative AI?

Integrating generative AI comes with challenges, especially around ethics. These models can unintentionally produce biased or inappropriate content, raising concerns about responsible use. Another challenge is the technical expertise required to implement and monitor these systems effectively. You also need to ensure that the synthetic data aligns with real-world values and doesn’t introduce new biases.

Can generative AI completely eliminate bias in AI systems?

No, generative AI cannot completely eliminate bias, but it can significantly reduce it. Bias often stems from societal structures and historical inequalities, which AI alone cannot fix. Generative AI helps by addressing imbalances in datasets, but human oversight remains essential. You need to combine AI tools with ethical guidelines and regular audits to create fair and inclusive systems.

How can you ensure the fairness of synthetic data generated by AI?

To ensure fairness, you should validate and test the synthetic data regularly. Start by comparing it to your original dataset to check if it addresses gaps without introducing new biases. Use statistical tests to evaluate the distribution and involve domain experts for additional insights. Regular audits and human oversight help maintain the integrity of the data and ensure it aligns with ethical standards.

What industries benefit the most from generative AI in bias reduction?

Industries such as healthcare, recruitment, and marketing stand to benefit in particular. In healthcare, generative AI creates synthetic patient data to improve treatment for underrepresented groups. In recruitment, it balances hiring datasets to support fair evaluations. Marketing teams use it to design campaigns that resonate with diverse audiences. These applications show how generative AI promotes inclusivity across various fields.

Are there any risks of using generative AI for bias mitigation?

Yes, there are risks. Generative AI might unintentionally introduce new biases if the algorithms or training data are flawed. It can also produce synthetic data that misrepresents real-world scenarios, leading to inaccurate predictions. To mitigate these risks, you need to audit the data, validate its fairness, and involve human oversight throughout the process.

What tools can you use to reduce bias with generative AI?

Several tools help you reduce bias effectively. AI Fairness 360 by IBM offers algorithms to detect and mitigate bias in datasets. Fairlearn by Microsoft provides metrics and visualizations to improve fairness in machine learning models. Themis-ML specializes in identifying discrimination and correcting it. These are auditing and mitigation toolkits rather than data generators, so pair them with a generative model such as a GAN or VAE: generate the data, then use these tools to check it.
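To give a feel for the kind of metric these toolkits report, here is a hand-rolled sketch of demographic parity difference, the gap between the highest and lowest rate of positive predictions across groups. Fairlearn exposes a metric by that name, but the code below is not the library’s implementation; the function bodies and the sample predictions are hypothetical.

```python
def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model output: 1 = shortlisted, 0 = rejected.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["m", "m", "m", "m", "f", "f", "f", "f"]

print(selection_rates(preds, groups))                # → {'m': 0.75, 'f': 0.25}
print(demographic_parity_difference(preds, groups))  # → 0.5
```

Computing this before and after you add synthetic data shows whether the rebalancing actually narrowed the gap.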

How can you start using generative AI to address bias in your data?

Begin by conducting a bias audit of your existing datasets. Identify gaps or patterns that favor certain groups. Choose the right generative AI tools, such as GANs or VAEs, to generate synthetic data that fills these gaps. Validate the data regularly to ensure fairness and accuracy. Finally, involve domain experts and follow ethical guidelines to align your efforts with real-world values.
