Mastering AI Governance with These Essential Principles

AI Governance plays a pivotal role in shaping the future of artificial intelligence. You need it to ensure ethical practices and prevent unintended consequences. Recent developments, like the creation of AI safety institutes and the EU AI Office, show that global efforts to manage AI risks are accelerating. These measures build trust and encourage responsible innovation. By mastering governance, you can safeguard systems, protect users, and meet growing demands for skilled professionals in this field.

Key Takeaways

AI governance is essential for using AI fairly. It reduces risks and helps people trust AI systems.

Transparency builds trust. Document each stage of your AI system's lifecycle so others can understand how it works.

Fairness work helps prevent biased or discriminatory outcomes. Use fairness metrics and tools, and work with domain experts to achieve equitable results.

Define clear roles at every stage of AI development. Clear ownership helps teams fix problems quickly and encourages responsibility.

Monitor and update AI systems continuously so they keep performing well. Use monitoring tools to track performance and adjust as needed.

Understanding AI Governance

What Is AI Governance?

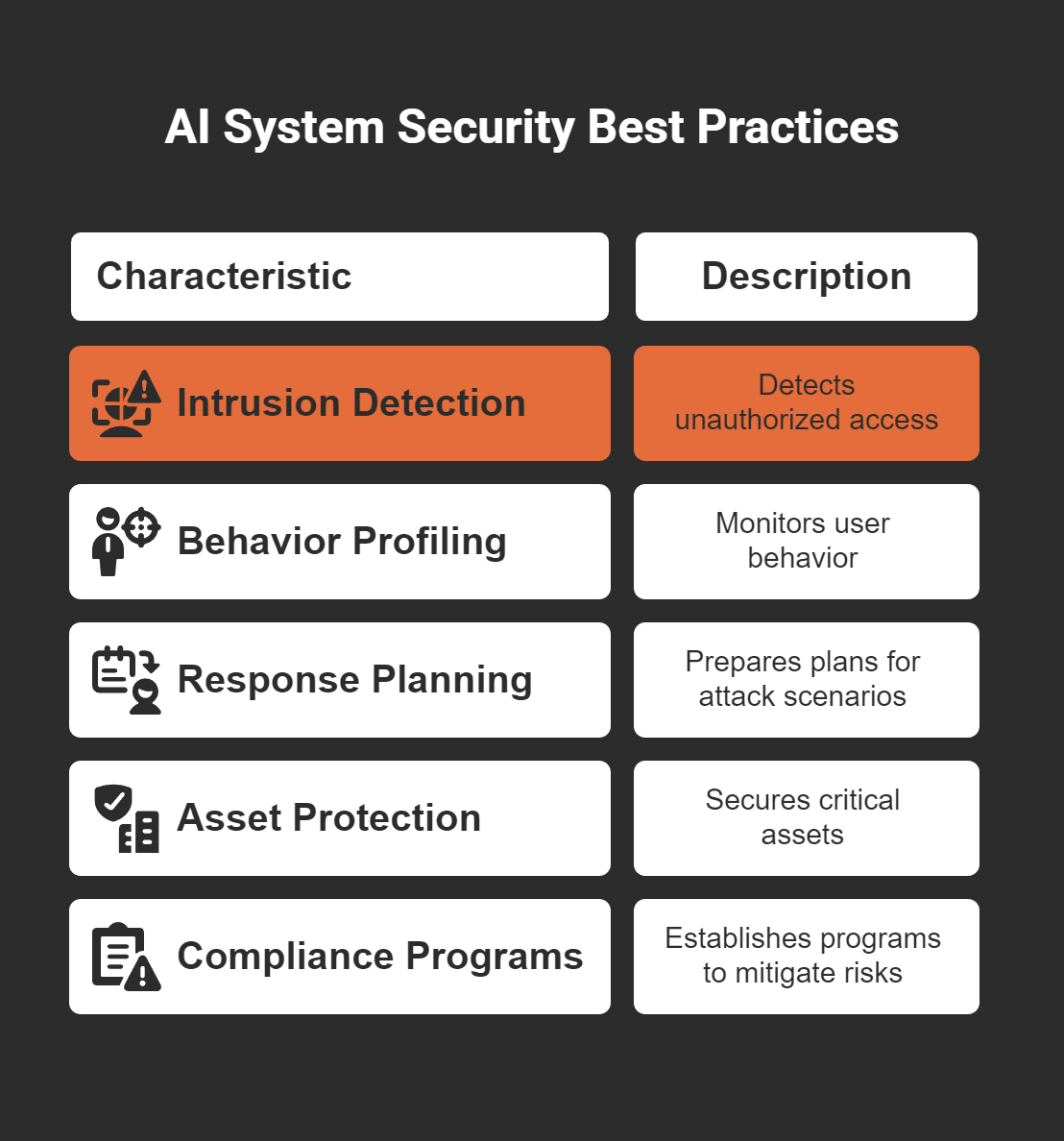

AI governance refers to the system of laws, policies, and practices that guide the responsible use of artificial intelligence. It ensures that AI technologies are developed and deployed ethically, transparently, and safely. For example, the US Federal Reserve's SR 11-7 guidance on model risk management expects banks to maintain an inventory of their models, including AI models, and to oversee the risks those models pose. Similarly, Canada's Directive on Automated Decision-Making requires federal government institutions to ensure transparency and accountability in AI-driven decisions. These frameworks illustrate how governance helps regulate AI systems across sectors.

Why AI Governance Is Critical in Today’s World

The rapid adoption of AI tools has surpassed the pace of early cloud computing, creating an urgent need for governance. Without proper oversight, AI systems can pose risks such as systemic failures, malicious use, and loss of human control. For instance, fragmented incident tracking mechanisms make it difficult to address emergent AI capabilities effectively. Additionally, the potential for AI exploitation by malicious actors highlights the importance of accountability and mandatory reporting. By establishing robust governance frameworks, you can mitigate these risks and ensure AI systems remain safe and trustworthy.

Benefits of AI Governance for Organizations and Society

AI governance offers numerous advantages. For organizations, it enhances transparency and accountability, especially in high-risk sectors like finance and healthcare. Explainable AI (XAI), for instance, builds trust by making decision-making processes clear and justifiable. Governance also helps organizations comply with regulatory standards, reducing legal and reputational risks. On a societal level, governance promotes ethical AI use, protecting user rights and preventing harm. Some organizations also use blockchain to create tamper-resistant records of AI decisions, which can further strengthen accountability. By adopting governance practices, you contribute to a safer and more equitable technological landscape.

Core Principles of AI Governance

Transparency: Making AI Systems Understandable

Transparency ensures that AI systems operate in a way that is clear and understandable to users, stakeholders, and regulators. When you make AI systems transparent, you allow others to see how decisions are made, which builds trust and reduces the risk of misuse. For example, explainable AI (XAI) tools can help you understand why an AI model made a specific decision, whether it's approving a loan or diagnosing a medical condition. These tools break down complex algorithms into simpler, human-readable explanations.

To achieve transparency, you should document every stage of your AI system's lifecycle. This includes detailing the data sources, training processes, and decision-making logic. By doing so, you create a roadmap that others can follow to verify the system's integrity. Transparency also involves using tools that make AI models interpretable. For instance, visualization techniques can show how different inputs influence an AI's output, making it easier to identify potential errors or biases.
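A partial dependence plot is one such visualization. It shows how a model's average prediction changes as one input varies, while every other input keeps its observed value. The Python sketch below computes the numbers behind such a plot. The `loan_score` model and the two applicants are hypothetical. A real system would pass its own trained model's prediction function instead.

```python
from typing import Callable, Dict, List, Sequence

Row = Dict[str, float]


def partial_dependence(
    model: Callable[[Row], float],
    data: Sequence[Row],
    feature: str,
    grid: Sequence[float],
) -> List[float]:
    """Average model output when `feature` is set to each value in `grid`.

    Each row keeps its other features unchanged; only `feature` is
    overwritten (on a copy), and the resulting predictions are averaged.
    """
    curve = []
    for value in grid:
        preds = [model({**row, feature: value}) for row in data]
        curve.append(sum(preds) / len(preds))
    return curve


# Hypothetical scoring model and applicants, for illustration only.
def loan_score(row: Row) -> float:
    return 0.5 * row["income"] - 0.3 * row["debt"]


applicants = [{"income": 4.0, "debt": 1.0}, {"income": 6.0, "debt": 3.0}]

if __name__ == "__main__":
    grid = [2.0, 4.0, 6.0]
    for x, y in zip(grid, partial_dependence(loan_score, applicants, "income", grid)):
        print(f"income={x}: average score={y:.2f}")
```

To get the partial dependence plot, chart `grid` against the returned values with any plotting library. A flat curve suggests the feature has little average influence on the output.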

Tip: Transparency is not just about technical clarity. It also involves clear communication with non-technical stakeholders, ensuring everyone understands the system's purpose and limitations.

Fairness: Addressing Bias and Promoting Equity

Fairness in AI governance focuses on eliminating bias and ensuring equitable outcomes for all users. AI systems often reflect the biases present in their training data, which can lead to discriminatory outcomes. For example, hiring algorithms have been known to favor male candidates over female ones, while lending systems have disproportionately rejected applications from minority groups. These issues highlight the importance of fairness in AI governance.

To address bias, you can use fairness metrics such as:

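Demographic Parity: Requires that positive outcomes (for example, loan approvals) occur at the same rate across demographic groups.

Equal Opportunity: Requires equal true positive rates across groups, so qualified individuals have the same chance of receiving a positive outcome regardless of their background.

Equalized Odds: Requires both true positive and false positive rates to match across groups. Equivalently, false positive and false negative rates are balanced. This is especially important in areas like criminal justice and healthcare.

Disparate Impact Analysis: Compares selection rates between groups, often as a ratio, to reveal whether a model disproportionately affects certain groups and uncover hidden biases. A ratio below 0.8, the "four-fifths rule" from US employment guidelines, is a common warning sign.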
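These metrics can be computed directly from a model's predictions. The following is a minimal Python sketch. It assumes binary labels and predictions (1 = positive outcome) and one group label per person; the data in the usage example is hypothetical.

- Each gap metric is the largest difference between any two groups, so 0 means perfect parity.
- The disparate impact ratio divides the lowest selection rate by the highest.
- For simplicity, a group with no actual positives (or negatives) gets a rate of 0.0 rather than being excluded.

```python
from typing import Dict, Sequence


def _rate(values: Sequence[int]) -> float:
    """Share of 1s in a list of 0/1 predictions (0.0 for an empty list)."""
    return sum(values) / len(values) if values else 0.0


def group_rates(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[str]
) -> Dict[str, Dict[str, float]]:
    """Selection rate, true positive rate, and false positive rate per group."""
    rates = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        rates[g] = {
            "selection": _rate([y_pred[i] for i in idx]),
            "tpr": _rate([y_pred[i] for i in idx if y_true[i] == 1]),
            "fpr": _rate([y_pred[i] for i in idx if y_true[i] == 0]),
        }
    return rates


def fairness_report(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[str]
) -> Dict[str, float]:
    """Largest between-group gap for each metric, plus the disparate impact ratio."""
    rates = group_rates(y_true, y_pred, groups)

    def gap(key: str) -> float:
        values = [r[key] for r in rates.values()]
        return max(values) - min(values)

    selection = [r["selection"] for r in rates.values()]
    return {
        "demographic_parity_diff": gap("selection"),
        "equal_opportunity_diff": gap("tpr"),
        "equalized_odds_diff": max(gap("tpr"), gap("fpr")),
        "disparate_impact_ratio": min(selection) / max(selection) if max(selection) > 0 else 1.0,
    }


if __name__ == "__main__":
    # Hypothetical labels, predictions, and group memberships.
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A"] * 4 + ["B"] * 4
    report = fairness_report(y_true, y_pred, groups)
    print(report)
    print("four-fifths warning:", report["disparate_impact_ratio"] < 0.8)
```

For production assessments, established libraries such as AI Fairness 360 or Fairlearn provide tested versions of these metrics. A sketch like this is mainly useful for understanding what those libraries report.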

Collaboration between technical teams and domain experts is essential for implementing these fairness metrics effectively. For instance, domain experts can help interpret fairness results in the context of specific industries, ensuring that the metrics align with real-world needs. Continuous monitoring and iterative improvements further enhance fairness, allowing you to adapt to changing societal expectations.

Note: Fairness is not a one-time effort. It requires ongoing evaluation and adjustments to ensure your AI systems remain equitable over time.

Accountability: Assigning Responsibility for AI Outcomes

Accountability ensures that someone is responsible for the outcomes of AI systems, whether they are positive or negative. Without clear accountability, it becomes difficult to address issues like biased decisions or system failures. For example, the "many hands" problem arises when multiple actors contribute to an AI system, making it unclear who should be held accountable for its actions. This lack of clarity can lead to accountability gaps, where no one takes responsibility, or accountability surpluses, where too many parties are blamed.

To establish accountability, you should define roles and responsibilities at every stage of your AI system's lifecycle. This includes identifying who is responsible for data collection, model training, deployment, and monitoring. Case studies have shown that biased training data and programmer errors often lead to negative outcomes, emphasizing the need for clear accountability structures. By assigning specific responsibilities, you can ensure that issues are addressed promptly and effectively.
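One way to make these responsibilities checkable is to record a single accountable owner for each lifecycle stage. A simple check can then flag the two failure modes of the "many hands" problem: stages with no owner (accountability gaps) and stages with several (accountability surpluses). This Python sketch assumes the four stages named in the text, and the team names are hypothetical. The one-owner-per-stage rule is a design choice similar to the "Accountable" role in a RACI matrix.

```python
from typing import Dict, List

# Lifecycle stages named in the text; adjust to your own process.
LIFECYCLE_STAGES = ["data_collection", "model_training", "deployment", "monitoring"]


def accountability_issues(owners: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Find stages with no accountable owner (gaps) or several (surpluses).

    `owners` maps each stage to the people or teams accountable for it.
    Many people may contribute to a stage, but exactly one should be accountable.
    """
    gaps = [s for s in LIFECYCLE_STAGES if not owners.get(s)]
    surpluses = [s for s in LIFECYCLE_STAGES if len(owners.get(s, [])) > 1]
    return {"gaps": gaps, "surpluses": surpluses}


if __name__ == "__main__":
    # Hypothetical team names.
    owners = {
        "data_collection": ["data-platform-team"],
        "model_training": ["ml-team", "external-vendor"],
        "deployment": ["ml-ops-team"],
    }
    print(accountability_issues(owners))
    # {'gaps': ['monitoring'], 'surpluses': ['model_training']}
```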

Additionally, you can use tools like audit logs to track changes and decisions made within your AI system. These logs create a transparent record of actions, making it easier to identify and correct errors. Regular audits and reviews further strengthen accountability, ensuring that your AI systems comply with ethical and regulatory standards.
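An audit log is most useful when entries cannot be silently edited. A common technique for this is hash chaining: each entry stores a hash of the previous one, so altering an earlier record breaks the chain. The Python sketch below is a minimal in-memory illustration, and the actor names and actions are hypothetical. A production log would also need durable storage and a trusted copy of the latest hash, because anyone able to rewrite the whole chain could recompute every hash.

```python
import hashlib
import json
import time
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log in which each entry records the hash of the previous entry."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    @staticmethod
    def _digest(entry: Dict[str, Any]) -> str:
        """SHA-256 over every field except the stored hash itself."""
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, actor: str, action: str, details: Dict[str, Any]) -> Dict[str, Any]:
        """Record who did what, linked to the previous entry."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "timestamp": time.time(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if any entry was modified, removed, or reordered."""
        prev = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("alice", "retrain_model", {"model": "credit-risk", "dataset": "v7"})
    log.append("bob", "approve_deployment", {"model": "credit-risk"})
    print(log.verify())  # True
    log.entries[0]["details"]["dataset"] = "v8"  # simulate tampering
    print(log.verify())  # False
```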

Tip: Accountability is not just about assigning blame. It’s about creating a culture of responsibility where everyone involved in AI development and deployment works toward ethical and effective outcomes.

Privacy: Protecting User Data and Rights

Protecting user data and rights is a cornerstone of responsible AI governance. As AI systems increasingly rely on vast amounts of personal data, the risks to privacy grow with them. High-profile data breaches involving AI have exposed vulnerabilities that jeopardize sensitive information. These incidents highlight the urgent need for robust privacy measures to safeguard user trust and prevent misuse.

Consider these alarming statistics:

40% of organizations have experienced an AI-related privacy breach.

57% of global consumers view AI's role in data collection as a significant threat to their privacy.

70% of US adults express little to no trust in companies to make responsible decisions about AI usage.

91% of organizations acknowledge the need to reassure customers about how their data is used.

To address these concerns, you should prioritize privacy by design. This approach embeds privacy protections into every stage of AI development. For example, anonymizing datasets can reduce the risk of exposing personal information. Encryption techniques further enhance security by ensuring that only authorized parties can access sensitive data. Additionally, implementing user consent mechanisms allows individuals to control how their data is collected and used.
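Here is a minimal sketch of privacy by design at the data-preparation stage. The Python code below does three things:

- drops records from users who have not consented;
- removes direct identifiers;
- replaces each user ID with a keyed hash (HMAC-SHA256).

The field names, the consent set, and the placeholder key are hypothetical. This is pseudonymization rather than full anonymization: whoever holds the key can still link records, and under the GDPR pseudonymized data remains personal data.

```python
import hashlib
import hmac
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical placeholder; load the real key from a secrets manager, never from source code.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace an identifier with a keyed hash, so the same user maps to the same token."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_training_rows(
    records: Iterable[Dict[str, str]],
    consented_users: Set[str],
    direct_identifiers: Tuple[str, ...] = ("name", "email"),
) -> List[Dict[str, str]]:
    """Keep only consented users, drop direct identifiers, and pseudonymize user IDs."""
    rows = []
    for record in records:
        if record["user_id"] not in consented_users:
            continue  # honor consent: skip users who have not opted in
        row = {k: v for k, v in record.items() if k not in direct_identifiers}
        row["user_id"] = pseudonymize(record["user_id"])
        rows.append(row)
    return rows
```

Using a keyed hash instead of a plain hash matters here. Without the key, an attacker cannot simply hash a list of known user IDs and match them against the dataset.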

Transparency also plays a vital role in protecting privacy. By clearly communicating how your AI systems handle data, you can build trust with users and stakeholders. Regular audits and compliance with data protection regulations, such as GDPR or CCPA, demonstrate your commitment to safeguarding user rights. These steps not only protect individuals but also strengthen the integrity of your AI systems.

Tip: Always evaluate your AI systems for potential privacy risks. Regular updates and monitoring can help you stay ahead of emerging threats.

Security: Safeguarding AI Systems from Threats

AI systems face a wide range of security threats, from data theft to adversarial attacks. Safeguarding these systems is essential to ensure their reliability and protect them from malicious actors. Without proper security measures, AI systems can become vulnerable, leading to compromised data, disrupted operations, or even harmful outcomes.

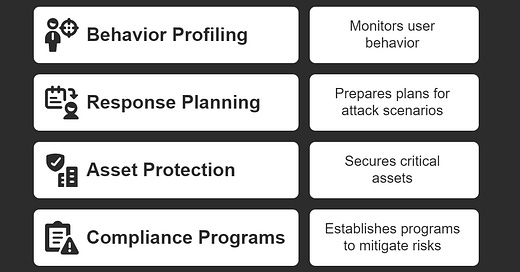

Effective security strategies focus on both prevention and response. For instance, intrusion detection systems can identify unauthorized access to datasets and models, enabling you to respond swiftly to potential breaches. Behavior profiling helps monitor user activity, detecting patterns that may signal an impending attack. These proactive measures reduce the likelihood of security incidents and enhance system resilience.
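Behavior profiling can be as simple as comparing each account's current activity with its own history. The Python sketch below flags accounts whose request count exceeds their historical mean by more than `k` standard deviations. It is a statistical baseline, not a full intrusion detection system. The account names, the threshold `k = 3`, the standard-deviation floor, and the choice to flag accounts with no history are all illustrative assumptions.

```python
import statistics
from typing import Dict, List


def flag_unusual_activity(
    history: Dict[str, List[int]], today: Dict[str, int], k: float = 3.0
) -> List[str]:
    """Return accounts whose activity today is far above their own baseline.

    An account is flagged when today's count exceeds mean + k * stdev of its
    history. Accounts with fewer than two past observations have no baseline
    and are flagged for manual review.
    """
    flagged = []
    for account, count in today.items():
        past = history.get(account, [])
        if len(past) < 2:
            flagged.append(account)
            continue
        mean = statistics.mean(past)
        # Floor the spread at 1 so a perfectly steady account is not flagged for tiny changes.
        spread = max(statistics.stdev(past), 1.0)
        if count > mean + k * spread:
            flagged.append(account)
    return sorted(flagged)


if __name__ == "__main__":
    # Hypothetical daily request counts per account.
    history = {"svc-reporting": [100, 110, 95, 105], "analyst-7": [20, 22, 19, 21]}
    today = {"svc-reporting": 108, "analyst-7": 400, "new-account": 5}
    print(flag_unusual_activity(history, today))  # ['analyst-7', 'new-account']
```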

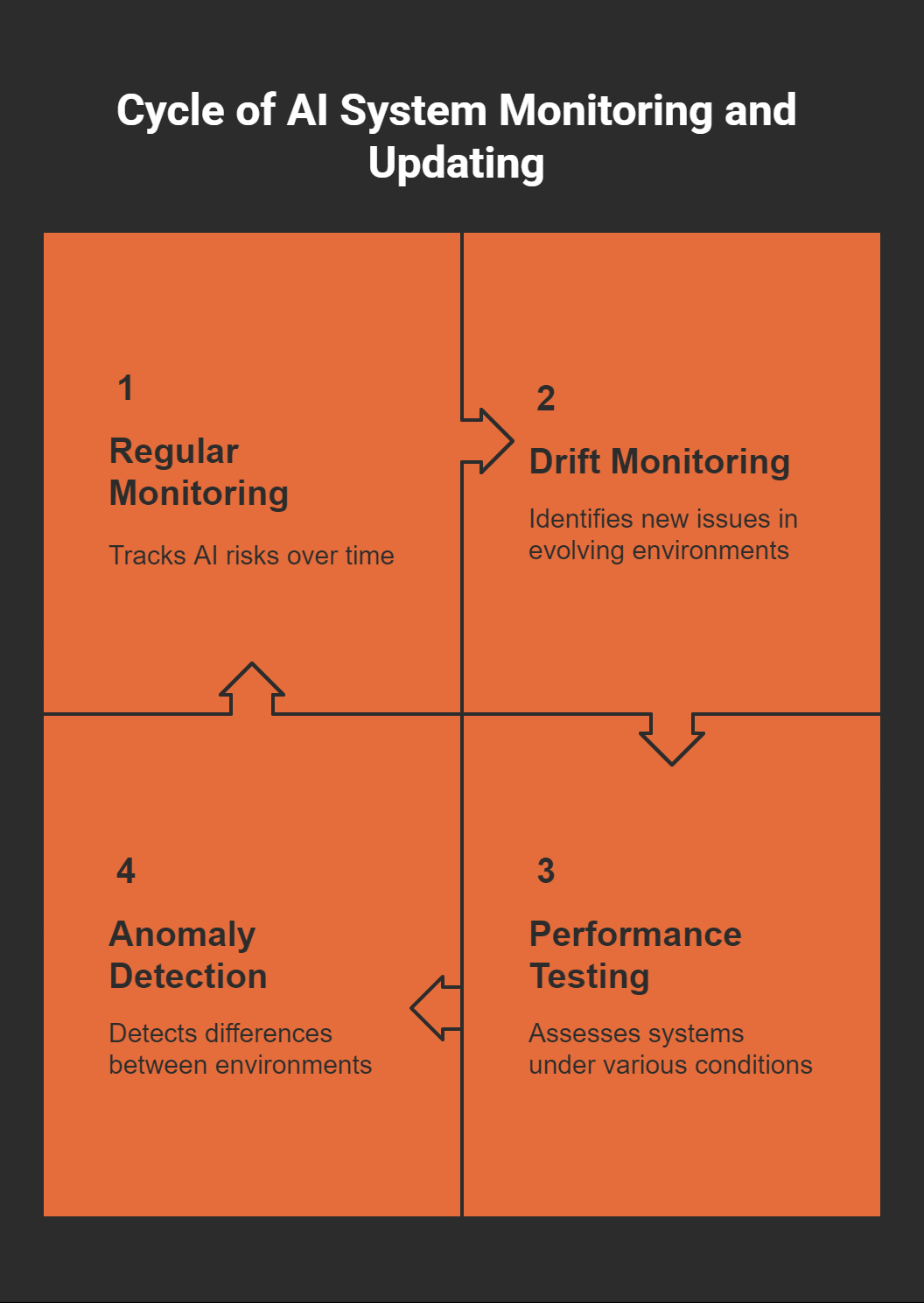

Here are some best practices for securing AI systems:

You should also consider the role of encryption and access controls in protecting AI systems. Encrypting sensitive data ensures that even if attackers gain access, they cannot exploit the information. Access controls limit who can interact with your AI models, reducing the risk of internal and external threats.
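Encryption is best left to a vetted cryptography library, but access control can be sketched in a few lines. The Python example below implements deny-by-default, role-based access control for model operations. The roles and permission strings are hypothetical. A real deployment would load the policy from an identity provider or policy engine rather than hard-coding it.

```python
from typing import Dict, Set

# Hypothetical policy mapping roles to the model operations they may perform.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "data_scientist": {"model:predict", "model:read_metrics"},
    "ml_engineer": {"model:predict", "model:read_metrics", "model:deploy"},
    "auditor": {"model:read_metrics", "audit:read"},
}


def is_allowed(user_roles: Set[str], permission: str) -> bool:
    """Deny by default: allow only if at least one of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


def require(user_roles: Set[str], permission: str) -> None:
    """Raise PermissionError unless the user holds the permission."""
    if not is_allowed(user_roles, permission):
        raise PermissionError(f"missing permission: {permission}")


if __name__ == "__main__":
    require({"ml_engineer"}, "model:deploy")  # passes silently
    try:
        require({"data_scientist"}, "model:deploy")
    except PermissionError as err:
        print(err)  # missing permission: model:deploy
```

Unknown roles receive no permissions at all. As a result, a typo in a role name fails closed rather than granting access.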

Regular security audits and penetration testing further strengthen your defenses. These practices help identify vulnerabilities before attackers can exploit them. By staying proactive, you can ensure that your AI systems remain secure and trustworthy.

Note: Security is an ongoing process. Continuously update your systems to address new threats and maintain robust defenses.

Implementing AI Governance in Practice

Establishing Governance Committees and Frameworks

Creating governance committees and frameworks is essential for managing AI systems effectively. These committees bring together experts from diverse fields, including technology, ethics, and law, to oversee AI operations. By establishing clear roles and responsibilities, you ensure that every aspect of AI governance is addressed. For example, the EU AI Act provides a structured framework that prohibits harmful AI practices and enforces transparency. Similarly, IBM's AI Ethics Board has been instrumental in aligning AI products with ethical standards since 2019.

To implement governance frameworks, you can start by defining objectives and milestones. A roadmap helps you outline priorities, timelines, and responsibilities. Collaborating with stakeholders such as executives, IT teams, and legal advisors ensures alignment across your organization. Choosing the right governance model, whether centralized, decentralized, or hybrid, further enhances efficiency.

Here’s a table showcasing examples of existing governance committees and frameworks:

Tip: Start small by testing governance frameworks on pilot projects before scaling them across your organization.

Conducting Regular Audits and Bias Assessments

Regular audits and bias assessments are critical for maintaining the integrity of AI systems. These practices help you identify risks, ensure compliance, and align AI systems with organizational goals. For instance, conducting risk assessments allows you to uncover potential issues, while reviewing governance infrastructure ensures oversight mechanisms are functioning effectively.

Bias assessments play a key role in promoting fairness. By analyzing AI systems for disparate impacts, you can identify and address hidden biases. Metrics like demographic parity and equalized odds provide measurable ways to evaluate fairness. Collaboration with domain experts enhances the accuracy of these assessments, ensuring they align with industry-specific needs.

Here’s a table summarizing common objectives of audits and bias assessments:

Note: Regular audits not only identify vulnerabilities but also build trust by demonstrating your commitment to responsible AI practices.

Developing Ethical AI Guidelines and Policies

Ethical guidelines and policies form the backbone of responsible AI governance. These documents outline the principles and standards that guide AI development and deployment. By creating clear policies, you ensure that AI systems align with societal values and organizational goals. For example, the OECD AI Principles emphasize transparency, fairness, and accountability, serving as a global benchmark for ethical AI practices.

To develop effective guidelines, start by defining a governance roadmap. This roadmap should include objectives, timelines, and responsibilities. Engaging stakeholders ensures that ethical considerations are integrated into every stage of AI development. Deploying risk management strategies further strengthens your policies, addressing issues like bias, security, and compliance.

Here’s a step-by-step strategy for implementing ethical AI guidelines:

Define a governance roadmap with clear milestones.

Engage key stakeholders to align priorities.

Choose a governance model suited to your organization.

Deploy risk management strategies to address bias and security.

Continuously update policies to adapt to new technologies.

Educate employees on the benefits of ethical AI practices.

Test guidelines on pilot projects before broader application.

Standardize processes for consistency across the organization.

Learn from successful case studies to refine your approach.

Invest in training programs to build expertise in ethical AI governance.

Future-proof frameworks to adapt to evolving regulations.

Tip: Ethical guidelines should evolve alongside AI technologies. Regular updates ensure your policies remain relevant and effective.

Creating Comprehensive Documentation for AI Systems

Comprehensive documentation is essential for ensuring the transparency and reliability of AI systems. It provides a clear record of how systems are designed, trained, and deployed, making it easier for stakeholders to understand their functionality and compliance with regulations. When you document AI systems thoroughly, you create a foundation for effective governance and risk management.

Here are some ways documentation improves transparency and compliance:

It supports risk management by helping organizations identify potential failure modes.

It includes artifacts like datasheets and model cards, which explain how systems meet organizational or legal requirements.

To create effective documentation, focus on the following elements:

Datasheets for Datasets: These describe the origin, structure, and intended use of the data. They help you ensure that the data aligns with ethical and legal standards.

Model Cards: These outline the purpose, limitations, and performance metrics of AI models. They provide stakeholders with insights into how models operate and where they might fall short.

Decision Logs: These track changes made during the development and deployment phases. Decision logs allow you to trace the reasoning behind modifications, ensuring accountability.

User Manuals: These explain how to interact with AI systems safely and effectively. Clear instructions reduce the risk of misuse and improve user experience.
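Standardized templates are easy to enforce when the template is code. The Python sketch below defines a minimal model card as a dataclass and reports any empty fields, so an incomplete card can fail review before release. The field names are illustrative assumptions, not an official model card schema. Every field, including `metrics`, is treated as required.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class ModelCard:
    """Minimal model card template; the field names are illustrative, not a standard schema."""

    model_name: str
    version: str
    intended_use: str
    limitations: List[str]
    training_data: str  # pointer to the datasheet describing the training dataset
    metrics: Dict[str, float] = field(default_factory=dict)

    def missing_fields(self) -> List[str]:
        """Names of fields left empty; every field is treated as required."""
        return [name for name, value in asdict(self).items() if value in ("", [], {})]

    def to_json(self) -> str:
        """Serialize the card so it can be stored or published alongside the model."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


if __name__ == "__main__":
    # Hypothetical model details.
    card = ModelCard(
        model_name="credit-risk",
        version="2.1",
        intended_use="Pre-screening of loan applications with human review",
        limitations=[],
        training_data="datasheet: loan-applications-2023",
    )
    print(card.missing_fields())  # ['limitations', 'metrics']
```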

When documenting AI systems, consistency is key. Use standardized templates to ensure all records follow the same format. This makes it easier for stakeholders to review and compare information. Regular updates to documentation also keep it relevant as systems evolve.

Tip: Treat documentation as a living resource. Update it frequently to reflect changes in technology, regulations, and organizational goals.

Monitoring and Updating AI Systems Continuously

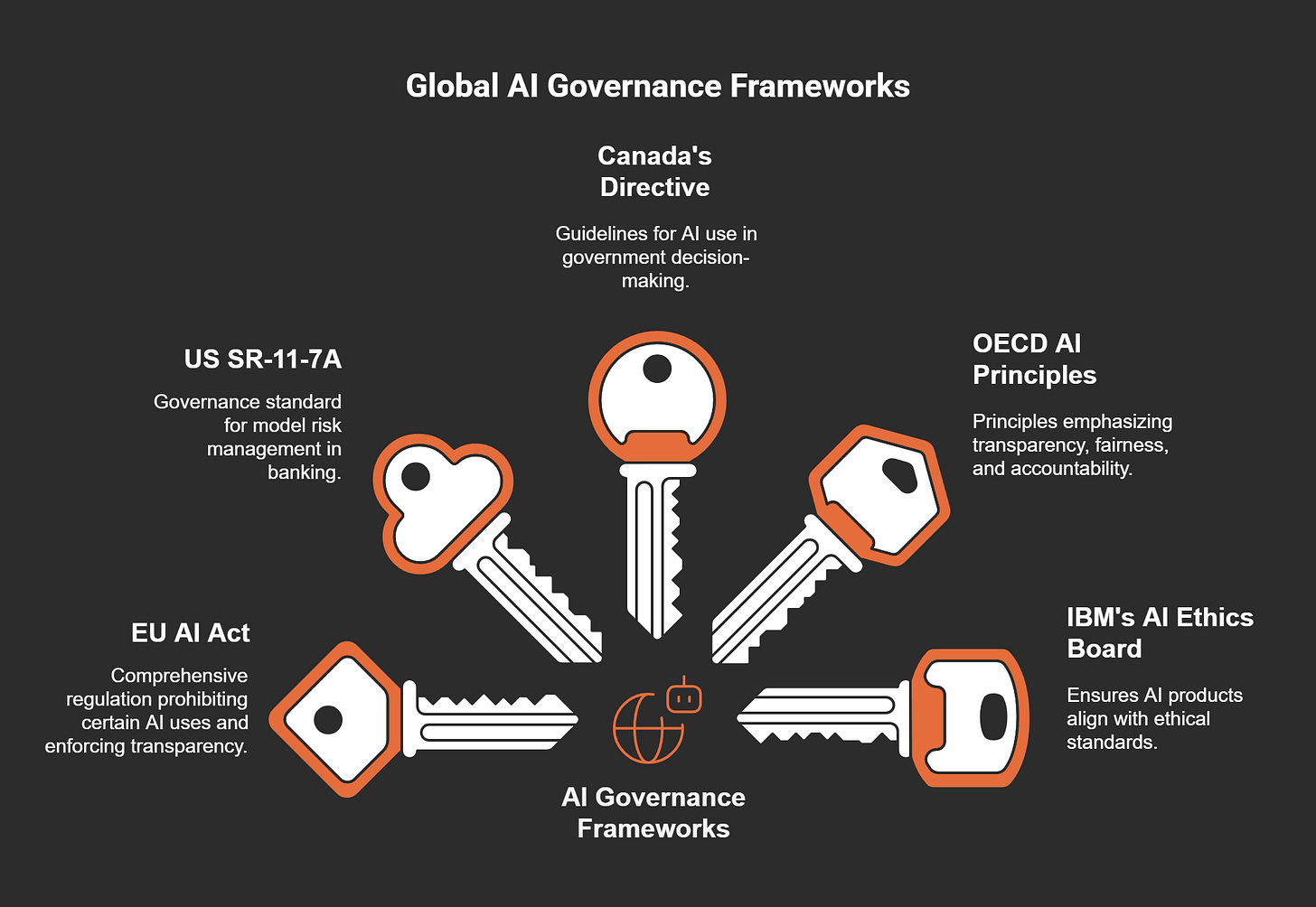

Continuous monitoring and updating are vital for maintaining the robustness and reliability of AI systems. Monitoring allows you to track system performance, identify risks, and address issues before they escalate. Regular updates ensure that AI systems adapt to changing environments and remain effective over time.

Here’s a table summarizing proven monitoring techniques and their benefits:

To implement effective monitoring, start by setting clear performance benchmarks. These benchmarks help you measure whether your AI systems are meeting expectations. Use automated tools to monitor metrics like accuracy, response time, and error rates. Automated systems reduce the workload and provide real-time insights into system behavior.
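As an illustration of automated monitoring, the Python sketch below does two things:

- computes the population stability index (PSI), a widely used drift measure, between a feature's binned distribution at training time and in production;
- checks live metrics against benchmark ranges.

The bin proportions, metric names, and limits in the usage example are hypothetical. A commonly cited rule of thumb treats a PSI below 0.1 as little shift, 0.1 to 0.25 as moderate, and above 0.25 as significant. Thresholds should still be set per system.

```python
import math
from typing import Dict, Sequence, Tuple


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6
) -> float:
    """PSI between two binned distributions given as per-bin proportions.

    PSI = sum over bins of (actual - expected) * ln(actual / expected).
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid division by zero and log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


def check_benchmarks(
    metrics: Dict[str, float], limits: Dict[str, Tuple[float, float]]
) -> Dict[str, bool]:
    """True for each metric that falls inside its (minimum, maximum) range."""
    return {name: low <= metrics[name] <= high for name, (low, high) in limits.items()}


if __name__ == "__main__":
    training_bins = [0.25, 0.25, 0.25, 0.25]
    production_bins = [0.10, 0.20, 0.30, 0.40]
    print(f"PSI = {population_stability_index(training_bins, production_bins):.3f}")
    print(check_benchmarks(
        {"accuracy": 0.91, "p95_latency_ms": 340.0, "error_rate": 0.03},
        {"accuracy": (0.90, 1.0), "p95_latency_ms": (0.0, 300.0), "error_rate": (0.0, 0.05)},
    ))
```

In this example the PSI comes out at roughly 0.23, a moderate shift under the rule of thumb above. The latency check fails because 340 ms exceeds the 300 ms limit.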

Updating AI systems involves more than just fixing bugs. It includes retraining models with new data, refining algorithms, and incorporating feedback from users. Schedule updates at regular intervals to ensure systems stay aligned with organizational goals and regulatory requirements.

Note: Continuous monitoring and updates not only improve system performance but also build trust by demonstrating your commitment to responsible AI governance.

Tools and Resources for AI Governance

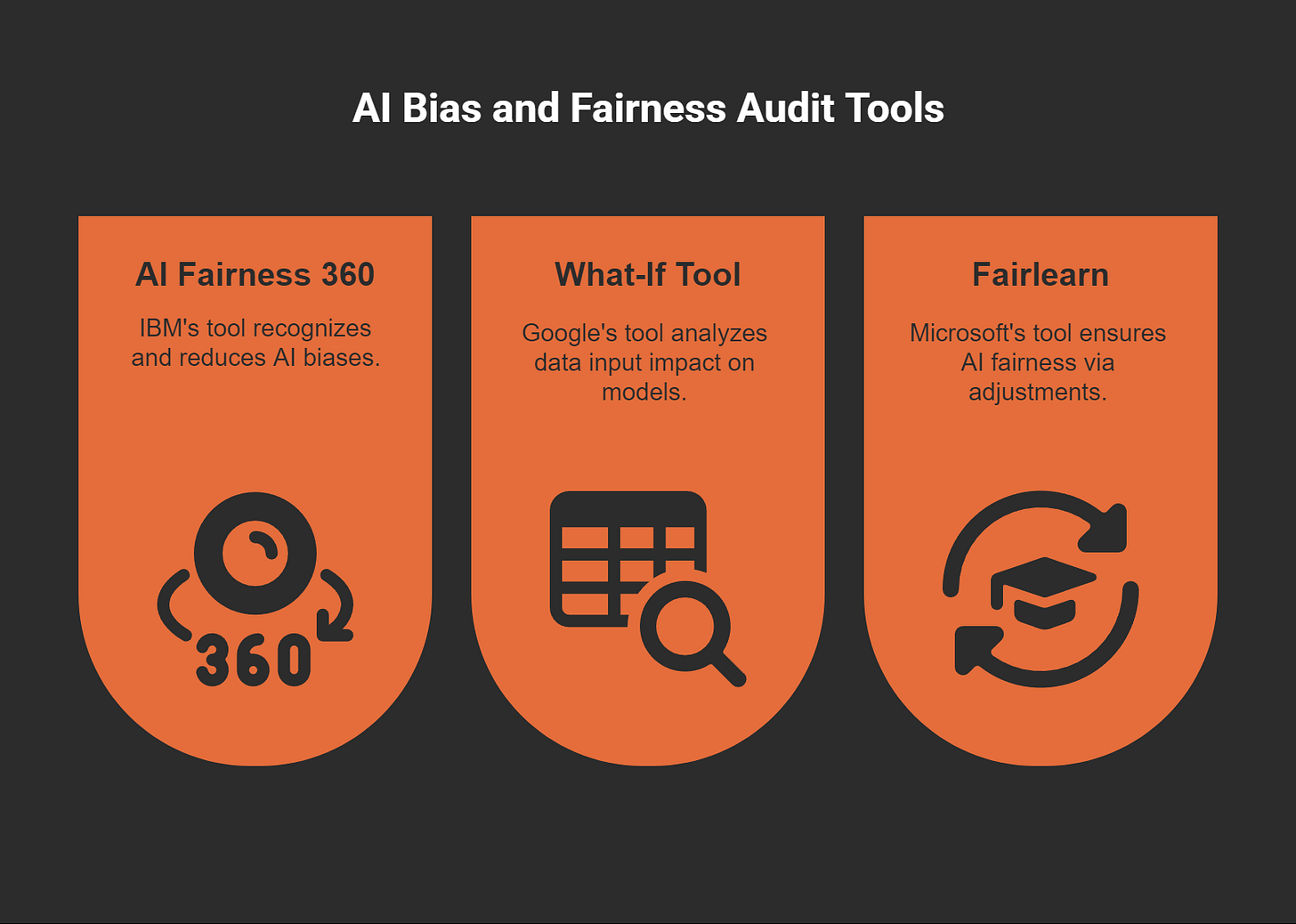

Audit Tools for Bias and Fairness

Audit tools play a crucial role in identifying and mitigating biases in AI systems. These tools help you ensure fairness by analyzing how AI models treat different groups and suggesting adjustments to improve equity. For example, IBM's AI Fairness 360 provides a comprehensive suite of metrics and algorithms to detect and reduce biases. Google's What-If Tool allows you to explore how changes in data inputs affect model outcomes, offering actionable insights for improving fairness. Microsoft's Fairlearn offers fairness assessment metrics and mitigation algorithms, including reduction methods that reweight training data, to align AI models with fairness goals.
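Re-weighting can be illustrated with the reweighing technique of Kamiran and Calders, the idea behind AI Fairness 360's `Reweighing` preprocessor. Each training example gets the weight P(group) * P(label) / P(group, label), which makes group membership and label statistically independent in the weighted data. The Python sketch below is a minimal standalone version, not either library's API. The groups and labels in the usage example are hypothetical.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def reweighing_weights(groups: Sequence[Hashable], labels: Sequence[int]) -> List[float]:
    """Weight each example by P(group) * P(label) / P(group, label).

    Combinations that are rarer than independence would predict (for example,
    positive labels in a disadvantaged group) get weights above 1; overrepresented
    combinations get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # (g/n) * (y/n) / (gy/n) simplifies to g * y / (n * gy).
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


if __name__ == "__main__":
    # Hypothetical historical decisions: group A was approved far more often than group B.
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    labels = [1, 1, 1, 0, 1, 0, 0, 0]
    print([round(w, 2) for w in reweighing_weights(groups, labels)])
    # [0.67, 0.67, 0.67, 2.0, 2.0, 0.67, 0.67, 0.67]
```

The resulting weights can be passed as per-example sample weights to any training routine that supports them, as many scikit-learn estimators do through `fit(..., sample_weight=...)`.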

Using these tools well requires a socio-technical perspective, because metric values mean little without the context in which a model is deployed. Shared tools also encourage standardized auditing methodologies, making it easier for firms and regulators to address biases consistently.

Tip: Regularly auditing your AI systems with these tools can help you build trust and ensure compliance with fairness standards.

Transparency Tools for Explainable AI

Transparency tools make AI systems more understandable by breaking down complex algorithms into simpler, human-readable explanations. These tools allow you to see how decisions are made, which builds trust and reduces the risk of misuse. For instance, SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are widely used to explain individual predictions made by AI models. They highlight which features influenced a decision, helping you identify potential errors or biases.
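SHAP builds on Shapley values from game theory: a feature's attribution is its average marginal contribution across all subsets of the other features. The Python sketch below computes exact Shapley values by brute force for a small model. It assumes a single baseline input stands in for "absent" features. The SHAP library instead averages over background data and uses much faster approximations, so treat this as an illustration of the idea rather than its implementation. The loan model in the usage example is hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, Set

Features = Dict[str, float]


def shapley_values(
    model: Callable[[Features], float], instance: Features, baseline: Features
) -> Dict[str, float]:
    """Exact Shapley attributions for one prediction, by enumerating coalitions.

    A coalition's value is the model output when features in the coalition take
    the instance's values and all other features take the baseline's values.
    The cost grows as 2**n, so this is only practical for a handful of features.
    """
    names = list(instance)
    n = len(names)

    def value(coalition: Set[str]) -> float:
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in names}
        return model(x)

    attributions = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        attributions[f] = total
    return attributions


if __name__ == "__main__":
    # Hypothetical linear loan-scoring model.
    def loan_score(x: Features) -> float:
        return 0.5 * x["income"] - 0.3 * x["debt"]

    phi = shapley_values(loan_score, {"income": 6.0, "debt": 3.0}, {"income": 4.0, "debt": 2.0})
    print({k: round(v, 3) for k, v in phi.items()})  # {'income': 1.0, 'debt': -0.3}
```

For a linear model, each attribution equals the feature's coefficient times its difference from the baseline. The attributions always sum to the gap between the instance's output and the baseline's output.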

Visualization tools also enhance transparency. They allow you to explore how different inputs affect AI outputs, making it easier to understand the system’s behavior. By using these tools, you can ensure that your AI systems remain interpretable and aligned with ethical standards.

Note: Transparency tools not only improve accountability but also help non-technical stakeholders understand AI systems better.

Privacy-Enhancing Technologies

Privacy-enhancing technologies (PETs) protect user data while preserving much of the analytical value of AI systems. They rely on methods such as data anonymization, differential privacy, and federated learning:

- Anonymization identifies and masks or removes sensitive information, reducing privacy risk while retaining much of the data's utility.
- Differential privacy lets you extract aggregate insights from sensitive data, with mathematical guarantees that limit what can be learned about any individual.
- Federated learning trains AI models on decentralized data, so raw information does not need to be shared with a central server.
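Differential privacy can be illustrated with the Laplace mechanism on a counting query. Adding or removing one person changes a count by at most 1. Adding Laplace noise with scale 1/epsilon therefore makes the released count epsilon-differentially private. The Python sketch below samples that noise as the difference of two exponential draws. It is for illustration only: Python's `random` module is not a secure randomness source, and production systems should rely on a vetted differential privacy library. The epsilon value and data in the usage example are hypothetical.

```python
import random
from typing import Optional, Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale) sample: the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    flags: Sequence[bool], epsilon: float, rng: Optional[random.Random] = None
) -> float:
    """Epsilon-differentially private count of True values.

    A count has sensitivity 1 (one person changes it by at most 1),
    so Laplace noise with scale 1 / epsilon is sufficient.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    return sum(flags) + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical data: which of 100 users have a given medical condition.
    has_condition = [True] * 30 + [False] * 70
    print(private_count(has_condition, epsilon=0.5))
```

Smaller epsilon values add more noise and give stronger privacy. Each additional query on the same data consumes more of the overall privacy budget.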

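These methods address limitations of traditional privacy-protection techniques such as simple de-identification, which can sometimes be reversed by linking records with other datasets. By integrating PETs into your AI systems, you can safeguard user trust and support compliance with data protection regulations.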

Tip: Always evaluate your AI systems for privacy risks and update them with the latest PETs to stay ahead of emerging threats.

Open-Source Frameworks and Standards

Open-source frameworks and standards provide you with essential tools to implement effective AI governance. These resources help you align your AI systems with ethical principles, ensuring transparency, fairness, and accountability. By adopting open-source solutions, you gain access to community-driven innovations that promote collaboration and continuous improvement.

One of the most impactful standards is ISO/IEC 42001, the first AI management system standard. It offers a structured approach to managing AI opportunities and risks ethically. This standard helps you establish governance practices that align with global expectations. Another valuable resource is ISO/IEC 23894:2023, which focuses on risk management for AI. It provides recommendations to integrate risk management into your AI activities, ensuring your systems remain secure and reliable.

To address algorithmic bias, you can rely on IEEE's Standard for Algorithmic Bias Considerations, developed as IEEE P7003. This standard outlines methodologies for identifying and mitigating bias in AI algorithms. By applying it, you promote equity and openness in your AI development processes.

Here’s a quick overview of these standards:

Open-source frameworks like TensorFlow and PyTorch also play a critical role. They let you build and test AI models, and companion libraries such as Fairness Indicators for TensorFlow and Captum for PyTorch add governance features like fairness evaluation and explainability. For example, TensorFlow's Model Card Toolkit helps you document model performance and limitations, enhancing transparency.

Tip: Start by exploring one framework or standard that aligns with your organization’s goals. Gradually expand your toolkit as your governance needs evolve.

By leveraging these open-source resources, you can create AI systems that are not only innovative but also responsible and trustworthy.

Building a Culture of Responsible AI

Training Teams on AI Ethics and Best Practices

Training your team on AI ethics and best practices is essential for fostering responsible AI use. A well-designed training program enhances AI literacy and equips participants with the skills to address ethical challenges. These programs often include modules on responsible AI practices, ethical considerations, and the use of large language models (LLMs). Participants also learn design thinking techniques to solve AI-related problems effectively.

A foundational program typically covers:

Essential AI knowledge and data literacy.

Responsible AI practices and ethical decision-making.

Practical applications of LLMs in real-world scenarios.

A 2024 industry survey revealed that 85% of senior marketing professionals who completed foundational AI training saw significant improvements in campaign performance. Within six months, they reported better lead generation quality and higher marketing ROI. This demonstrates how training can directly impact organizational success.

Tip: Regularly update training modules to reflect advancements in AI technology and evolving ethical standards.

Encouraging Cross-Departmental Collaboration

Cross-departmental collaboration strengthens AI governance by integrating diverse perspectives. When teams from different departments work together, they bring unique insights that reduce the risk of bias in AI systems. Collaborative efforts also ensure that AI tools align with governance standards and remain user-friendly.

Benefits of cross-functional collaboration include:

Reduced bias through diverse viewpoints.

Development of reliable and accessible AI systems.

For example, organizations that encourage collaboration often find it easier to implement governance frameworks. By involving stakeholders from various departments, you create a unified approach to AI governance that aligns with organizational goals.

Note: Use AI tools to facilitate communication and streamline collaborative processes across teams.

Engaging Stakeholders in Governance Discussions

Engaging stakeholders in governance discussions ensures that AI systems align with ethical principles and organizational objectives. Stakeholders, including executives, legal advisors, and technical teams, play a critical role in shaping governance policies. Their involvement fosters transparency and accountability, which are key to building trust.

Organizations that prioritize stakeholder engagement often achieve measurable outcomes. For instance:

These examples highlight how stakeholder involvement drives innovation and compliance. By including stakeholders in governance discussions, you ensure that AI systems meet ethical standards and deliver value to users.

Tip: Schedule regular meetings with stakeholders to review governance policies and address emerging challenges.

Promoting Transparency and Open Communication

Transparency and open communication are essential for building trust in AI governance. When you share information openly, you create an environment where stakeholders feel informed and valued. This approach not only fosters collaboration but also ensures that your AI systems align with ethical and organizational goals.

To promote transparency, start by sharing clear and accessible documentation about your AI systems. Include details about how the systems work, their limitations, and the data they use. For example, publishing model cards or datasheets for datasets can help stakeholders understand the purpose and performance of your AI models. These documents make it easier for others to evaluate your systems and provide constructive feedback.

Open communication involves engaging with stakeholders regularly. You can organize workshops, webinars, or Q&A sessions to discuss your AI governance practices. These events allow you to address concerns, gather insights, and build consensus. For instance, inviting feedback from diverse groups ensures that your AI systems meet the needs of all users, not just a select few.

Tip: Use plain language when communicating with non-technical audiences. Avoid jargon to ensure everyone understands your message.

Here are some practical steps to enhance transparency and communication:

Create a centralized platform: Use tools like dashboards to share updates and metrics about your AI systems.

Encourage feedback loops: Provide channels for stakeholders to share their thoughts and concerns.

Publish regular reports: Share progress on AI governance initiatives to keep everyone informed.

By prioritizing transparency and open communication, you demonstrate your commitment to responsible AI practices. This approach not only strengthens trust but also helps you identify and address potential issues early.

AI governance is essential for ensuring ethical, transparent, and secure AI systems. It helps you mitigate risks, build trust, and align AI technologies with societal values. Practical steps like conducting audits, implementing ethical guidelines, and leveraging tools such as fairness metrics and privacy-enhancing technologies make governance achievable.

Start small by focusing on one principle, such as transparency or fairness. Gradually expand your efforts as your expertise grows. This approach ensures steady progress while maintaining manageable goals.

Tip: Begin with pilot projects to test your governance strategies before scaling them across your organization.

FAQ

What is the first step to implementing AI governance in your organization?

Start by forming a governance committee. This team should include experts from technology, ethics, and legal fields. Define clear objectives and create a roadmap for implementation. Pilot projects can help you test frameworks before scaling them across your organization.

Tip: Begin with small, manageable goals to build momentum.

How can you ensure fairness in AI systems?

Use fairness metrics like demographic parity or equalized odds to evaluate your AI models. Collaborate with domain experts to interpret results and address biases. Regularly monitor and update your systems to adapt to societal changes and maintain equitable outcomes.

Note: Fairness requires continuous effort, not a one-time fix.

What tools can help you improve AI transparency?

Tools like SHAP and LIME explain AI decisions by highlighting influential factors. Visualization tools also help you understand how inputs affect outputs. These resources make your AI systems more interpretable and trustworthy.

Example: Use SHAP to identify which features influenced a loan approval decision.

How do privacy-enhancing technologies protect user data?

Technologies like data anonymization, differential privacy, and federated learning safeguard sensitive information. They allow you to analyze data without exposing personal details. These methods reduce risks and help you comply with privacy regulations.

Tip: Regularly evaluate your systems for privacy risks and update them with the latest technologies.

Why is continuous monitoring important for AI governance?

Continuous monitoring tracks system performance and identifies risks early. It ensures your AI systems remain accurate, reliable, and aligned with organizational goals. Techniques like drift monitoring and anomaly detection help you address issues before they escalate.

Reminder: Regular updates keep your systems effective and compliant.