Why Explainable AI Is Essential for Transparent Machine Learning Predictions

Every day, you interact with algorithms that shape your opportunities, from job applications to financial approvals. When a machine makes a decision about you, you want to know the reason behind it. Black box models can also miss key risks, as when statistical models fail to capture new threats in financial markets. Without explanations, you lose control and cannot check whether a decision was fair. Explainable AI brings transparency, allowing you to see why a decision was made. This builds trust and gives you the power to challenge or improve outcomes.

Key Takeaways

Explainable AI reveals how machine learning models make decisions, helping you understand and trust their outcomes.

Transparency in AI prevents hidden biases and errors, promoting fairness and accountability in important areas like hiring, finance, and healthcare.

Tools like LIME and SHAP break down complex AI decisions into simple explanations you can use to question and improve results.

Clear AI explanations build your confidence, letting you challenge unfair decisions and make better choices.

Demanding explainable AI supports ethical progress and helps create fairer, safer, and more trustworthy technology for everyone.

The Black Box Problem

Complexity in Machine Learning

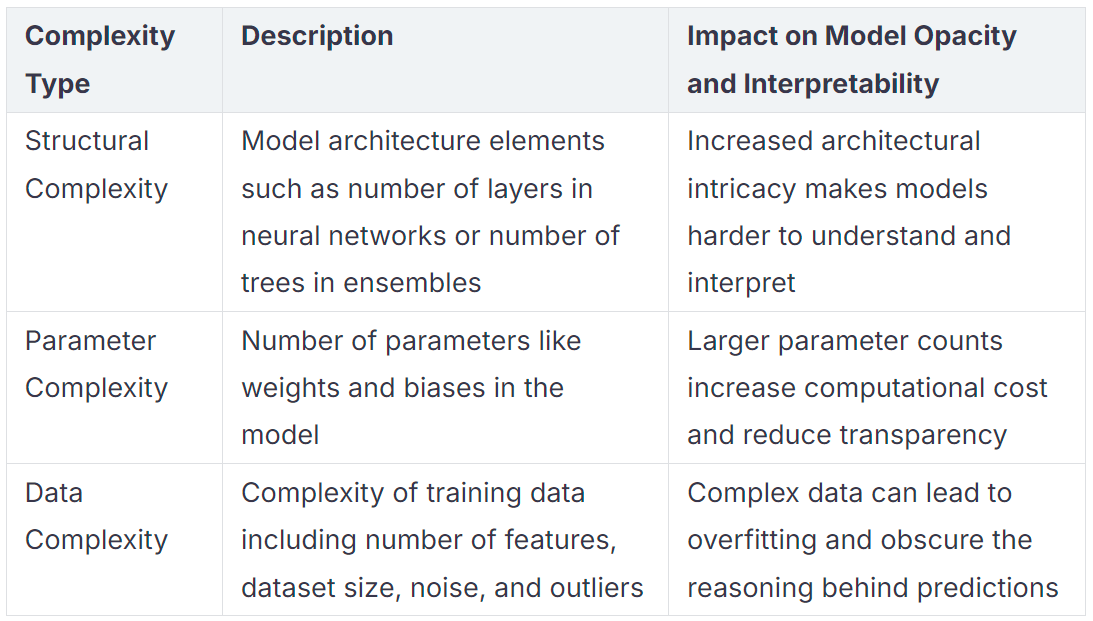

When you use machine learning models, you often face a challenge: understanding how they reach a decision. These models can look like black boxes because of their complexity. You might wonder why even experts struggle to explain the decision-making process. The answer lies in the structure, the number of parameters, and the data used in the process.

Here is a table that breaks down the main sources of complexity:

As you can see, each type of complexity adds another layer to the decision-making process. The more complex the model, the harder it becomes to trace the path from input to decision. This makes it difficult for you to understand or trust the process behind the outcome.

Real-World Consequences

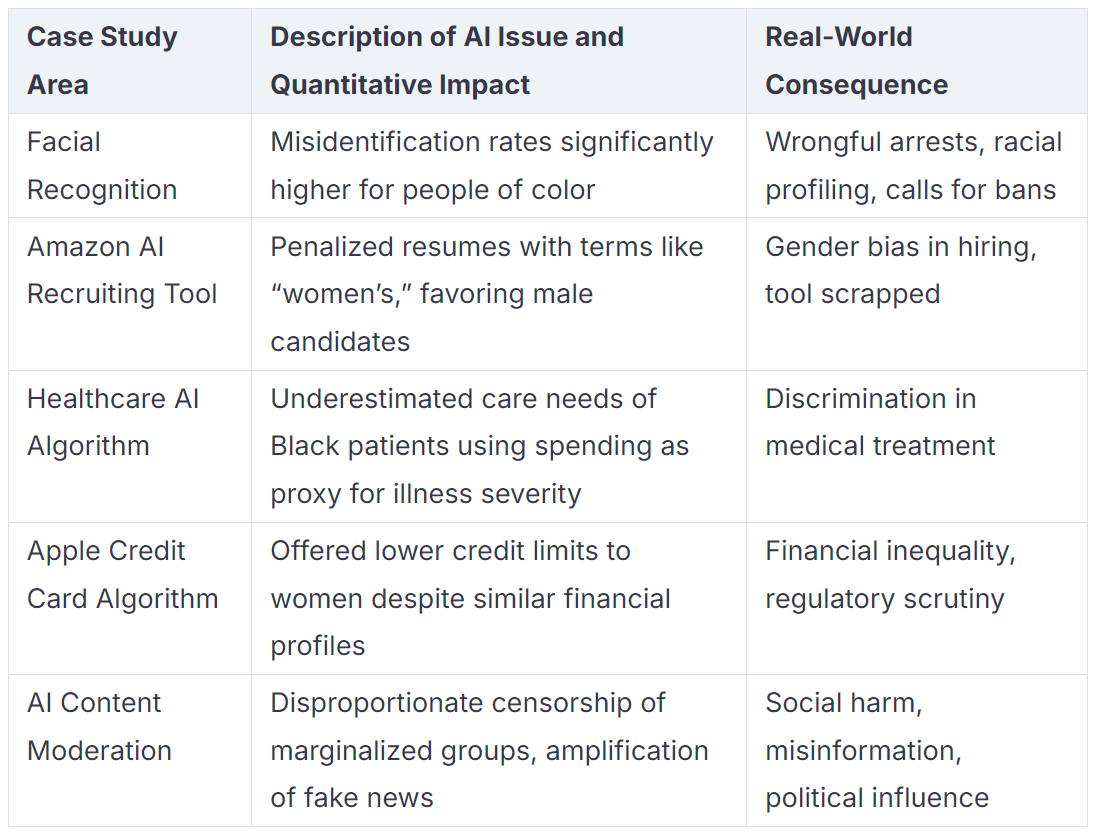

When you cannot see how a machine learning model makes a decision, real problems can arise. The decision-making process can hide mistakes or biases that affect your life. For example, facial recognition systems have shown higher misidentification rates for people of color. This has led to wrongful arrests and calls for bans. In hiring, an AI recruiting tool once penalized resumes containing words like “women’s,” which favored male candidates. The tool reproduced gender bias in its rankings, and the company scrapped it.

Here are some real-world examples:

These cases show that when the decision-making process stays hidden, the model can cause harm. You might face unfair treatment, financial loss, or even social harm. Understanding the process behind each decision helps you spot errors and demand fairness. When you know how a model works, you can trust its decision and hold it accountable.

Explainable AI and Transparency

What Is Explainable AI?

You often hear about artificial intelligence making decisions in areas like healthcare, finance, and hiring. But how do you know why a model made a certain choice? Explainable AI gives you the answer. It is not just a technical tool. It is a bridge between the complex logic of machine learning models and your need to understand their reasoning. The main goal of explainable AI is to make the decision-making process clear and meaningful for you.

When you use explainable AI, you get explanations that help you see the logic behind each decision. These explanations can come in many forms, such as natural language explanations, visual highlights, or even counterfactual explanations that show what would happen if you changed certain inputs. For example, if a model denies your loan application, an explainable system can show you which factors mattered most and what you could change to get a different result.
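A counterfactual explanation for the loan example can be sketched in a few lines. This is only an illustration: the scoring rule, the feature names (`income`, `open_debts`, `years_employed`), and the threshold are invented here and do not come from any real lender or library. The search changes one feature at a time until the decision flips, or reports that it cannot.

```python
def approve_loan(applicant):
    """Hypothetical scoring rule, invented for illustration only."""
    score = (
        applicant["income"] / 2000
        - applicant["open_debts"]
        + 2 * applicant["years_employed"]
    )
    return score >= 30


def find_counterfactual(applicant, feature, step, lower=0, max_steps=100):
    """Move one feature in fixed steps until the decision flips.

    Returns the first value that yields approval, or None when the value
    would drop below `lower` or no flip occurs within `max_steps`.
    """
    candidate = dict(applicant)  # copy so the original stays unchanged
    for _ in range(max_steps):
        candidate[feature] += step
        if candidate[feature] < lower:
            return None
        if approve_loan(candidate):
            return candidate[feature]
    return None


applicant = {"income": 40000, "open_debts": 5, "years_employed": 2}
print("approved:", approve_loan(applicant))
print("debts needed:", find_counterfactual(applicant, "open_debts", step=-1))
print("income needed:", find_counterfactual(applicant, "income", step=1000))
```

In this toy setup, clearing every debt is not enough to change the outcome, while a higher income is. That is the kind of actionable statement a counterfactual explanation aims to give.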

Researchers see explainable AI as a communication process. It is not enough for a model to be accurate. You need to understand and interpret its reasoning. Studies show that when you receive clear explanations, your confidence in AI decisions increases. In one user study, participants from different backgrounds used explainable AI methods like LIME and SHAP to interpret a model’s decision about mushroom edibility. The study found that well-designed explanations helped users understand complex decisions, especially when tailored to their needs. This shows that explainable AI can make even the most advanced models accessible and trustworthy.

Explainable AI also supports fairness and accountability. When you can see how a model makes decisions, you can check for bias and demand fair treatment. Surveys show that explanations improve your sense of fairness and trust in AI systems. This is especially important in high-stakes areas, where transparency is not just helpful but necessary.

Note: The core purpose of explainable AI is to turn the decision-making process into a dialogue. You do not just accept the answer; you understand the reasoning and can ask questions. This approach helps you work with AI, not just follow its orders.

Making Machine Learning Models Transparent

Making machine learning models transparent means you can see and understand how they work. This process uses explainable tools to break down the black box and reveal the steps behind each decision. You do not have to be a data scientist to benefit from this transparency. Tools like feature importance, LIME, and SHAP help you interpret the model’s logic in simple terms.

Let’s look at how these tools work:

Feature Importance: This tool shows you which features matter most in a model’s decision. For example, if a model predicts customer churn, feature importance might reveal that recent support calls and account age are the top factors. This helps you focus on what really drives the outcome.

LIME (Local Interpretable Model-Agnostic Explanations): LIME explains an individual decision by perturbing the input, observing how the output changes, and fitting a simple local model to those changes. Imagine you inspect a car door panel for defects. For a panel flagged as defective, LIME might attribute the prediction as follows, where a negative value means the feature lowers the chance of a defect:

Hairline crack near the window frame: +45% impact

Slight misalignment of the door handle: +25% impact

Minor paint discoloration on the lower edge: +15% impact

Correct placement of the company logo: -10% impact
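The perturb-and-fit idea behind a breakdown like the one above can be sketched without the LIME package. This is a LIME-style illustration under stated assumptions, not the library's implementation: `defect_score` is a hypothetical stand-in for the black-box model, its weights are copied from the list above, and all 16 on/off combinations are enumerated instead of randomly sampled as LIME does.

```python
import math
from itertools import product

FEATURES = ["hairline_crack", "handle_misaligned", "paint_discolored", "logo_correct"]


def defect_score(mask):
    """Hypothetical black-box scorer; mask[i] is 1 when feature i is present."""
    crack, handle, paint, logo = mask
    return 0.05 + 0.45 * crack + 0.25 * handle + 0.15 * paint - 0.10 * logo


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def explain(instance, kernel_width=0.75):
    """Fit a proximity-weighted linear surrogate around one instance."""
    n = len(instance)
    xtwx = [[0.0] * (n + 1) for _ in range(n + 1)]
    xtwy = [0.0] * (n + 1)
    for keep in product([0, 1], repeat=n):
        perturbed = [v * k for v, k in zip(instance, keep)]
        removed = sum(1 - k for k in keep) / n          # share of features switched off
        weight = math.exp(-removed / kernel_width ** 2)  # closer variants count more
        row = [1.0] + [float(k) for k in keep]           # intercept plus on/off flags
        y = defect_score(perturbed)
        for i in range(n + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(n + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coefficients = solve(xtwx, xtwy)
    return dict(zip(FEATURES, coefficients[1:]))  # drop the intercept


weights = explain([1, 1, 1, 1])
for name, weight in weights.items():
    print(f"{name}: {weight:+.2f}")
```

Because the toy scorer is itself additive, the surrogate recovers its weights almost exactly. With a real nonlinear model, the coefficients would only approximate its behavior near the inspected panel.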

This numerical breakdown lets you focus on the most important issues, saving time and improving quality. Aggregated explanations help you spot patterns, manage suppliers, and train both AI and human inspectors. These improvements lead to cost savings and higher customer confidence.
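Global feature importance, as in the churn example earlier, can be estimated in a similar perturb-and-observe spirit. The sketch below uses permutation importance, which is one common technique among several. The churn rule, the feature names, and the synthetic data are all assumptions made for illustration. The labels are the model's own outputs, so the result measures how much the model relies on each feature, not how well it predicts real churn.

```python
import random


def predict_churn(row):
    """Hypothetical black-box rule standing in for a trained churn model."""
    return 2 * row["support_calls"] - 0.1 * row["account_age_months"] > 5


def accuracy(rows, labels):
    """Share of rows where the model output matches the label."""
    hits = sum(predict_churn(row) == label for row, label in zip(rows, labels))
    return hits / len(rows)


def permutation_importance(rows, labels, feature, rng):
    """Accuracy drop after shuffling one feature column across rows."""
    baseline = accuracy(rows, labels)
    shuffled = [row[feature] for row in rows]
    rng.shuffle(shuffled)
    shuffled_rows = [dict(row, **{feature: value}) for row, value in zip(rows, shuffled)]
    return baseline - accuracy(shuffled_rows, labels)


rng = random.Random(0)  # fixed seed so repeated runs give the same numbers
rows = [
    {
        "support_calls": rng.randint(0, 9),
        "account_age_months": rng.randint(1, 60),
        "favorite_color": rng.randint(0, 4),  # deliberately irrelevant feature
    }
    for _ in range(200)
]
labels = [predict_churn(row) for row in rows]

importance = {name: permutation_importance(rows, labels, name, rng) for name in rows[0]}
for name, drop in sorted(importance.items(), key=lambda item: -item[1]):
    print(f"{name}: accuracy drop {drop:.2f}")
```

Shuffling a feature the model ignores leaves accuracy unchanged, so its importance is zero, while shuffling a feature the model depends on degrades accuracy.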

You can also use explainable AI to check for bias and ensure fairness. In medical imaging, researchers use SHAP to visualize how each feature affects a diagnosis. This process makes the model’s reasoning visible and helps reduce errors. Simulation studies show that using multiple data splits and feature importance statistics can reveal hidden biases and make machine learning models transparent. By combining these tools, you get a clear view of how the model works and where it might go wrong.
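SHAP builds on Shapley values, which can be computed exactly when a model has only a few features. The sketch below does this by averaging each feature's marginal contribution over every ordering. It is not the SHAP library, which uses approximations to scale to real models. The `risk_model` function and its feature names are hypothetical and chosen only to show how an interaction term is shared between features.

```python
from itertools import permutations


def risk_model(features):
    """Hypothetical diagnostic score with one three-way interaction term."""
    a = features["lesion_size"]
    b = features["contrast"]
    c = features["age_factor"]
    return 2 * a + 3 * b + a * b * c


def shapley_values(model, instance, baseline):
    """Exact Shapley values: average marginal contribution over all orderings."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)      # start from the reference input
        previous = model(current)
        for name in order:
            current[name] = instance[name]   # reveal one feature at a time
            value = model(current)
            totals[name] += value - previous
            previous = value
    return {name: total / len(orderings) for name, total in totals.items()}


instance = {"lesion_size": 1, "contrast": 1, "age_factor": 1}
baseline = {"lesion_size": 0, "contrast": 0, "age_factor": 0}
phi = shapley_values(risk_model, instance, baseline)
for name, value in phi.items():
    print(f"{name}: {value:.3f}")
```

The attributions add up to the difference between the model output for the instance and for the baseline, which is the property that makes this kind of breakdown easy to audit. The cost grows factorially with the number of features, so the exact version only suits very small models.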

A systematic framework helps you measure how well explainable AI reduces opacity. You can check for consistency, plausibility, fidelity, and usefulness. For example, in healthcare, experts use these criteria to judge if explanations match real-world knowledge and help improve patient care. This approach turns explainability into something you can measure and trust.
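Of these criteria, fidelity is the easiest to turn into a number. One simple way, assumed here for illustration and not taken from a specific framework, is to count how often an explanation's output stays within a tolerance of the model's output. Both functions below are hypothetical: a nonlinear "model" and a linear explanation fitted around the point x = 1.

```python
def black_box(x):
    """Hypothetical nonlinear model."""
    return x * x


def surrogate(x):
    """Hypothetical linear explanation: the tangent line of the model at x = 1."""
    return 2 * x - 1


def fidelity(model, explanation, points, tolerance):
    """Share of points where the explanation is within tolerance of the model."""
    close = sum(abs(model(p) - explanation(p)) <= tolerance for p in points)
    return close / len(points)


local_points = [0.8, 0.9, 1.0, 1.1, 1.2]
global_points = [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]

print("local fidelity:", fidelity(black_box, surrogate, local_points, tolerance=0.05))
print("global fidelity:", fidelity(black_box, surrogate, global_points, tolerance=0.05))
```

The explanation is faithful near the point it was built for and unreliable far away from it, which is why a fidelity score should always state the region it was measured on.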

Tip: When you use transparent AI models, you gain control over the decision-making process. You can ask, “Why did the model make this choice?” and get a clear, actionable answer.

Explainable AI does more than just explain. It builds a partnership between you and the technology. You become an active part of the process, able to question, improve, and trust the decisions that shape your life. As AI becomes more common, making machine learning models transparent will help you stay informed and empowered.

Building Trust with Explainability

User Confidence

You want to feel confident when you use technology that makes important decisions. Explainable AI helps you gain trust in these systems by making their reasoning clear. When you see how a model reaches its decision, you can judge if the process makes sense. This transparency turns a mysterious black box into a tool you can understand and use with confidence.

Surveys and studies show that explainable features can boost your trust, but only if the explanations are accurate and complete. For example:

In a survey of 30 clinicians who analyzed CT images, strong explanations were associated with higher trust ratings, although the difference was not statistically significant.

Thirteen clinicians said explanations helped them, but they wanted more detail and context, such as patient age or medical history.

Prior research found that you trust a model more when its explanations match the real reasoning and are easy to understand.

If you see poor or incomplete explanations, your trust drops. You may even lose faith in the system.

Early exposure to strong explanations helps you form a better impression of the model and its decisions.

You can see that explainability is not just about showing any explanation. The quality and context matter. When you get clear, precise, and relevant explanations, you feel more comfortable relying on AI for better decision making. You can ask questions, spot mistakes, and understand the logic behind each decision.

Tip: If you want to gain trust in AI, look for systems that offer detailed and context-rich explanations. These systems help you make better choices and feel more in control.

Accountability and Fairness

Explainable AI does more than build your confidence. It also supports accountability and fairness in every decision. When you understand how a model works, you can check if it treats everyone fairly. You can see if the model uses the right information and avoids hidden biases.
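A first check for unequal treatment can be as simple as comparing approval rates between groups. The sketch below is a minimal illustration with made-up decisions and placeholder group names. It computes one common fairness statistic, the ratio of the lowest to the highest selection rate, and it does not replace a full bias audit or the feature-level explanations discussed above.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Approval rate per group from (group, approved) pairs."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: approved / total for group, (approved, total) in counts.items()}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


# Made-up decisions: 60 of 100 approved in one group, 30 of 100 in the other.
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
```

A ratio well below 1 does not prove the model is unfair, but it is a signal to look at which features drive the gap.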

Transparency is key for trust and accountability. If a model denies your loan or job application, you deserve to know why. Explainable systems let you see the main factors behind each decision. This openness helps you challenge unfair outcomes and demand better practices.

Here is how explainable AI supports fairness and accountability:

You play an active role when you use explainable AI. You do not just accept decisions. You can ask for explanations, check for fairness, and help improve the system. This partnership leads to better decision making and a more just society.

Note: Explainability is the foundation of trust and accountability. When you understand the logic behind AI, you help ensure that technology serves everyone fairly.

Explainable AI gives you the tools to question, understand, and improve every decision. With transparency and clear explanations, you can trust AI to support your goals and values.

Transparency in AI Across Industries

Healthcare

You see transparency in AI making a real difference in healthcare. When doctors use AI to help diagnose diseases or recommend treatments, they need to understand how the system reaches its conclusions. Transparent AI models allow you to see which symptoms or test results matter most. This clarity helps doctors trust AI recommendations and improves patient outcomes. For example, in emergency care, explainable AI can show the cause-and-effect behind its advice, helping you act quickly and confidently. Transparent applications also help spot errors and biases, making healthcare safer for everyone.

Finance

In finance, transparency in AI is not just helpful; it is often a legal requirement. You rely on AI for credit scoring, fraud detection, and investment advice. Regulations such as the GDPR and the EU AI Act place transparency obligations on automated decisions such as credit scoring. If an automated system denies your loan application, you can ask for the main reasons behind the decision. Financial organizations use explainable AI tools like SHAP and LIME to help meet these rules. These applications help you understand risk assessments and portfolio choices. Transparent AI also protects your rights, supports fair lending, and builds trust between you and your bank.

Key regulations in finance require transparency, accountability, and fairness. Explainable AI ensures that financial applications remain unbiased and auditable.

Justice and Public Sector

Transparency in AI shapes justice and public sector applications in powerful ways. When governments use AI for criminal justice or public services, you need to know how decisions are made. Platforms that integrate data from different agencies improve transparency and help policymakers make better choices. For example, sharing anonymized justice data supports safer communities and protects privacy. Standards like the UK’s Algorithmic Transparency Recording Standard push agencies to document and explain their AI systems. When frontline workers understand AI decisions, they can serve you more effectively and fairly.

Real-life applications in justice and public services show that transparency in AI is essential for trust, accountability, and better outcomes.

The Future of Explainability

Responsible AI

You play a key role in shaping responsible AI. As you use AI systems, you expect them to make fair and transparent decisions. Responsible AI means balancing accuracy, transparency, fairness, and accountability. Explainability stands at the center of this balance. When you understand how a model works, you can trust the process and the outcome.

Researchers have built a strong foundation for responsible AI. Some important studies include:

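Ribeiro et al. (2016) introduced LIME, which explains an individual prediction of any classifier by approximating the model locally with a simpler, interpretable one.

Lundberg and Lee (2017) developed SHAP, which attributes a prediction to its input features using Shapley values from cooperative game theory.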

Rudin (2019) argued that you should use interpretable models for high-stakes decisions.

Doshi-Velez and Kim (2017) called for rigorous science in interpretable machine learning.

Gilpin et al. (2018) and Guidotti et al. (2018) surveyed methods for explaining black-box models.

These studies show that you need both interpretability and explainability. Some experts say you should use models that are easy to understand from the start. Others focus on tools that explain complex models after the fact. New frameworks, like System-of-Systems Machine Learning, aim to improve how well explanations match human thinking. This helps you align the AI process with your own values and needs.

When you demand responsible AI, you help create systems that respect your rights and support better decision making.

Ongoing Challenges

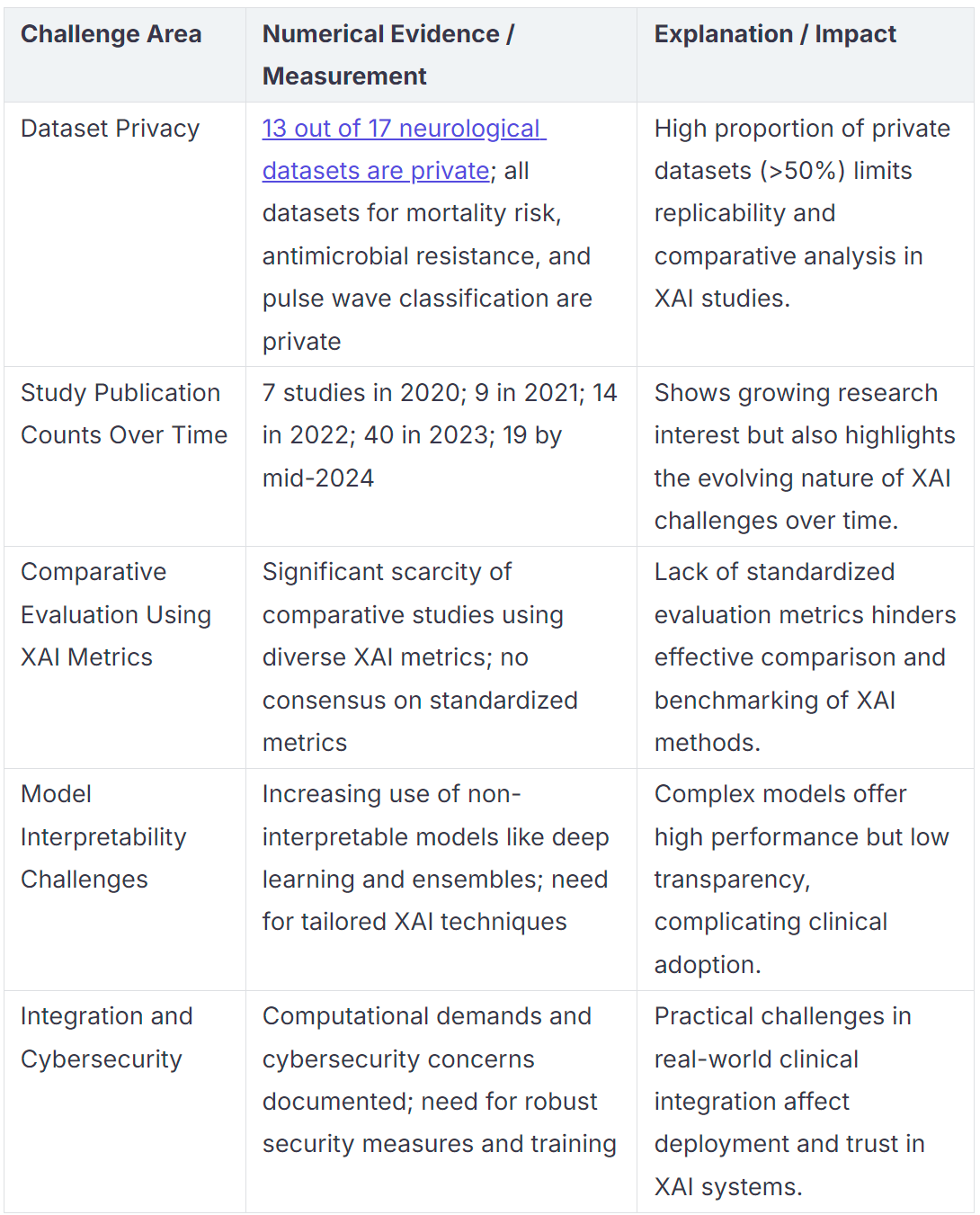

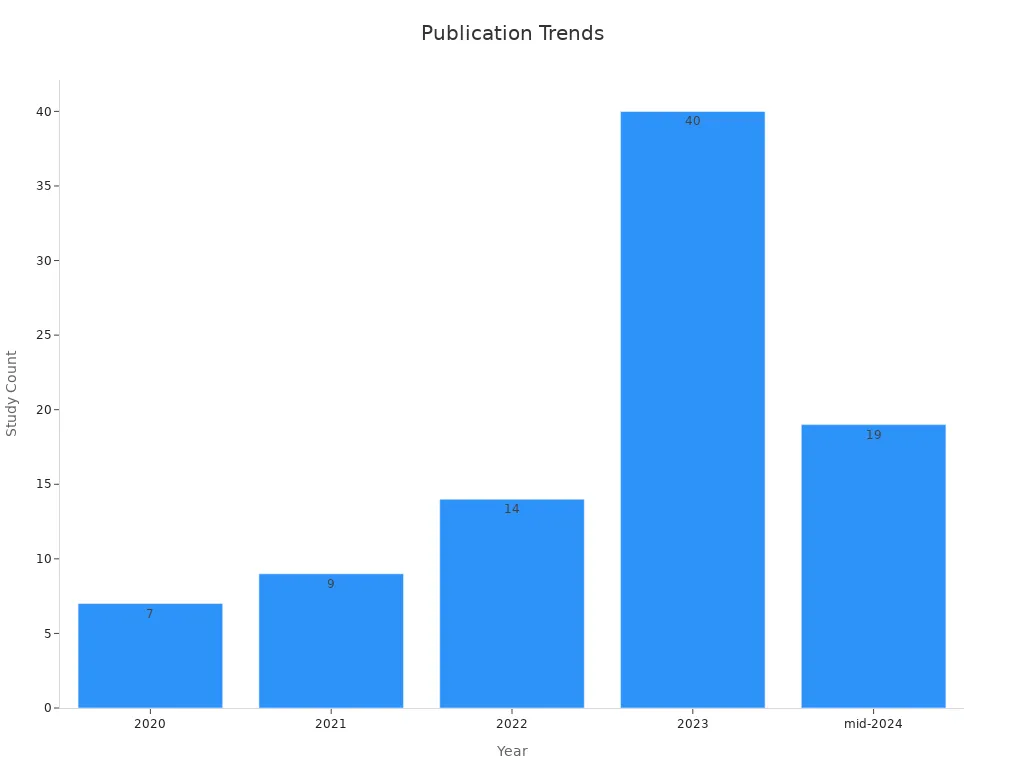

You still face many challenges when you want to make AI explainable. Studies show that most neurological datasets remain private, with 13 out of 17 unavailable for public use. This limits your ability to compare and improve explainable AI methods. In fields like mortality risk and antimicrobial resistance, all datasets are private, making it hard to test new ideas.

You also see a trade-off between accuracy and interpretability. When you use a complex model, you may get better results, but the process becomes harder to explain. Protecting sensitive data and ensuring privacy add more layers to the challenge. Developers must also consider energy use, security, and how to scale explanations for large systems.

Note: Interdisciplinary teamwork is essential. You need AI researchers, domain experts, and industry leaders to work together. This collaboration helps you move from research to real-world solutions.

You can help shape the future of explainable AI by asking for clear explanations and supporting responsible practices. As research grows, you will see new tools and frameworks that make the decision process more transparent and trustworthy.

You need explainable AI to ensure transparency, trust, and ethical progress in every decision that affects your life. When you use explainable systems, you gain clear reasons behind outcomes, which supports fairness and accountability. In healthcare and education, explainable models help you trust AI’s feedback and protect your rights.

You should always demand explainable AI in the tools you use or develop. This approach leads to better decisions, safer outcomes, and a more just society.

FAQ

What is the main goal of explainable AI?

Explainable AI helps you understand how and why an AI system makes decisions. You can see the key factors behind each outcome. This builds trust and lets you use AI more safely.

How does explainable AI help prevent bias?

You can spot unfair patterns when you use explainable AI. It shows which features influence decisions. This helps you find and fix hidden biases in your models.

Can you use explainable AI with any machine learning model?

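Yes, you can use explainable AI tools like LIME or SHAP with most models. LIME is model-agnostic by design, and SHAP offers both model-agnostic and model-specific variants. You can get explanations even from black box models, although their quality depends on how faithfully the explanation tracks the model.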

Why should you care about AI transparency in daily life?

AI affects your job, health, and finances. When you understand AI decisions, you can make better choices. Transparency gives you control and helps protect your rights.