The Role of Risk Assessment in the EU AI Act

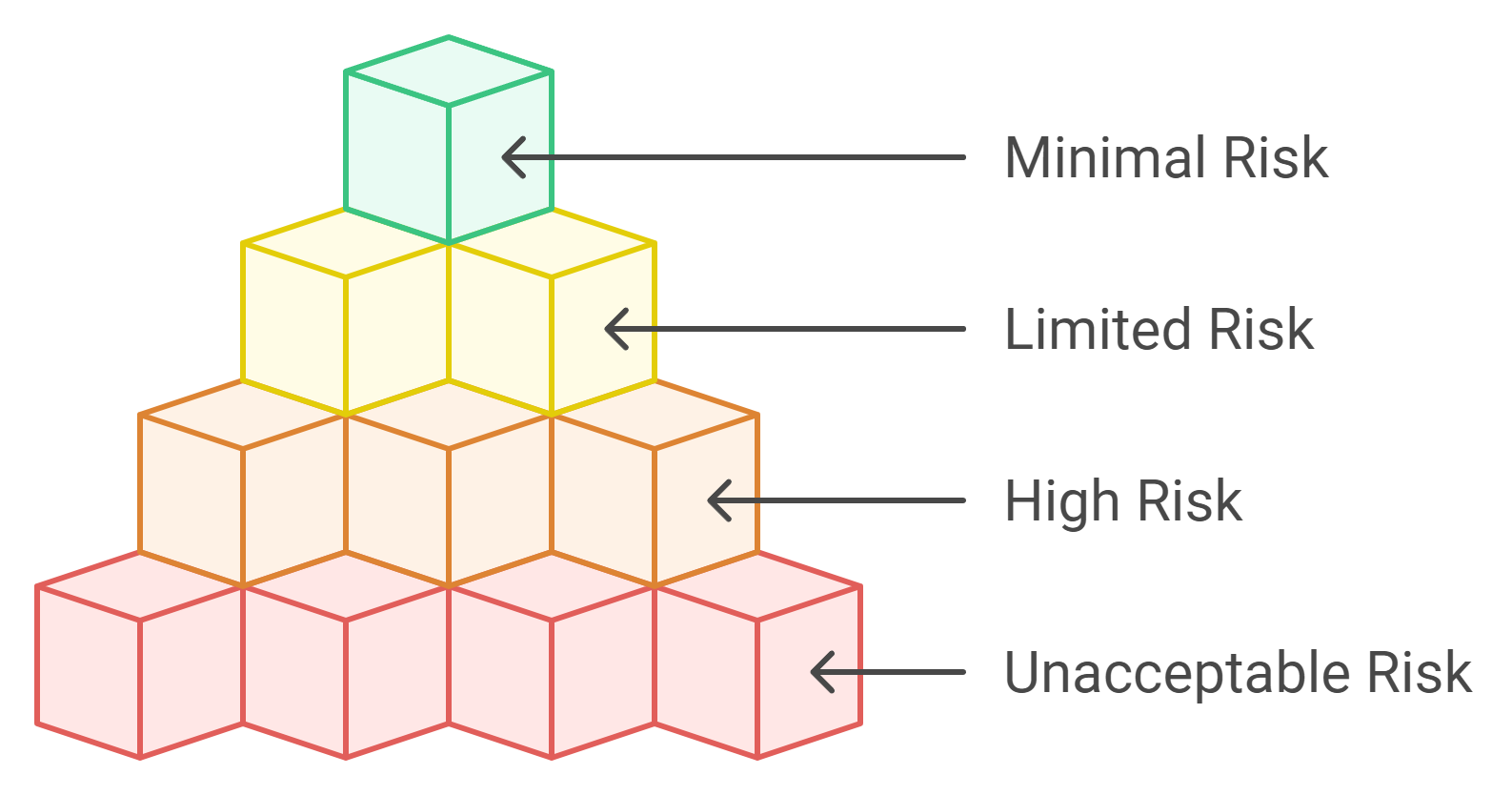

Risk assessment is the backbone of the EU AI Act's regulatory framework. The Act sorts AI systems into four risk levels (unacceptable, high, limited, and minimal) and scales its requirements to each level so that every system meets appropriate safety and ethical standards. This approach prioritizes the protection of health, safety, and fundamental rights while leaving room for responsible AI development. Through risk assessment, stakeholders identify potential harms, evaluate their likelihood and severity, and implement measures to mitigate them. This structured methodology supports compliance and safeguards societal values in a rapidly evolving AI landscape.

Key Takeaways

Risk assessment is essential for categorizing AI systems into four risk levels: unacceptable, high, limited, and minimal, ensuring safety and ethical compliance.

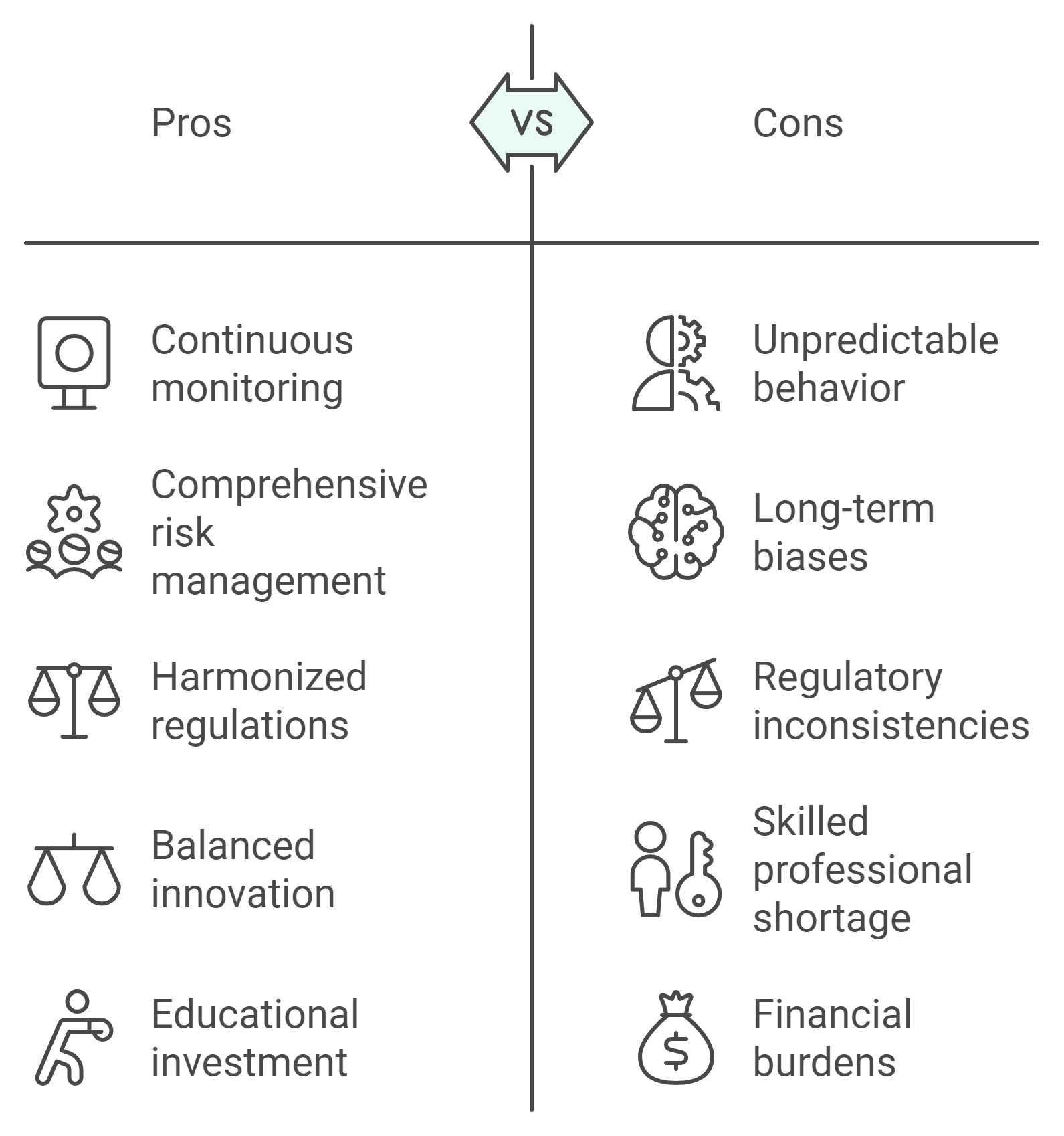

High-risk AI systems require stringent regulatory obligations, including comprehensive risk management and continuous monitoring to mitigate potential harms.

Limited and minimal risk AI systems face lighter regulation, which promotes innovation; limited-risk systems must still meet transparency obligations that help maintain user trust.

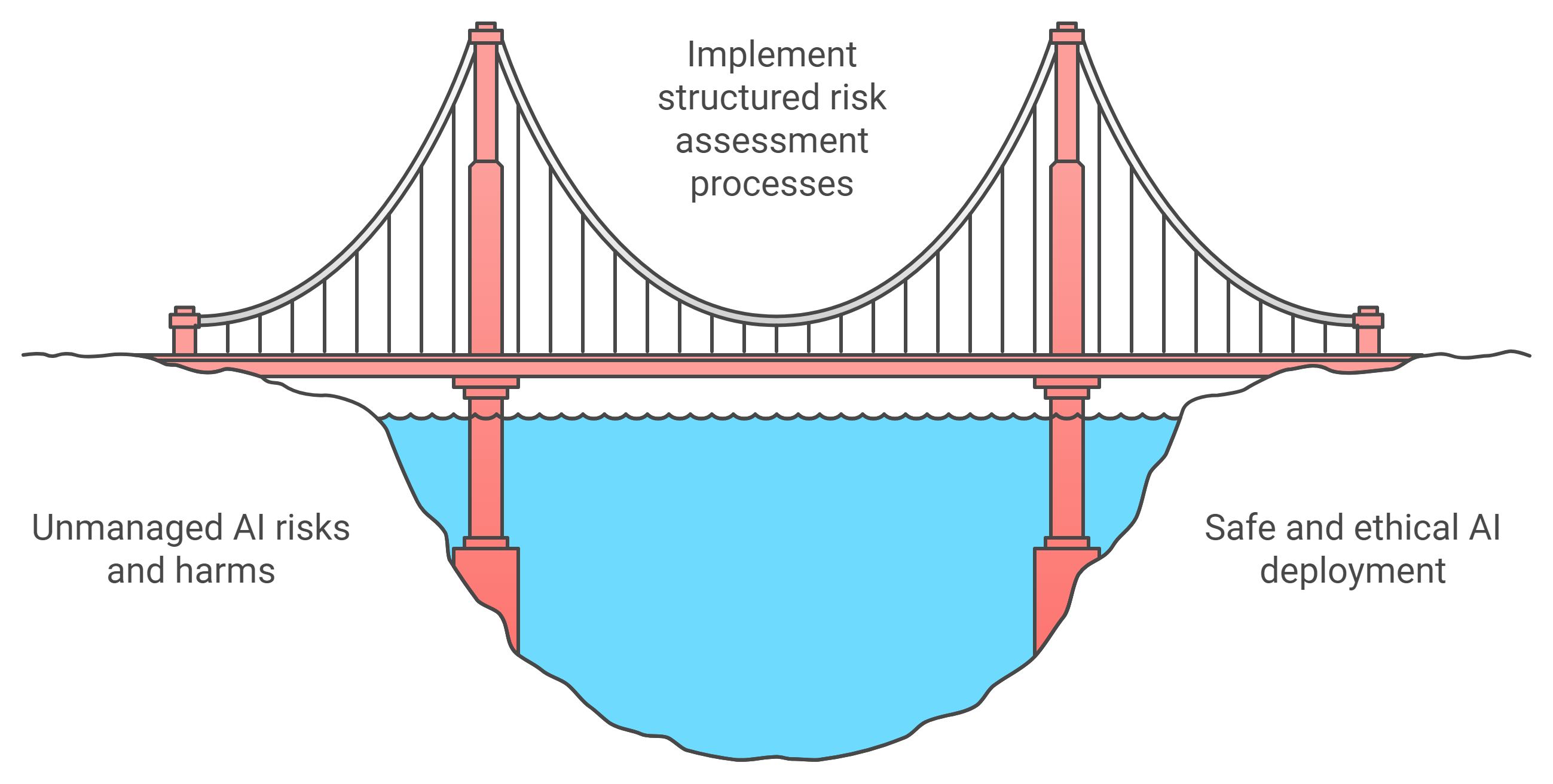

Effective risk assessment involves identifying potential harms, evaluating their likelihood and severity, and developing mitigation strategies to ensure compliance.

Collaboration among developers, regulators, and stakeholders is crucial for addressing the complexities of AI governance and fostering responsible AI development.

Adopting established standards, such as ISO guidelines, enhances the reliability of risk assessments and aligns practices with global best practices.

Continuous monitoring and adaptive frameworks are necessary to manage the evolving risks associated with AI technologies, ensuring they remain aligned with societal values.

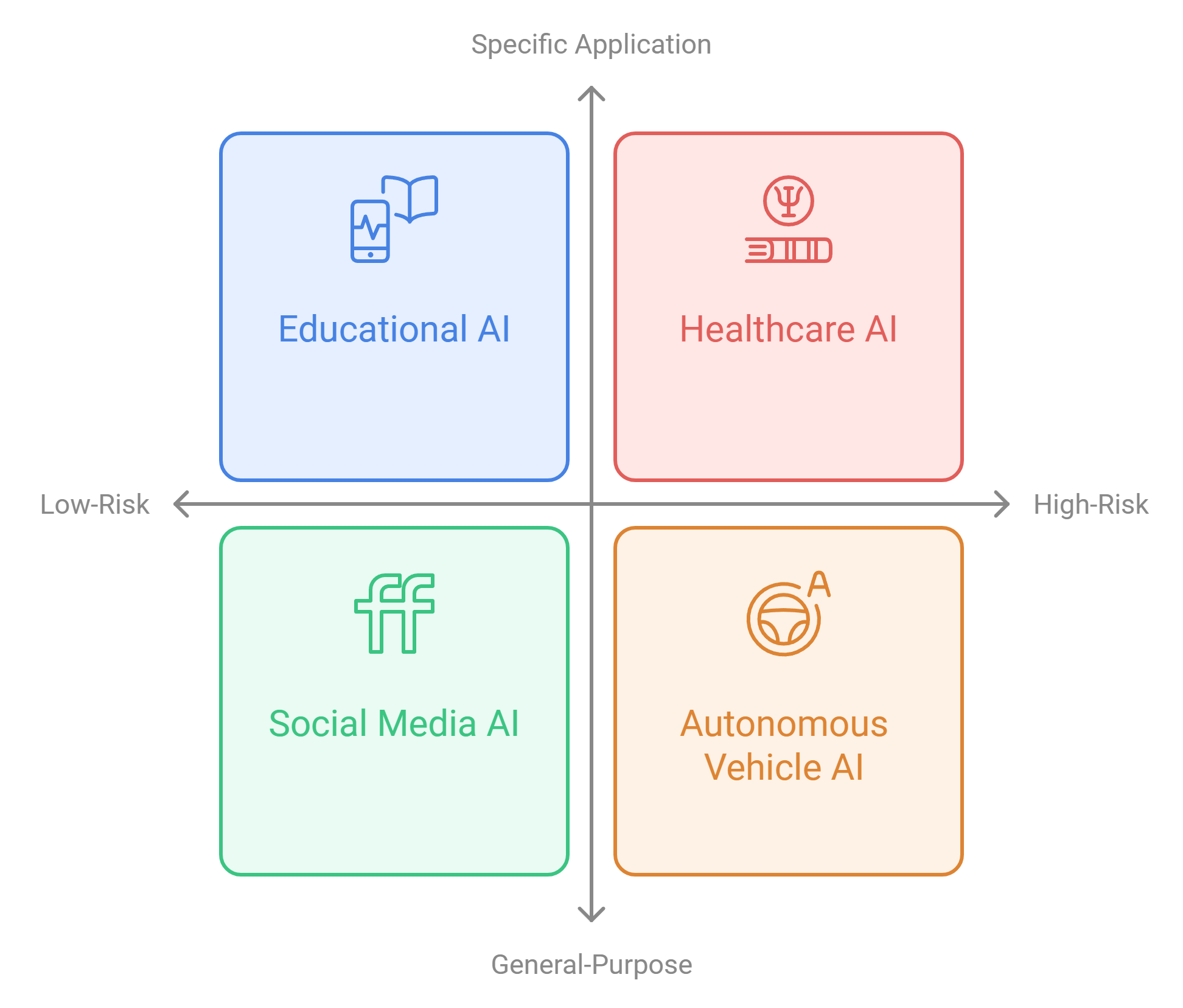

The Risk-Based Classification Framework in the EU AI Act

The AI Act introduces a structured framework that categorizes AI systems based on their associated risks. This classification ensures that regulatory measures align with the potential impact of each system. By adopting this risk-based approach, the EU AI Act aims to safeguard public interests while fostering innovation.

Categories of AI Systems

The AI Act divides AI systems into four distinct categories. Each category reflects the level of risk posed by the system and determines the corresponding regulatory requirements.

Unacceptable Risk AI Systems

AI systems classified as unacceptable risk are prohibited under the AI Act. These systems pose significant threats to fundamental rights, safety, or societal values. Examples include AI applications that manipulate human behavior through subliminal techniques, exploit the vulnerabilities of specific groups such as children, or enable social scoring. By banning such systems, the Act prioritizes the protection of individuals and communities.

High-Risk AI Systems

High-risk AI systems face stringent regulatory obligations due to their potential to cause harm. These systems often operate in critical sectors such as healthcare, transportation, law enforcement, employment, and education. Providers of high-risk AI must implement a risk management system that runs throughout the system's lifecycle. This includes conducting thorough risk assessments, applying data governance practices, maintaining detailed technical documentation, enabling human oversight, and completing a conformity assessment before the system is placed on the market. The goal is to minimize risks while enabling the safe deployment of these technologies.

Limited Risk AI Systems

Limited risk AI systems require less oversight than high-risk systems. This category covers systems such as chatbots, or tools that generate synthetic content like deepfakes, which interact with users but do not pose significant safety or ethical concerns. Their providers must still ensure transparency, for example by informing users that they are interacting with AI or that content has been artificially generated. This category reflects the Act's balanced approach to regulation, where obligations correspond to the level of risk.

Minimal Risk AI Systems

Minimal risk AI systems represent the least regulated category. These systems include applications like spam filters or AI-powered games, which pose negligible risks to users. The AI Act imposes no additional mandatory obligations on them, although providers may voluntarily adopt codes of conduct. This approach encourages innovation in low-risk AI applications without adding unnecessary regulatory burdens.

The Role of Risk Assessment in Classification

Risk assessment determines how an AI system is classified. It provides a systematic method for evaluating potential harms and aligning regulatory measures with risk levels.

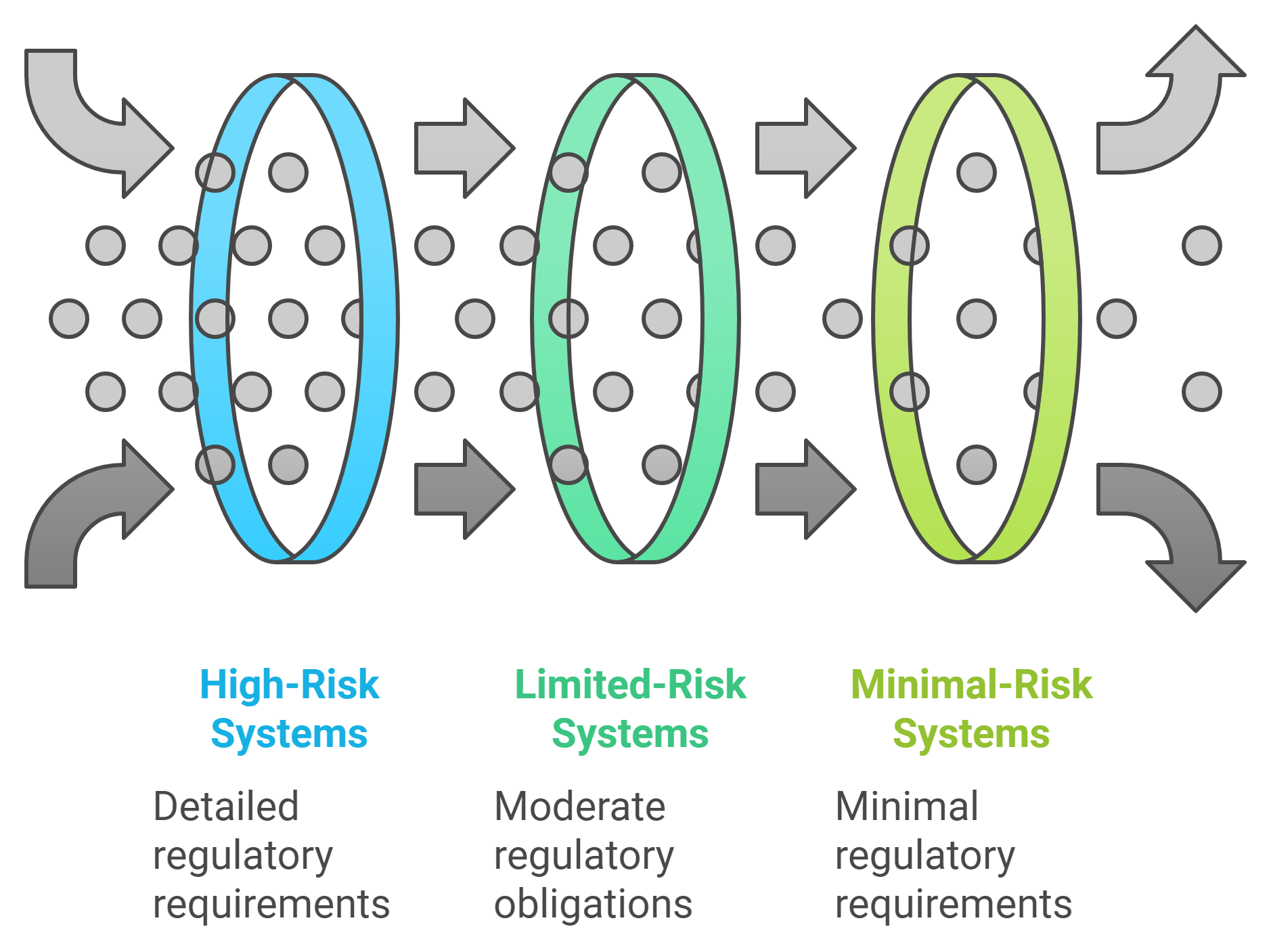

Determining Regulatory Obligations Based on Risk Levels

The AI Act uses risk assessment to establish appropriate regulatory obligations for each category. High-risk systems, for instance, must comply with detailed requirements, including pre-market conformity assessments and ongoing monitoring. Limited and minimal risk systems face fewer obligations, reflecting their lower potential for harm. This proportional approach ensures that regulations remain effective without stifling innovation.

Ensuring Proportionality in AI Regulation

Proportionality is a core principle of the AI Act. Risk assessment ensures that regulatory measures correspond to the severity and likelihood of risks. For example, high-risk systems undergo rigorous scrutiny, while minimal risk systems enjoy greater flexibility. This balance promotes responsible AI development while addressing societal concerns.
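The proportional link between risk tier and obligations can be pictured as a simple lookup. The sketch below is an illustration, not a legal summary: the four tier names come from the Act, but the obligation strings and the `obligations_for` helper are hypothetical simplifications that omit many details (for example, exceptions to the prohibitions and the separate rules for general-purpose AI models).

```python
from __future__ import annotations

from enum import Enum


class RiskTier(Enum):
    """The four risk tiers described in the EU AI Act."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, heavily simplified obligations per tier.
# Illustrates proportionality only; not legal advice.
OBLIGATIONS: dict[RiskTier, list[str]] = {
    RiskTier.UNACCEPTABLE: ["prohibited: may not be placed on the EU market"],
    RiskTier.HIGH: [
        "risk management system across the lifecycle",
        "data governance and technical documentation",
        "human oversight measures",
        "conformity assessment before market entry",
        "post-market monitoring",
    ],
    RiskTier.LIMITED: ["inform users they are interacting with AI"],
    RiskTier.MINIMAL: [],  # no additional mandatory obligations
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return the (simplified) obligations attached to a risk tier."""
    return OBLIGATIONS[tier]


if __name__ == "__main__":
    for tier in RiskTier:
        print(f"{tier.value}: {len(obligations_for(tier))} obligation(s)")
```

Modelling tiers as an enum keeps the classification explicit, so a system cannot be assigned a tier the framework does not define.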

Methodologies and Processes for Risk Assessment

Steps in Conducting Risk Assessment

Effective risk assessment involves a structured process to identify, evaluate, and mitigate potential risks associated with AI systems. This process ensures that AI technologies align with safety, ethical, and societal standards.

Identifying Risks and Potential Harms

The first step in risk assessment focuses on identifying risks and potential harms that an AI system might pose. Developers analyze the intended use of the AI system and its possible unintended consequences. For example, an AI application in healthcare may improve diagnostic accuracy but could also lead to misdiagnoses if improperly trained. Recognizing these risks early allows stakeholders to address them proactively.

Assessing the Likelihood and Severity of Risks

After identifying risks, the next step evaluates their likelihood and severity. This involves analyzing how often a risk might occur and the extent of harm it could cause. Data-driven methods, such as statistical analysis of test results and incident records, can support this step by revealing patterns across large datasets. However, human expertise remains essential to interpret contextual nuances that automated analysis might overlook. Combining quantitative analysis with domain-specific knowledge produces a more complete evaluation.
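A common way to combine likelihood and severity, used in many risk management practices, is a risk matrix in which each risk's score is the product of two ordinal ratings. The AI Act does not prescribe this method; the 1 to 5 scales, the band thresholds, and the example risks below are assumptions chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Risk:
    """An identified risk with ordinal scores on an assumed 1-5 scale."""
    description: str
    likelihood: int  # 1 = rare ... 5 = almost certain
    severity: int    # 1 = negligible ... 5 = critical

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 1-5 scale.
        for name in ("likelihood", "severity"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    def score(self) -> int:
        # Risk-matrix scoring: likelihood multiplied by severity (1-25).
        return self.likelihood * self.severity


def rating(score: int) -> str:
    """Map a 1-25 score to a rating band (thresholds are assumptions)."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def prioritize(risks: list[Risk]) -> list[tuple[str, int, str]]:
    """Return risks sorted from highest to lowest score, with their rating."""
    ranked = sorted(risks, key=lambda r: r.score(), reverse=True)
    return [(r.description, r.score(), rating(r.score())) for r in ranked]


if __name__ == "__main__":
    risks = [
        Risk("Misdiagnosis for under-represented patient groups", 3, 5),
        Risk("Model outage delays non-urgent reports", 2, 2),
        Risk("Clinicians over-rely on model output", 4, 3),
    ]
    for row in prioritize(risks):
        print(row)
```

The ranked output gives a starting order for mitigation work; the numeric score does not replace the expert judgment described above.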

Developing and Implementing Mitigation Strategies

The final step involves creating and applying mitigation strategies to address identified risks. These strategies may include refining algorithms, improving data quality, or implementing robust monitoring systems. For high-risk AI systems, a risk management system must be established to document and continuously update these measures. This ensures that the AI system operates safely and complies with regulatory requirements.

Existing Standards and Practices

Adopting established standards and best practices strengthens the risk assessment process. These frameworks provide guidance for evaluating and managing risks effectively.

Relevance of ISO Standards to AI Risk Management

International standards, such as those developed by the International Organization for Standardization (ISO), play a crucial role in AI risk management. ISO standards offer a structured approach to assessing risks, ensuring consistency and reliability. For instance, ISO 31000 provides general principles and guidelines for risk management, and ISO/IEC 23894 adapts that guidance specifically to AI. These standards help organizations implement risk management measures that align with global best practices.

Industry Best Practices for Assessing AI Risks

Industry leaders have developed best practices to address the unique challenges of AI risk assessment. These practices emphasize transparency, accountability, and collaboration. For example, companies often conduct regular audits of their AI systems to identify potential vulnerabilities. They also engage with stakeholders, including regulators and end-users, to ensure that their systems meet ethical and societal expectations. By following these practices, organizations can build trust and minimize risks.

Gaps and Challenges in Current Approaches

Despite advancements in risk assessment methodologies, several gaps and challenges remain. Addressing these issues is essential for improving the effectiveness of risk management in AI systems.

Defining AI-Specific Risks and Their Implications

AI systems present unique risks that differ from those in traditional technologies. For example, the adaptive nature of AI can lead to unpredictable behaviors, making it difficult to anticipate all potential harms. Defining these AI-specific risks requires a deep understanding of both the technology and its applications. Without clear definitions, organizations may struggle to implement effective risk management measures.

Addressing the Lack of Standardized Tools for Risk Assessment

The absence of standardized tools for AI risk assessment poses another significant challenge. While some organizations have developed proprietary tools, their applicability varies across different AI systems. This lack of standardization can lead to inconsistencies in how risks are evaluated and managed. Developing widely accepted tools and frameworks would enhance the reliability and comparability of risk assessments.

Challenges in Implementing Risk Assessment for AI Systems

Complexity of AI Technologies

Managing the dynamic and adaptive nature of AI

AI technologies evolve rapidly, and many systems adapt to new data and environments. This dynamic nature makes it hard to predict their behavior over time. Microsoft's chatbot Tay demonstrated this unpredictability in 2016, when it began posting offensive tweets after interacting with users on Twitter. Its developers had not anticipated how quickly users could teach the system to repeat harmful content, and Microsoft took it offline within a day of launch. Such incidents highlight the need for continuous monitoring and updates to keep AI systems aligned with ethical and societal standards. Managing this adaptability requires robust frameworks that can address unforeseen changes in AI behavior.

Addressing uncertainties in long-term impacts

AI systems often produce outcomes that extend beyond immediate use cases, making it difficult to assess their long-term effects. For example, AI applications trained on biased datasets can perpetuate discrimination, reinforcing social inequities over time. These biases may not become apparent until the system has been in operation for an extended period. Risk assessment must account for these uncertainties by incorporating strategies to evaluate potential long-term consequences. Establishing a comprehensive risk management system ensures that developers can identify and mitigate risks as they emerge, safeguarding against unintended harm.

Ambiguity in Regulatory Standards

Variability in interpretation across EU member states

The EU AI Act is a regulation that applies directly in every member state, but national authorities carry out much of its enforcement, and their interpretations may differ. For instance, one authority might read the criteria for high-risk classification more strictly than another. This variability complicates compliance for organizations operating across multiple jurisdictions. Clearer guidance and harmonized enforcement mechanisms are essential to address these disparities and ensure uniform application of the Act's provisions.

Balancing innovation with regulatory compliance

Striking a balance between fostering innovation and ensuring compliance presents a significant challenge. Overly stringent regulation may stifle creativity and slow the development of new AI technologies. Conversely, lenient oversight could allow harmful or unethical applications. Amazon's experimental recruiting tool, which reportedly penalized resumes containing the word "women's", illustrates what insufficient risk assessment can produce: the system learned bias from historical hiring data, and Amazon abandoned the tool after the problem came to light, at some cost to its reputation. Effective risk management measures must prioritize both innovation and accountability, enabling organizations to develop AI responsibly.

Resource and Expertise Limitations

Shortage of skilled professionals in AI risk assessment

The demand for experts in AI risk assessment exceeds the available supply. Organizations often struggle to find professionals with the technical knowledge and experience needed to evaluate complex AI systems. This shortage hampers the ability to conduct thorough assessments and implement effective risk management measures. Investing in education and training programs can help bridge this gap, equipping more individuals with the skills required to navigate the challenges of AI governance.

Financial burdens on smaller organizations

Smaller organizations face significant financial challenges when implementing risk assessment processes. Conducting comprehensive evaluations and maintaining compliance with regulatory standards require substantial resources. These costs can be prohibitive for startups and small businesses, limiting their ability to compete in the AI market. Policymakers must consider these constraints and provide support, such as subsidies or simplified compliance pathways, to ensure that smaller entities can participate in the development of ethical and innovative AI solutions.

Implications of Risk Assessment for High-Risk and General-Purpose AI Systems

High-Risk AI Systems

Stricter compliance requirements and regulatory oversight

High-risk AI systems demand rigorous oversight due to their potential impact on critical sectors like healthcare, transportation, and law enforcement. The EU AI Act enforces strict compliance measures to ensure these systems operate safely and ethically. Before placing a system on the market, providers must complete a conformity assessment verifying that it meets the Act's requirements. These evaluations include testing for accuracy, robustness, and fairness. After deployment, providers carry out post-market monitoring, while national market surveillance authorities supervise compliance and act on emerging risks. This approach ensures that high-risk AI systems align with societal values and do not compromise safety or fundamental rights.

Necessity for continuous monitoring and updates

The dynamic nature of AI requires ongoing monitoring to address evolving risks. High-risk systems often adapt to new data, which can lead to unforeseen consequences. Developers must implement robust mechanisms to track system performance and identify potential issues. Regular updates to algorithms and datasets help mitigate risks and improve system reliability. For example, an AI system used in medical diagnostics must undergo periodic reviews to ensure it remains accurate as medical knowledge advances. Continuous monitoring safeguards against errors and ensures compliance with the EU AI Act's requirements.
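One simple monitoring mechanism compares a system's recent accuracy, measured on cases whose outcomes have since been confirmed, against the accuracy recorded at validation time. The sketch below is a minimal illustration under stated assumptions: the `AccuracyMonitor` class, the window size, and the tolerance are hypothetical choices, not requirements of the AI Act.

```python
from __future__ import annotations

from collections import deque


class AccuracyMonitor:
    """Track rolling accuracy of a deployed model and flag degradation.

    Window size and tolerance are illustrative assumptions; real values
    should come from the system's own risk assessment.
    """

    def __init__(self, baseline_accuracy: float, window: int = 100,
                 tolerance: float = 0.05) -> None:
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # Keep only the most recent `window` outcomes.
        self.outcomes: deque[bool] = deque(maxlen=window)

    def record(self, prediction, ground_truth) -> None:
        """Store whether a prediction matched the later-confirmed label."""
        self.outcomes.append(prediction == ground_truth)

    def rolling_accuracy(self) -> float | None:
        """Share of correct predictions in the current window, if any."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """True when rolling accuracy drops below baseline minus tolerance."""
        acc = self.rolling_accuracy()
        if acc is None or len(self.outcomes) < self.outcomes.maxlen:
            return False  # wait for a full window before alerting
        return acc < self.baseline - self.tolerance


if __name__ == "__main__":
    monitor = AccuracyMonitor(baseline_accuracy=0.92, window=10)
    # Simulated confirmed outcomes: 7 of 10 predictions correct.
    for pred, truth in [(1, 1)] * 7 + [(1, 0)] * 3:
        monitor.record(pred, truth)
    print(monitor.rolling_accuracy(), monitor.needs_review())  # prints: 0.7 True
```

Waiting for a full window before alerting avoids false alarms from a handful of early cases; a flagged review would then trigger the algorithm and dataset updates described above.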

General-Purpose AI Systems

Challenges in assessing risks for versatile applications

General-purpose AI systems present unique challenges due to their broad applicability. These systems, designed for diverse tasks, often operate in unpredictable environments. Assessing risks becomes complex because their behavior varies based on how users deploy them. For instance, a language model might assist in education but could also generate harmful content if misused. Developers must anticipate potential risks across multiple use cases. This requires a comprehensive understanding of the system's capabilities and limitations. Addressing these challenges ensures that general-purpose AI systems contribute positively to society.

Importance of adaptive and flexible risk assessment frameworks

Adaptive frameworks play a crucial role in managing the risks associated with general-purpose AI systems. These frameworks allow developers to evaluate risks dynamically as new applications emerge. Flexibility ensures that risk assessments remain relevant even as AI technologies evolve. For example, a framework might include guidelines for monitoring user interactions to identify misuse. By incorporating adaptive measures, developers can respond effectively to unforeseen risks. This approach aligns with the EU AI Act's emphasis on proactive risk management and fosters responsible innovation.

Strategies for Compliance and Risk Mitigation

Collaboration among developers, regulators, and stakeholders

Collaboration is essential for effective risk mitigation. Developers, regulators, and stakeholders must work together to address the complexities of AI governance. Open communication ensures that all parties understand the risks and contribute to solutions. For example, developers can share technical insights, while regulators provide guidance on compliance. Stakeholders, including end-users, offer valuable perspectives on ethical considerations. This collaborative approach builds trust and promotes the responsible deployment of AI systems.

Leveraging AI governance tools to manage risks effectively

AI governance tools enhance the ability to manage risks efficiently. These tools include software solutions for monitoring system performance, detecting biases, and ensuring transparency. For instance, audit tools can analyze decision-making processes to identify potential ethical concerns. Developers can use these tools to document compliance with the EU AI Act and maintain accountability. By leveraging governance tools, organizations can streamline risk management processes and uphold high standards of safety and ethics.
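As an example of what a bias-detection check inside such a tool might compute, the sketch below compares the rate of favourable decisions across groups and reports the ratio of the lowest rate to the highest. The 0.8 threshold in the usage example is the "four-fifths rule" borrowed from US employment guidance; it is an assumption for illustration and not a test defined by the EU AI Act. The function names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable


def selection_rates(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Compute the share of favourable decisions per group.

    `decisions` yields (group, selected) pairs, where `selected` is True
    when the system made a favourable decision for that individual.
    """
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def impact_ratio(decisions: Iterable[tuple[str, bool]]) -> float:
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(decisions)
    if not rates:
        raise ValueError("no decisions to evaluate")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody selected in any group: no disparity in rates
    return min(rates.values()) / highest


if __name__ == "__main__":
    decisions = ([("A", True)] * 6 + [("A", False)] * 4
                 + [("B", True)] * 3 + [("B", False)] * 7)
    ratio = impact_ratio(decisions)
    print(selection_rates(decisions), ratio)
    if ratio < 0.8:  # assumed threshold (four-fifths rule)
        print("Potential disparate impact: flag for human review")
```

A ratio below the chosen threshold does not prove unlawful discrimination; it signals that the system's decisions deserve closer human review and documentation.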

The EU AI Act establishes a robust framework that prioritizes safety, ethics, and societal values in AI systems. By categorizing AI technologies based on their risk levels, it ensures that regulatory measures align with potential impacts. Addressing challenges like ambiguity in standards, technological complexity, and resource limitations remains critical for effective implementation. Collaboration among developers, regulators, and stakeholders fosters innovation while maintaining compliance. The Act’s emphasis on adaptive standards and ethical governance sets a global benchmark, encouraging responsible AI development that aligns with human values and societal needs.
